Spaces:

Running

on

Zero

Sa2VA: Marrying SAM2 with LLaVA for Dense Grounded Understanding of Images and Videos

[🏠 Sa2VA] [📜 arXiv] [🤗 HuggingFace] [🎥 Introduction] [🧑💻 GitHub] [Online Demo (Sa2VA-4B)]

Haobo Yuan1* · Xiangtai Li2*† · Tao Zhang2,3* · Zilong Huang2 · Shilin Xu4 ·Shunping Ji3 ·Yunhai Tong4 ·

Lu Qi2 · Jiashi Feng2 · Ming-Hsuan Yang1

1UC Merced 2ByteDance Seed 3WHU 4PKU

† project lead * the first three authors equally contribute to the work.

Overiew

This repository contains the code for the paper "Sa2VA: Marrying SAM2 with LLaVA for Dense Grounded Understanding of Images and Videos".

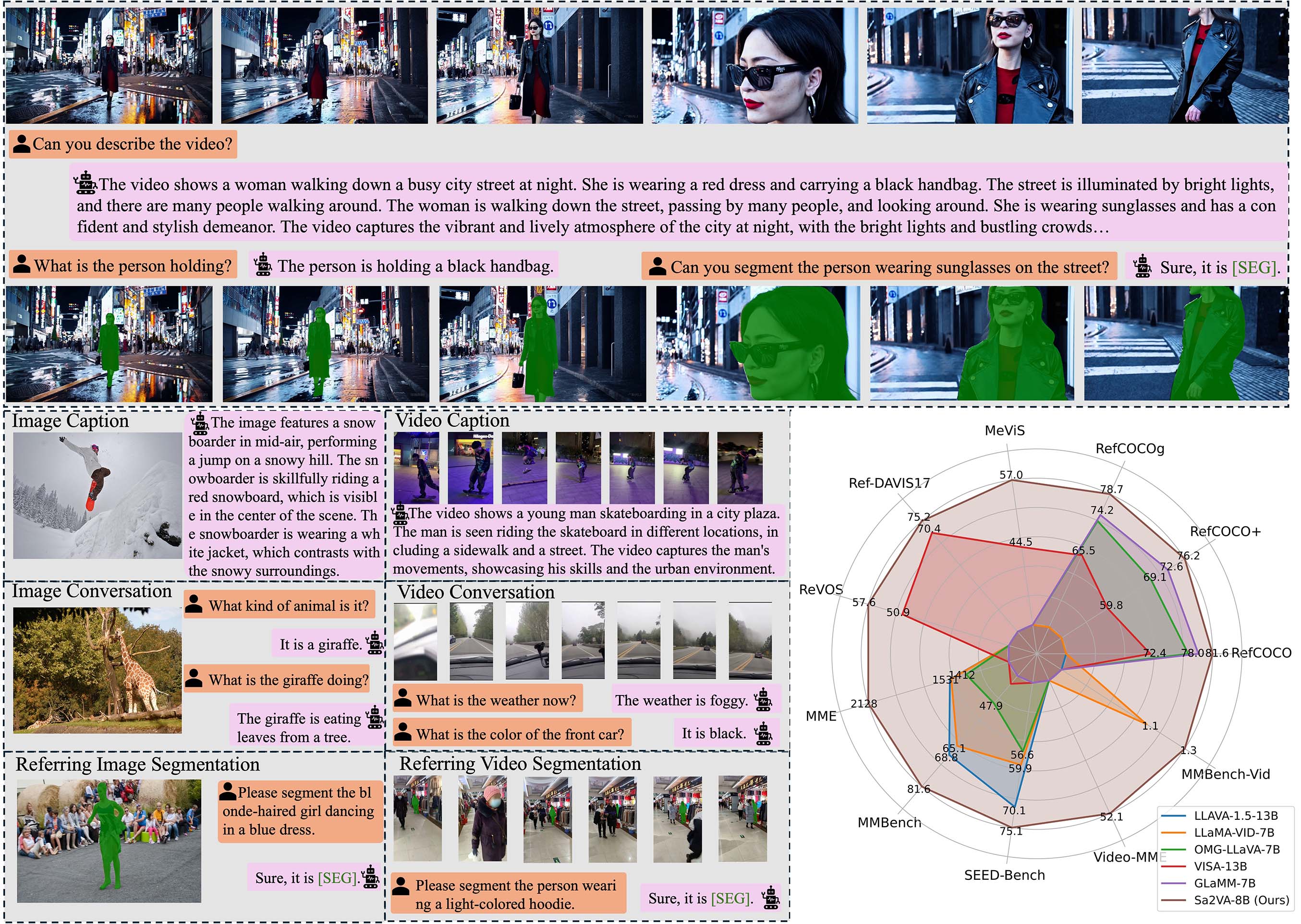

Sa2VA is the the first unified model for dense grounded understanding of both images and videos. Unlike existing multi-modal large language models, which are often limited to specific modalities and tasks, Sa2VA supports a wide range of image and video tasks, including referring segmentation and conversation, with minimal one-shot instruction tuning. Sa2VA combines SAM-2, a foundation video segmentation model, with LLaVA, an advanced vision-language model, and unifies text, image, and video into a shared LLM token space.

Model Zoo

We provide the following models:

| Model Name | Base MLLM | Language Part | HF Link |

|---|---|---|---|

| Sa2VA-1B | InternVL2.0-1B | Qwen2-0.5B-Instruct | 🤗 link |

| Sa2VA-4B | InternVL2.5-4B | Qwen2.5-3B-Instruct | 🤗 link |

| Sa2VA-8B | InternVL2.5-8B | internlm2_5-7b-chat | 🤗 link |

Gradio Demos

We provide a script that implements interactive chat using gradio, which requires installing gradio==4.42.0. You can try it to quickly build a chat interface locally.

PYTHONPATH=. python projects/llava_sam2/gradio/app.py ByteDance/Sa2VA-4B

Quick Start

Our Sa2VA model is available on 🤗HuggingFace. With very few steps, you can try it with your own data. You can install the demo/requirements.txt to avoid training-only packages.

Option1 - scripts:

Supposing you have a folder (PATH_TO_FOLDER) that contains images of a video, you can use the following script to chat with the Sa2VA model or segment the objects in the videos.

> cd scripts

> python demo.py PATH_TO_FOLDER --model_path ByteDance/Sa2VA-8B --work-dir OUTPUT_DIR --text "<image>Please describe the video content."

If the output contains the segmentation results, the results will be saved to OUTPUT_DIR.

Option2 - Jupter Notebook:

Please refer to demo.ipynb.

Demo

Demo 1

Input Video (Source: La La Land 2016):Instruction: "Please segment the girl wearing the yellow dress."

Demo 3

Input Video (Source: Internet):Instruction: "Please segment the person wearing sun glasses."

Demo 4

Input Video (Source: Internet):Instruction: "Instruction: "Please segment the singing girl."

Demo 5

Input Video:Instruction: "What is the atmosphere of the scene?"

Answer: "The scene has a dark and mysterious atmosphere, with the men dressed in suits and ties, and the dimly lit room."

Training

Installation

- Please install the python and pytorch first:

> conda create -n vlm python=3.10

> conda activate vlm

> conda install pytorch==2.3.1 torchvision==0.18.1 pytorch-cuda=12.1 cuda -c pytorch -c "nvidia/label/cuda-12.1.0" -c "nvidia/label/cuda-12.1.1"

- Install mmcv:

> pip install mmcv==2.2.0 -f https://download.openmmlab.com/mmcv/dist/cu121/torch2.3/index.html

- Install other dependencies:

> pip install -r requirements.txt

Pretrained Model Preparation

You are expected to download the following pretrained models and place them in the ./pretrained directory:

Data Preparation

(TODO) Please download the training datasets and place them in the data directory. The download link is here.

Training Script

Please run the following script to train:

> bash tools/dist.sh train projects/llava_sam2/configs/sa2va_4b.py 8

References

If you find this repository useful, please consider referring the following paper:

@article{sa2va,

title={Sa2VA: Marrying SAM2 with LLaVA for Dense Grounded Understanding of Images and Videos},

author={Yuan, Haobo and Li, Xiangtai and Zhang, Tao and Huang, Zilong and Xu, Shilin and Ji, Shunping and Tong, Yunhai and Qi, Lu and Feng, Jiashi and Yang, Ming-Hsuan},

journal={arXiv},

year={2025}

}