license: mit
datasets:
- nyanko7/danbooru2023
language:
- en

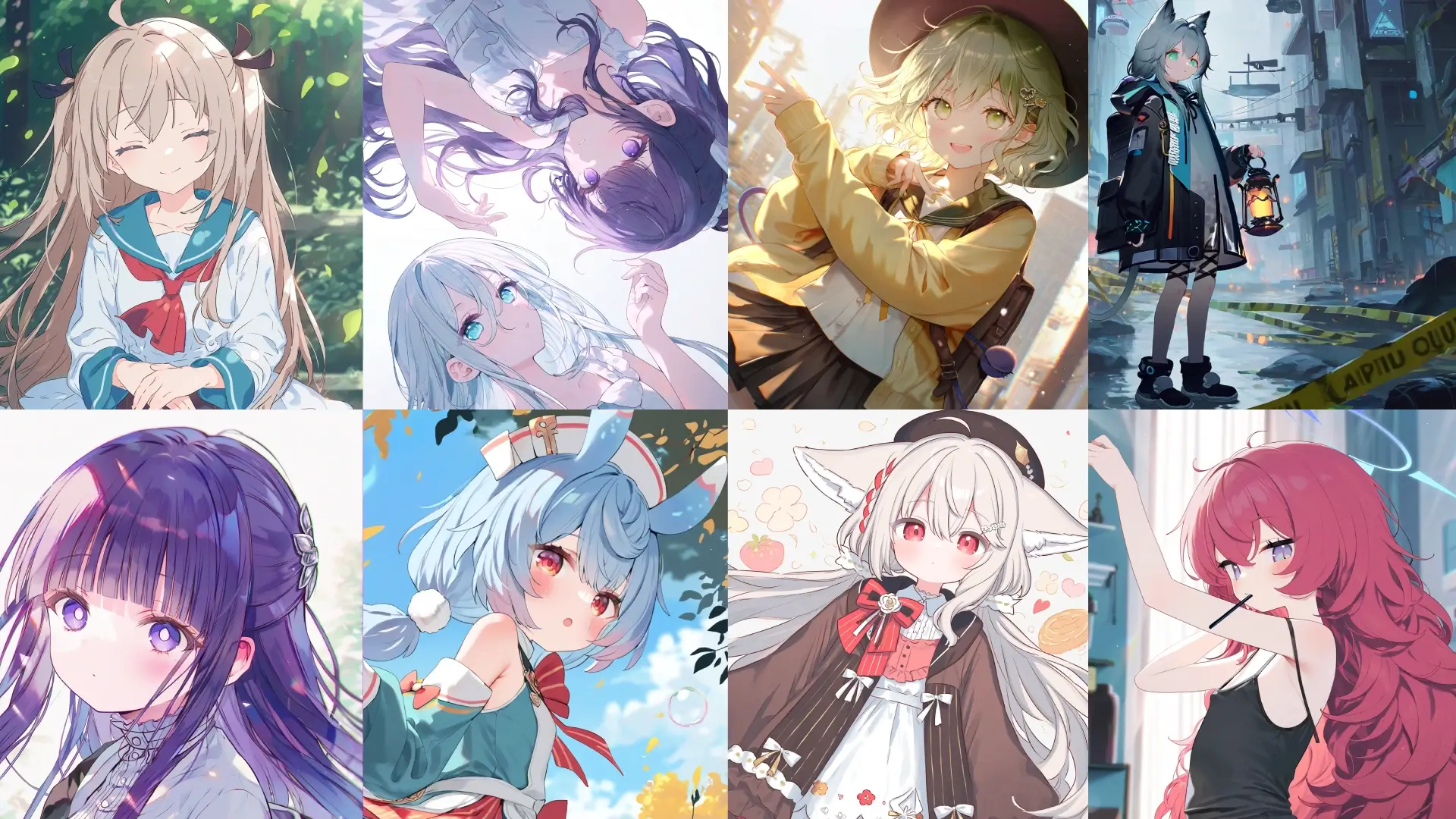

nyaflow-xl [alpha]

This is an experiment in fine-tuning the Stable Diffusion XL (SDXL) model with the Flow Matching training objective.

Training was done in July 2024, starting from SDXL and using several publicly available datasets. [demo]

Model Details

Flow Matching generates a sample from the target data distribution by iteratively transforming a sample drawn from a prior distribution (e.g., a Gaussian). The model is trained to predict the velocity $V_t = \frac{dX_t}{dt}$, which guides it to “move” the sample $X_t$ in the direction of the sample $X_1$. As in prior work (Esser et al., 2024), we sample $t$ from a logit-normal distribution whose underlying Gaussian has zero mean and unit standard deviation, and we use the optimal transport path to construct $X_t$.
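The description above can be illustrated with a minimal, pure-Python sketch. It is not the training code used for this model: the function names (`sample_logit_normal`, `make_training_pair`, `flow_matching_loss`, `euler_sample`) are hypothetical, samples are plain lists of floats rather than latents, and the optimal transport path is assumed to be the straight line $X_t = (1 - t) X_0 + t X_1$ with $X_0$ drawn from a standard Gaussian, so the target velocity is $X_1 - X_0$.

```python
import math
import random


def sample_logit_normal(rng, mean=0.0, std=1.0):
    """Draw t in (0, 1) as the sigmoid of a Gaussian sample."""
    u = rng.gauss(mean, std)
    return 1.0 / (1.0 + math.exp(-u))


def make_training_pair(x1, rng):
    """Build (x_t, t, v_target) for one data sample x1 (a list of floats)."""
    # Prior sample X_0 ~ N(0, I), same shape as the data sample.
    x0 = [rng.gauss(0.0, 1.0) for _ in x1]
    # Timestep from a logit-normal with zero mean and unit std.
    t = sample_logit_normal(rng)
    # Straight-line (optimal transport) path: X_t = (1 - t) X_0 + t X_1.
    x_t = [(1.0 - t) * a + t * b for a, b in zip(x0, x1)]
    # Velocity along that path: dX_t/dt = X_1 - X_0.
    v_target = [b - a for a, b in zip(x0, x1)]
    return x_t, t, v_target


def flow_matching_loss(v_pred, v_target):
    """Mean squared error between predicted and target velocity."""
    return sum((p - q) ** 2 for p, q in zip(v_pred, v_target)) / len(v_target)


def euler_sample(velocity_fn, x0, num_steps=50):
    """Integrate dX/dt = velocity_fn(x, t) from t = 0 to t = 1 with Euler steps."""
    x = list(x0)
    dt = 1.0 / num_steps
    for i in range(num_steps):
        t = i * dt
        v = velocity_fn(x, t)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x
```

In a real training loop, `v_pred` would come from the network evaluated at `(x_t, t)` plus the text conditioning, and `euler_sample` would call that network as `velocity_fn`. Euler integration is only one possible sampler, chosen here for brevity.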

Our training dataset consists of 3.6M recaptioned/tagged image-text pairs, filtered and processed for improved context and stability. Training was completed on a 32×H100 GPU cluster using the DeepSpeed framework. (Thanks for the compute grant!)

We observed consistent improvements in both validation loss and evaluation performance with increased training steps and compute. However, due to a limited training budget, we had to cap the training duration at ~48 hours. Despite these constraints, the baseline SDXL model adapted well to the Flow Matching objective.

The model supports concepts, styles, and detailed character rendering. It maintains semantic alignment for diverse prompts and complex inputs, but it performs poorly with natural language inputs because this training run included only a limited number of natural language (NL) captions. Furthermore, we found that the model may produce oversaturated images and may overfit to some styles.

While nyaflow-xl demonstrates interesting results, it remains a prototype. Feel free to leave comments and criticism.