Chroma: Open-Source, Uncensored, and Built for the Community

Chroma is an 8.9 billion parameter rectified flow transformer capable of generating images from text descriptions.

It is based on FLUX.1 [schnell], with heavy architectural modifications.

Table of Contents

- Chroma: Open-Source, Uncensored, and Built for the Community

- How to run this model

- ComfyUI

- diffusers [WIP]

- Brief tech report

- Architectural modifications

- Training Details

- T5 QAT training [WIP]

- Prior preserving distribution training [WIP]

- Scramming [WIP]

- Blockwise dropout optimizers [WIP]

- Citation

How to run this model

ComfyUI

Requirements

- ComfyUI installation

- Chroma checkpoint (pick the latest version in this repo)

- T5 XXL or T5 XXL fp8 (either of them will work)

- FLUX VAE

- Chroma_Workflow

Manual Installation (Chroma)

- Navigate to your ComfyUI/custom_nodes folder

- Clone the repository:

git clone https://github.com/lodestone-rock/ComfyUI_FluxMod.git

- Restart ComfyUI

- Refresh your browser if ComfyUI is already running

How to run the model

- Put T5_xxl into the ComfyUI/models/clip folder

- Put FLUX VAE into the ComfyUI/models/vae folder

- Put the Chroma checkpoint into the ComfyUI/models/diffusion_models folder

- Load the Chroma workflow into your ComfyUI

- Run the workflow
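If the workflow cannot find a model, checking the folder layout from the steps above can save some time. The helper below is a hypothetical convenience script (it is not part of ComfyUI or ComfyUI_FluxMod). It assumes you pass the path to your ComfyUI folder and that the model files use the .safetensors extension; adjust `exts` if yours differ.

```python
from pathlib import Path

# Where each required file goes, relative to the ComfyUI root (per the steps above).
EXPECTED = {
    "models/clip": "T5 XXL text encoder",
    "models/vae": "FLUX VAE",
    "models/diffusion_models": "Chroma checkpoint",
}

def missing_models(comfy_root, exts=(".safetensors",)):
    """List components whose folder contains no file ending in one of `exts`.

    Assumption: model files are .safetensors; pass other extensions via `exts`.
    """
    root = Path(comfy_root)
    missing = []
    for sub, what in EXPECTED.items():
        folder = root / sub
        found = folder.is_dir() and any(
            p.is_file() and p.suffix in exts for p in folder.iterdir()
        )
        if not found:
            missing.append(f"{what} (expected in {folder})")
    return missing

if __name__ == "__main__":
    for item in missing_models("ComfyUI"):  # hypothetical path to your install
        print("missing:", item)
```

An empty result only means a file with the right extension is present in each folder; it does not check that it is the correct model.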

Architectural Modifications

12B → 8.9B

TL;DR: There are 3.3B parameters that only encode a single input vector, which I replaced with 250M params.

Since FLUX is so big, I had to modify the architecture while making sure minimal knowledge was lost in the process. The most obvious candidate for pruning was the modulation layer. It may look small in the diagram, but in total FLUX allocates 3.3B parameters to it. Without going into too much detail, this layer's job is to tell the model which timestep it is at during the denoising process. It also receives information from the pooled CLIP vectors.
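To see where a number like 3.3B comes from, here is a back-of-the-envelope count. It assumes the layer shapes of the public FLUX.1 reference implementation as we understand them: hidden size 3072, 19 double-stream blocks each with separate image and text modulation (6 vectors each: shift/scale/gate for attention and MLP), 38 single-stream blocks with 3 vectors each, and a final layer with 2. Treat these dimensions as assumptions and check them against the checkpoint you actually use.

```python
# FLUX.1 dimensions as we understand the public reference code (assumption).
HIDDEN = 3072
N_DOUBLE = 19  # double-stream (MMDiT) blocks: image and text streams modulated separately
N_SINGLE = 38  # single-stream blocks: one modulation per block

def linear_params(d_in, d_out):
    """Weights plus bias of a dense layer."""
    return d_in * d_out + d_out

def modulation_params(hidden=HIDDEN, n_double=N_DOUBLE, n_single=N_SINGLE):
    # Double block: two Linear(hidden, 6 * hidden) layers (image + text stream).
    double = n_double * 2 * linear_params(hidden, 6 * hidden)
    # Single block: one Linear(hidden, 3 * hidden) (shift, scale, gate).
    single = n_single * linear_params(hidden, 3 * hidden)
    # Final layer: Linear(hidden, 2 * hidden) (shift, scale before the output projection).
    final = linear_params(hidden, 2 * hidden)
    return double + single + final

total = modulation_params()
print(f"{total:,} modulation parameters (~{total / 1e9:.2f}B)")
# prints: 3,247,448,064 modulation parameters (~3.25B)
```

The small embedding MLPs that produce the conditioning vector add a few tens of millions more, which is consistent with the roughly 3.3B figure above. Almost all of it sits in the per-block projections from 3072 to 6 × 3072 dimensions.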

But after a simple experiment of zeroing out these pooled vectors, the model's output barely changed, which made pruning a breeze! Why? Because the only information left for this layer to encode is a single number in the range 0-1. Yes, you heard that right: 3.3B parameters were being used to encode 8 bytes of float values. So this was the most obvious layer to prune and replace with a simple FFN. The whole replacement process took only a day on my single 3090, and afterwards the model size was reduced to just 8.9B.
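One way to picture "replace it with a simple FFN" is a single small MLP shared by every modulation slot, fed only the timestep (since the pooled vectors turned out not to matter). This is a hypothetical sketch, not Chroma's actual layer: the class name `SharedModulationFFN`, the learned per-slot embedding, and all dimensions (`t_dim`, `idx_dim`, `ffn_dim`) are illustrative choices, and a real 250M-parameter replacement would be far wider and deeper. The slot count of 344 assumes the FLUX.1 block layout from the previous count.

```python
import numpy as np

def timestep_embedding(t, dim=32, max_period=10_000.0):
    """Sinusoidal embedding of a scalar timestep t in [0, 1]."""
    half = dim // 2
    freqs = np.exp(-np.log(max_period) * np.arange(half) / half)
    args = 1000.0 * t * freqs  # scale t up so the embedding varies across [0, 1]
    return np.concatenate([np.cos(args), np.sin(args)])

class SharedModulationFFN:
    """Hypothetical stand-in for the per-block modulation layers.

    One small MLP is shared by all modulation slots; a learned per-slot
    embedding tells it which slot (block, stream, shift/scale/gate) to emit.
    """

    def __init__(self, n_slots, hidden, t_dim=32, idx_dim=32, ffn_dim=128, seed=0):
        rng = np.random.default_rng(seed)
        self.t_dim = t_dim
        self.slot_emb = rng.normal(0.0, 0.02, (n_slots, idx_dim))
        self.w1 = rng.normal(0.0, 0.02, (t_dim + idx_dim, ffn_dim))
        self.b1 = np.zeros(ffn_dim)
        self.w2 = rng.normal(0.0, 0.02, (ffn_dim, hidden))
        self.b2 = np.zeros(hidden)

    def __call__(self, t):
        n_slots = len(self.slot_emb)
        temb = np.broadcast_to(timestep_embedding(t, self.t_dim), (n_slots, self.t_dim))
        x = np.concatenate([temb, self.slot_emb], axis=1)  # (n_slots, t_dim + idx_dim)
        h = x @ self.w1 + self.b1
        h = h / (1.0 + np.exp(-h))                          # SiLU
        return h @ self.w2 + self.b2                         # (n_slots, hidden)

    def num_params(self):
        return sum(a.size for a in (self.slot_emb, self.w1, self.b1, self.w2, self.b2))

# Slots: 19 double blocks x 2 streams x 6 + 38 single blocks x 3 + 2 final = 344.
n_slots = 19 * 2 * 6 + 38 * 3 + 2
ffn = SharedModulationFFN(n_slots=n_slots, hidden=3072)
mods = ffn(0.7)  # every shift/scale/gate vector for timestep t = 0.7
print(mods.shape, f"{ffn.num_params():,} params")
# prints: (344, 3072) 415,616 params
```

Sharing one MLP across slots keeps the parameter count nearly independent of the number of slots (only the small slot-embedding table grows), whereas a separate 3072-to-6×3072 projection per block is what drives the original cost.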

MMDiT Masking

TL;DR: Masking T5 padding tokens enhanced fidelity and increased stability during training.

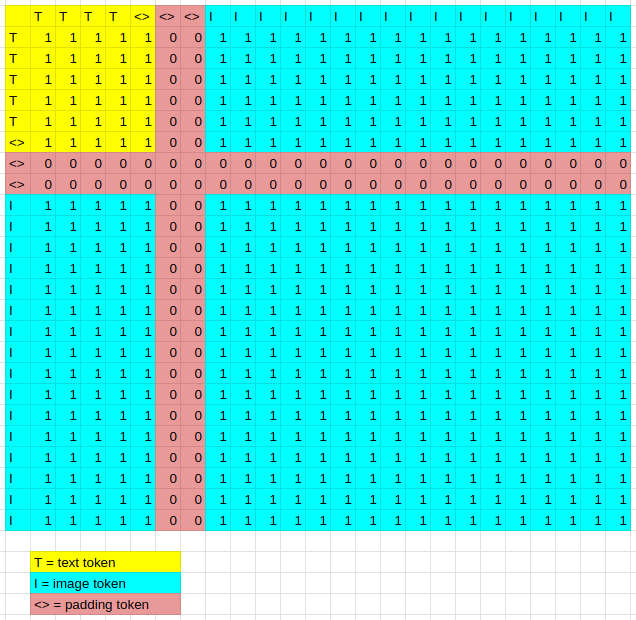

It might not be obvious, but BFL had an oversight during pre-training: they forgot to mask padding tokens in both T5 and MMDiT. So, for example, a short sentence like “a cat sat on a mat” actually looks like this in both T5 and MMDiT:

<bos> a cat sat on a mat <pad><pad>...<pad><pad><pad>

The model ends up paying way too much attention to padding tokens, drowning out the actual prompt information. The fix? Masking, so the model doesn't associate anything with padding tokens.

But there’s a catch: if you mask out all padding tokens, the model falls out of distribution and generates a blurry mess. The solution? Unmask just one padding token while masking the rest.

With this fix, MMDiT now only needs to pay attention to:

<bos> a cat sat on a mat <pad>
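The masking rule can be written as an additive attention bias over the joint text+image key sequence: real text tokens and the first `<pad>` get 0, the remaining pads get -inf, and image tokens are never masked. This is a NumPy sketch under stated assumptions: text tokens come before image tokens in the joint sequence, `seq_lens` counts real tokens including `<bos>`, and the padded length of 512 and the uniform attention scores in the demo are hypothetical, chosen only to show how much attention pads can absorb.

```python
import numpy as np

def text_keep_mask(seq_lens, max_len):
    """(B, max_len) bool: real tokens plus exactly one trailing <pad> stay visible."""
    pos = np.arange(max_len)[None, :]
    lens = np.asarray(seq_lens)[:, None]  # number of real tokens, <bos> included
    return pos <= lens                    # index `lens` is the first <pad>, which we keep

def joint_attention_bias(seq_lens, max_txt_len, n_img):
    """Additive bias over the joint [text, image] keys; -inf hides a key."""
    keep_txt = text_keep_mask(seq_lens, max_txt_len)
    keep_img = np.ones((len(seq_lens), n_img), dtype=bool)  # image tokens never masked
    keep = np.concatenate([keep_txt, keep_img], axis=1)
    bias = np.where(keep, 0.0, -np.inf)
    return bias[:, None, None, :]  # broadcasts over (heads, queries)

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

# "<bos> a cat sat on a mat" = 7 tokens, padded to a hypothetical length of 512.
bias = joint_attention_bias([7], max_txt_len=512, n_img=0)[0, 0, 0]
scores = np.zeros(512)  # pretend every key gets the same raw score
pad_share_unmasked = softmax(scores)[7:].sum()
pad_share_masked = softmax(scores + bias)[7:].sum()
print(f"attention on <pad>: {pad_share_unmasked:.3f} unmasked, {pad_share_masked:.3f} masked")
# prints: attention on <pad>: 0.986 unmasked, 0.125 masked
```

With equal scores, 505 of 512 keys are padding, so pads soak up almost all the attention; after masking only the single kept `<pad>` remains.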

Timestep Distributions

TL;DR: A custom timestep distribution prevents loss spikes during training.

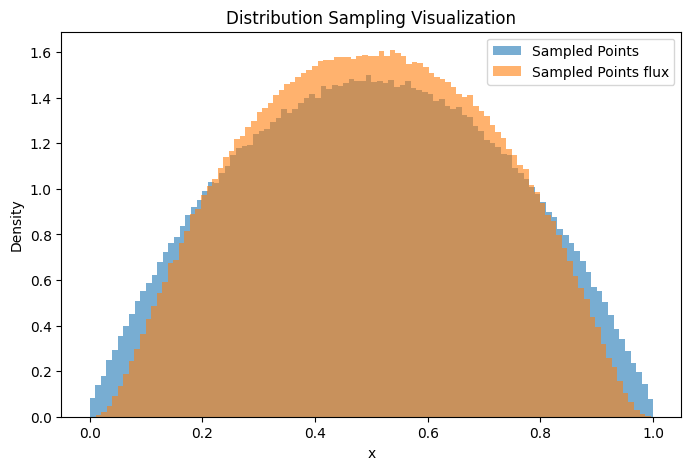

When training a diffusion/flow model, we sample random timesteps, but not evenly. Why? Because empirically, training on certain timesteps more often makes the model converge faster.

FLUX uses a "lognorm" (logit-normal) distribution, which prioritizes training around the middle timesteps. But this approach has a flaw: the tails, where the high-noise and low-noise regions are, get sampled very sparsely.

Even if you train for a looong time (say, 1000 steps), the chance of hitting those tail regions is almost zero. The problem? When the model finally does see them, the loss spikes hard and throws training out of whack, even with a huge batch size.

The fix is simple: sample and train those tail timesteps a bit more often by using a -x^2-shaped distribution instead. As the image shows, this makes the distribution thicker near 0 and 1, ensuring better coverage.
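To make the tail argument concrete, this sketch compares how much probability mass a logit-normal sampler and a -x^2-shaped (downward parabola) density put within 0.05 of either end of [0, 1]. The parabola p(t) ∝ 1 - a(t - 0.5)^2 with a = 3.5, and the logit-normal parameters (mean 0, std 1), are our own illustrative assumptions, not Chroma's exact curve. The sampler draws timesteps by inverse-CDF lookup on a grid.

```python
import math
import numpy as np

def logit_normal_tail_mass(eps=0.05, mean=0.0, std=1.0):
    """P(t < eps) + P(t > 1 - eps) for t = sigmoid(z), z ~ N(mean, std^2)."""
    def phi(x):  # standard normal CDF
        return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))
    def logit(p):
        return math.log(p / (1.0 - p))
    return phi((logit(eps) - mean) / std) + 1.0 - phi((logit(1.0 - eps) - mean) / std)

def parabolic_tail_mass(eps=0.05, a=3.5):
    """Same quantity for the density p(t) ∝ 1 - a (t - 0.5)^2 on [0, 1] (needs a < 4)."""
    def F(u):  # antiderivative of 1 - a u^2, with u = t - 0.5
        return u - a * u ** 3 / 3.0
    norm = F(0.5) - F(-0.5)         # total mass, equals 1 - a / 12
    left = F(-0.5 + eps) - F(-0.5)  # mass in [0, eps]
    return 2.0 * left / norm        # density is symmetric about 0.5

def sample_parabolic(n, a=3.5, grid=10_000, rng=None):
    """Draw n timesteps by inverse-CDF lookup on a discretized parabolic density."""
    rng = np.random.default_rng() if rng is None else rng
    t = np.linspace(0.0, 1.0, grid)
    cdf = np.cumsum(1.0 - a * (t - 0.5) ** 2)
    cdf /= cdf[-1]
    idx = np.searchsorted(cdf, rng.random(n))
    return t[np.minimum(idx, grid - 1)]

print(f"logit-normal tails: {logit_normal_tail_mass():.4f}")  # ≈ 0.0032
print(f"parabolic tails:    {parabolic_tail_mass():.4f}")     # ≈ 0.0296
```

Both densities still peak in the middle, but under these assumptions the parabola puts roughly nine times more mass near 0 and 1, so the tail timesteps show up regularly instead of as rare surprises.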

Minibatch Optimal Transport

TL;DR: Transport problem math magic :P

This one's a bit math-heavy, but here's the gist: FLUX isn't actually "denoising" an image. What we're really doing is training a vector field that maps one distribution (noise) to another (images). Once the vector field is learned, we "flow" along it to transform noise into an image. To keep it simple, just check out these two visuals:

[graph placeholder]

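By choosing better noise-image pairings within each minibatch (the "math magic"), optimal transport reduces "path ambiguity": the paths the model has to learn cross each other less, which accelerates training.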
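A minimal sketch of minibatch optimal transport pairing: before building flow-matching targets, permute the noise samples in a batch so the total squared distance to their paired images is as small as possible. This uses exact brute force over permutations, which only works for tiny batches; practical implementations use an assignment solver (e.g. the Hungarian algorithm) or Sinkhorn, and we do not know which one Chroma uses. The convention t = 0 for noise and t = 1 for the image is an assumption made for the sketch.

```python
import itertools
import numpy as np

def ot_pair(noise, images):
    """Permute `noise` so the summed squared L2 distance to `images` is minimal.

    Exact brute force over all permutations: only usable for tiny batches.
    """
    b = len(noise)
    cols = np.arange(b)
    # cost[i, j] = squared distance between noise sample i and image j
    cost = ((noise[:, None, :] - images[None, :, :]) ** 2).sum(axis=-1)
    best = min(itertools.permutations(range(b)),
               key=lambda perm: cost[list(perm), cols].sum())
    return noise[list(best)]  # row j is now the noise paired with image j

def flow_matching_batch(noise, images, t):
    """Straight-line interpolation and velocity target (t=0: noise, t=1: image)."""
    t = t[:, None]
    x_t = (1.0 - t) * noise + t * images
    v_target = images - noise
    return x_t, v_target

rng = np.random.default_rng(0)
images = rng.normal(size=(6, 16))  # stand-ins for flattened latents
noise = rng.normal(size=(6, 16))
paired = ot_pair(noise, images)
before = ((noise - images) ** 2).sum()
after = ((paired - images) ** 2).sum()
x_t, v = flow_matching_batch(paired, images, rng.random(6))
print(f"total squared distance: {before:.1f} random vs {after:.1f} OT-paired")
```

Shorter, less tangled noise-to-image pairings mean that nearby points along different paths ask for less conflicting velocities, which is the intuition behind reduced path ambiguity.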

Citation

@misc{rock2025chroma,
  author = {Lodestone Rock},
  title = {{Chroma: Open-Source, Uncensored, and Built for the Community}},
  year = {2025},
  note = {Hugging Face repository},
  howpublished = {\url{https://huggingface.co./lodestones/Chroma}},
}