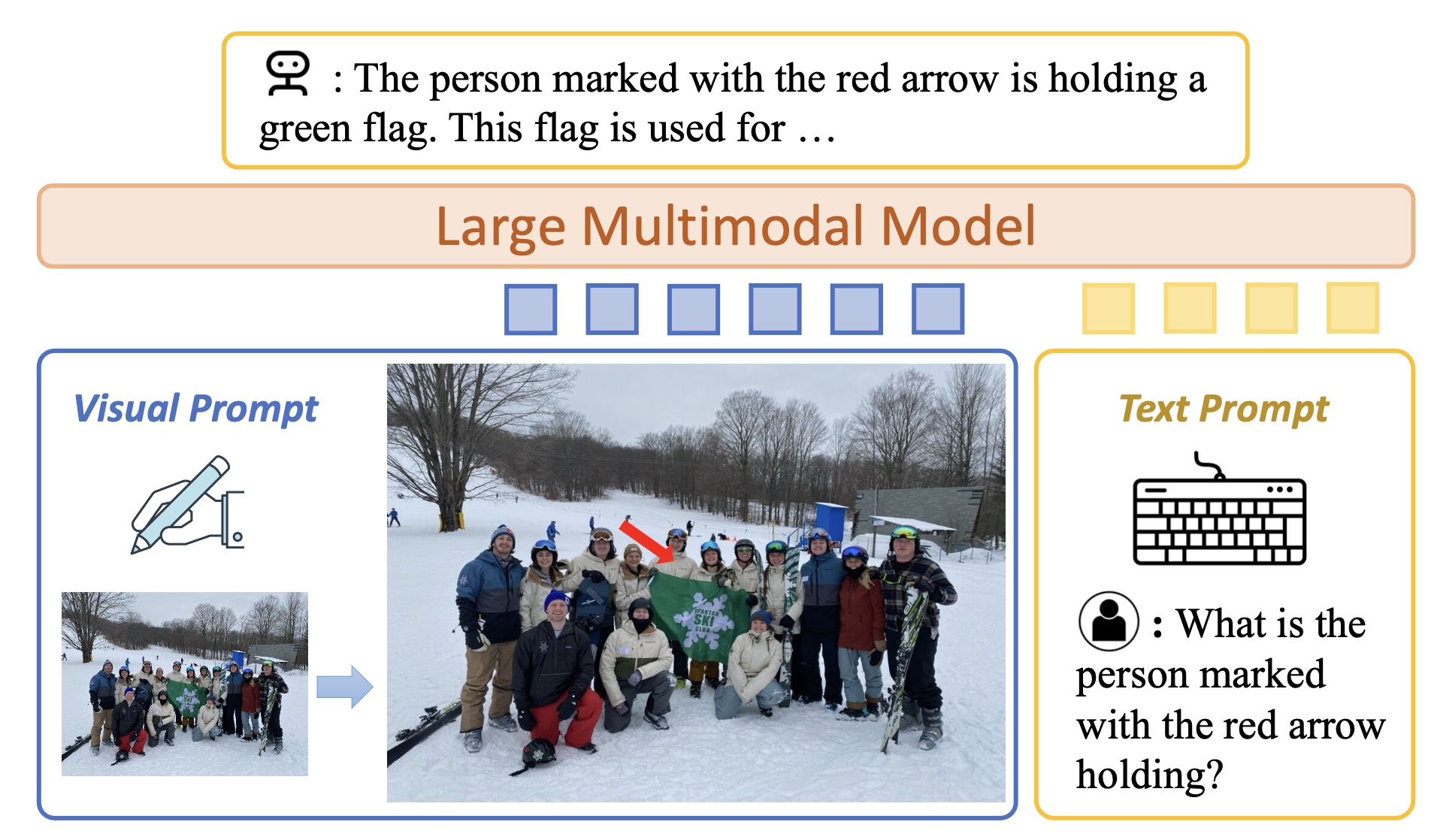

ViP-Bench: Making Large Multimodal Models Understand Arbitrary Visual Prompts

ViP-Bench a region level multimodal model evaulation benchmark curated by University of Wisconsin-Madison. We provides two kinds of visual prompts: (1) bounding boxes, and (2) human drawn diverse visual prompts.

Evaluation Code See https://github.com/mu-cai/ViP-LLaVA/blob/main/docs/Evaluation.md

LeaderBoard See https://paperswithcode.com/sota/visual-question-answering-on-vip-bench

Evaluation Server Please refer to https://huggingface.co./spaces/mucai/ViP-Bench_Evaluator to use our evaluation server.

Source annotation

In source_image, we provide the source plain images along with the bounding box/mask annotations. Researchers can use such grounding information to match the special tokens such as <obj> in "question" entry of vip-bench-meta-data.json. For example, <obj> can be replaced by textual coordinates to evaluate the region-level multimodal models.

- Downloads last month

- 172