id | status | inserted_at | updated_at | _server_id | title | authors | filename | content | content_class.responses | content_class.responses.users | content_class.responses.status | content_class.suggestion | content_class.suggestion.agent | content_class.suggestion.score |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

0a213c9b-9637-437b-b443-ee77a81eea0f | completed | 2025-01-16T03:09:11.596466 | 2025-01-19T17:17:19.097914 | e1b38558-cec3-44a1-9d97-1de32f3bde1c | Generating Human-level Text with Contrastive Search in Transformers 🤗 | GMFTBY | introducing-csearch.md | ****

<a target="_blank" href="https://colab.research.google.com/github/huggingface/blog/blob/main/notebooks/115_introducing_contrastive_search.ipynb">

<img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/>

</a>

### 1. Introduction:

Natural language generation (i.e. text generation) is one of the core tasks in natural language processing (NLP). In this blog post, we introduce the current state-of-the-art decoding method, ___Contrastive Search___, for neural text generation. Contrastive search was originally proposed in _"A Contrastive Framework for Neural Text Generation"_ <a href='#references'>[1]</a> ([[Paper]](https://arxiv.org/abs/2202.06417)[[Official Implementation]](https://github.com/yxuansu/SimCTG)) at NeurIPS 2022. Moreover, in the follow-up work, _"Contrastive Search Is What You Need For Neural Text Generation"_ <a href='#references'>[2]</a> ([[Paper]](https://arxiv.org/abs/2210.14140) [[Official Implementation]](https://github.com/yxuansu/Contrastive_Search_Is_What_You_Need)), the authors further demonstrate that contrastive search can generate human-level text using **off-the-shelf** language models across **16** languages.

**[Remark]** Users who are not familiar with text generation can find more details in [this blog post](https://huggingface.co./blog/how-to-generate).

****

<span id='demo'/>

### 2. Hugging Face 🤗 Demo of Contrastive Search:

Contrastive Search is now available on 🤗 `transformers`, both on PyTorch and TensorFlow. You can interact with the examples shown in this blog post using your framework of choice in [this Colab notebook](https://colab.research.google.com/github/huggingface/blog/blob/main/notebooks/115_introducing_contrastive_search.ipynb), which is linked at the top. We have also built this awesome [demo](https://huggingface.co./spaces/joaogante/contrastive_search_generation) which directly compares contrastive search with other popular decoding methods (e.g. beam search, top-k sampling <a href='#references'>[3]</a>, and nucleus sampling <a href='#references'>[4]</a>).

****

<span id='installation'/>

### 3. Environment Installation:

Before running the experiments in the following sections, please install the up-to-date version of `transformers` as follows:

```bash

pip install torch

pip install "transformers==4.24.0"

```

****

<span id='problems_of_decoding_methods'/>

### 4. Problems of Existing Decoding Methods:

Decoding methods can be divided into two categories: (i) deterministic methods and (ii) stochastic methods. Let's discuss both!

<span id='deterministic_methods'/>

#### 4.1. Deterministic Methods:

Deterministic methods, e.g. greedy search and beam search, generate text by selecting the text continuation with the highest likelihood measured by the language model. However, as widely discussed in previous studies <a href='#references'>[3]</a><a href='#references'>[4]</a>, deterministic methods often lead to the problem of _model degeneration_, i.e., the generated text is unnatural and contains undesirable repetitions.
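To make the degeneration problem concrete, here is a minimal sketch of greedy decoding over a hand-written toy bigram table. The table `TOY_LM` and the helper `greedy_decode` are hypothetical names invented for this illustration and are not part of `transformers`; a real language model conditions on the whole prefix rather than only on the last token.

```python
# Hypothetical toy "language model": next-token probabilities that depend
# only on the previous token (a bigram table made up for illustration).
TOY_LM = {
    "the": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.7, "ran": 0.3},
    "sat": {"on": 0.9, "down": 0.1},
    "on": {"the": 0.8, "a": 0.2},
}


def greedy_decode(lm, start, max_new_tokens):
    """Always append the single most likely next token."""
    tokens = [start]
    for _ in range(max_new_tokens):
        next_probs = lm.get(tokens[-1])
        if next_probs is None:  # no known continuation: stop early
            break
        tokens.append(max(next_probs, key=next_probs.get))
    return tokens


print(" ".join(greedy_decode(TOY_LM, "the", 8)))
```

Because the highest-probability path through this table is a cycle, greedy decoding keeps repeating "the cat sat on", a small-scale analogue of the repetition observed with real models.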

Below, let's see an example of text generated by greedy search with the GPT-2 model.

```python

from transformers import AutoTokenizer, GPT2LMHeadModel

tokenizer = AutoTokenizer.from_pretrained('gpt2-large')

input_ids = tokenizer('DeepMind Company is', return_tensors='pt').input_ids

model = GPT2LMHeadModel.from_pretrained('gpt2-large')

output = model.generate(input_ids, max_length=128)

print("Output:\n" + 100 * '-')

print(tokenizer.decode(output[0], skip_special_tokens=True))

print("" + 100 * '-')

```

<details open>

<summary><b>Model Output:</b></summary>

```

Output: | [

[

"llm",

"transformers",

"research",

"text_generation"

]

] | [

"2629e041-8c70-4026-8651-8bb91fd9749a"

] | [

"submitted"

] | [

"llm",

"transformers",

"text_generation",

"research"

] | null | null |

197170c8-6576-4b49-9006-bb14c11f8aaa | completed | 2025-01-16T03:09:11.596478 | 2025-01-19T18:53:16.009848 | cf29eb3f-9b2f-4fe7-9053-02f895c59df9 | Summer at Hugging Face | huggingface | summer-at-huggingface.md | Summer is now officially over and these last few months have been quite busy at Hugging Face. From new features in the Hub to research and Open Source development, our team has been working hard to empower the community through open and collaborative technology.

In this blog post you'll catch up on everything that happened at Hugging Face in June, July and August!

This post covers a wide range of areas our team has been working on, so don't hesitate to skip to the parts that interest you the most 🤗

1. [New Features](#new-features)

2. [Community](#community)

3. [Open Source](#open-source)

4. [Solutions](#solutions)

5. [Research](#research)

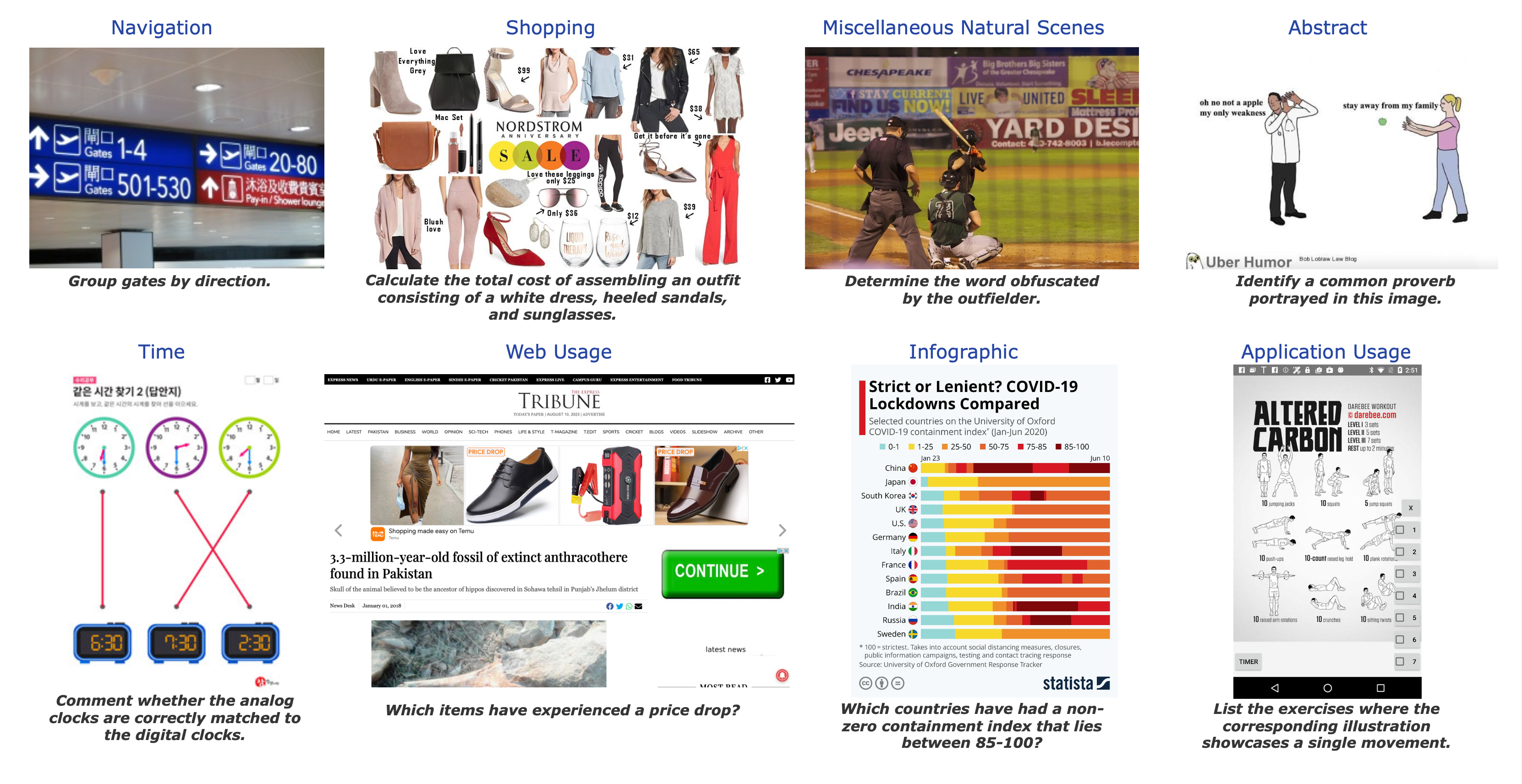

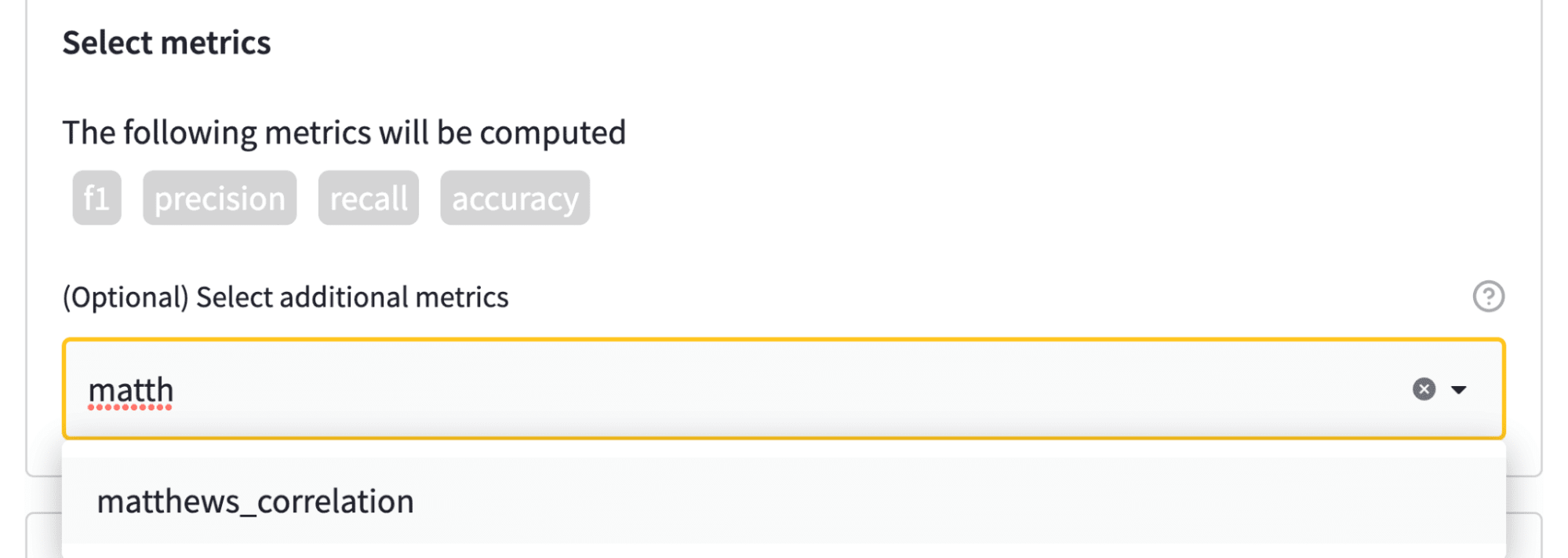

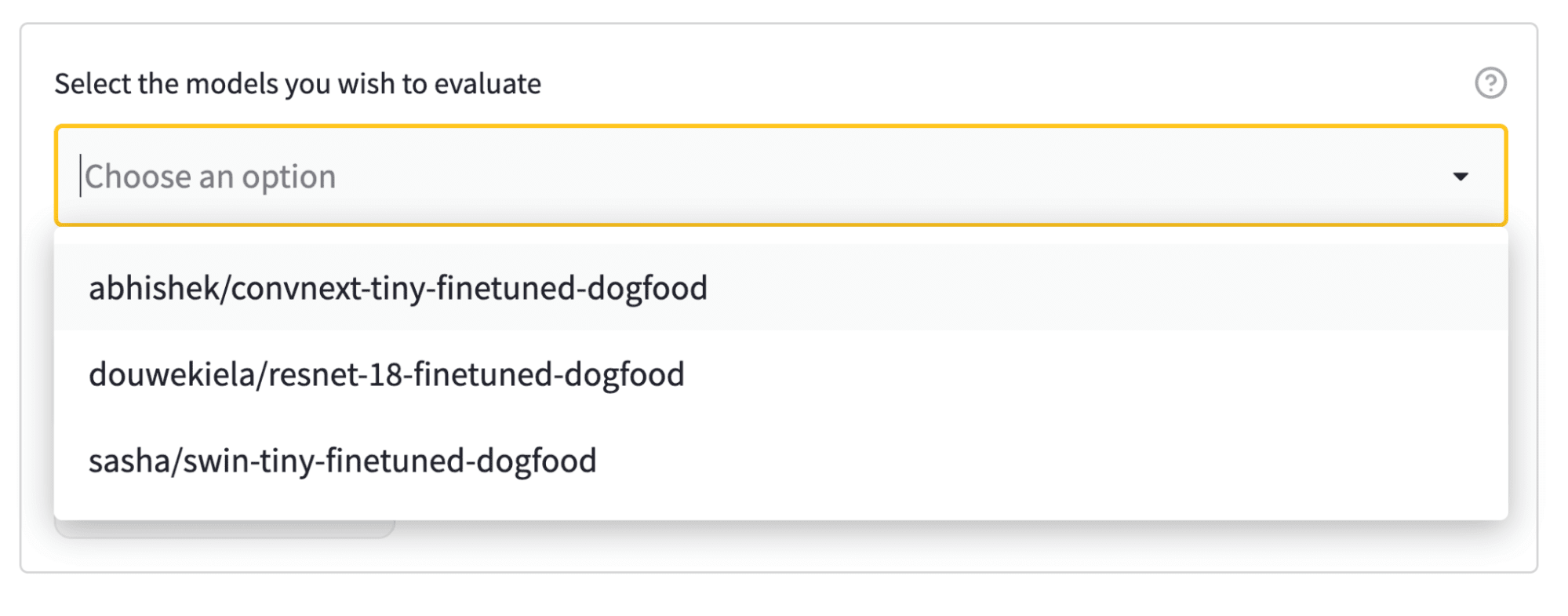

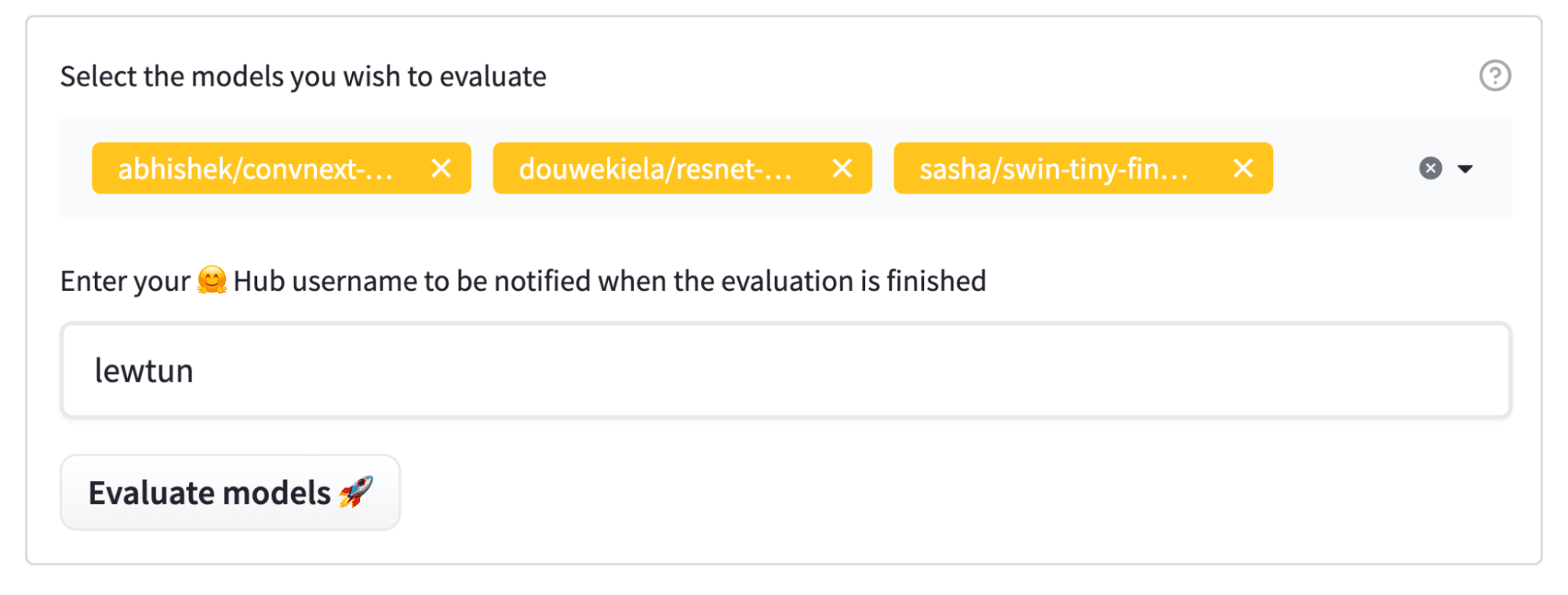

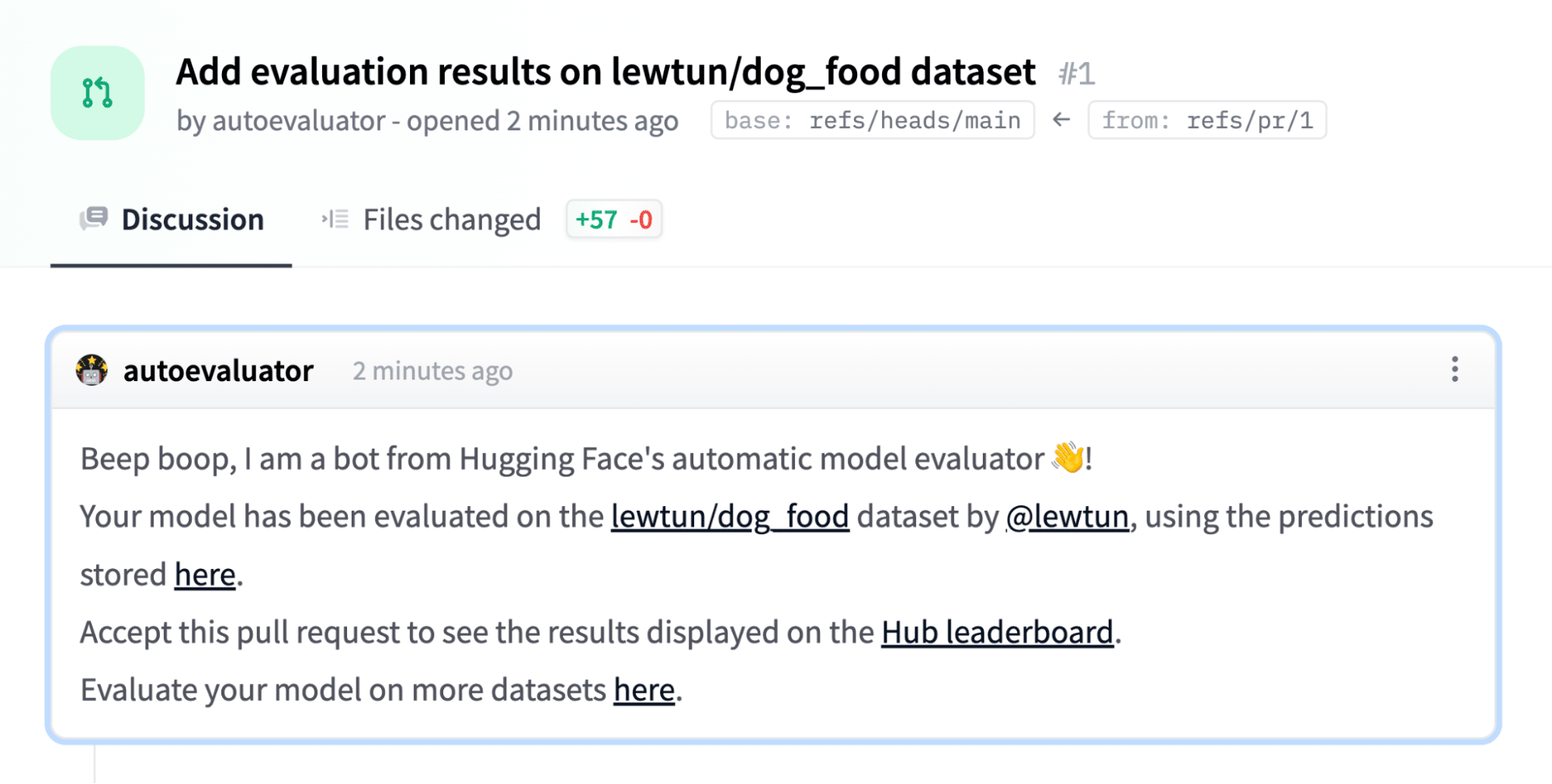

## New Features

In the last few months, the Hub went from 10,000 public model repositories to over 16,000 models! Kudos to our community for sharing so many amazing models with the world. And beyond the numbers, we have a ton of cool new features to share with you!

### Spaces Beta ([hf.co/spaces](/spaces))

Spaces is a simple and free solution to host Machine Learning demo applications directly on your user profile or your organization [hf.co](http://hf.co/) profile. We support two awesome SDKs that let you build cool apps easily in Python: [Gradio](https://gradio.app/) and [Streamlit](https://streamlit.io/). In a matter of minutes you can deploy an app and share it with the community! 🚀

Spaces lets you [set up secrets](/docs/hub/spaces-overview#managing-secrets), permits [custom requirements](/docs/hub/spaces-dependencies), and can even be managed [directly from GitHub repos](/docs/hub/spaces-github-actions). You can sign up for the beta at [hf.co/spaces](/spaces). Here are some of our favorites!

- Create recipes with the help of [Chef Transformer](/spaces/flax-community/chef-transformer)

- Transcribe speech to text with [HuBERT](https://huggingface.co./spaces/osanseviero/HUBERT)

- Do segmentation in a video with the [DINO model](/spaces/nateraw/dino-clips)

- Use [Paint Transformer](/spaces/akhaliq/PaintTransformer) to make paintings from a given picture

- Or you can just explore any of the over [100 existing Spaces](/spaces)!

### Share Some Love

You can now like any model, dataset, or Space on [http://huggingface.co](http://huggingface.co/), meaning you can share some love with the community ❤️. You can also keep an eye on who's liking what by clicking on the likes box 👀. Go ahead and like your own repos, we're not judging 😉.

### TensorBoard Integration

In late June, we launched a TensorBoard integration for all our models. If there are TensorBoard traces in the repo, an automatic, free TensorBoard instance is launched for you. This works with both public and private repositories and for any library that has TensorBoard traces!

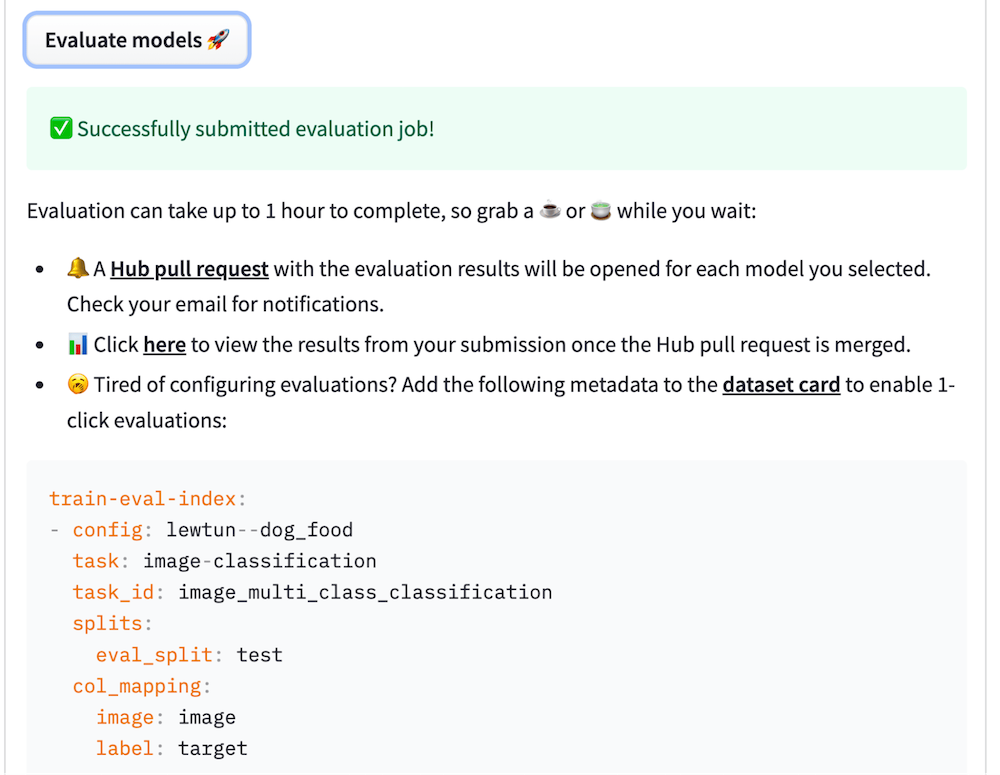

### Metrics

In July, we added the ability to list evaluation metrics in model repos by adding them to their model card📈. If you add an evaluation metric under the `model-index` section of your model card, it will be displayed proudly in your model repo.

If that wasn't enough, these metrics will be automatically linked to the corresponding [Papers With Code](https://paperswithcode.com/) leaderboard. That means as soon as you share your model on the Hub, you can compare your results side-by-side with others in the community. 💪

Check out [this repo](https://huggingface.co./nateraw/vit-base-beans-demo) as an example, paying close attention to the `model-index` section of its [model card](https://huggingface.co./nateraw/vit-base-beans-demo/blob/main/README.md#L12-L25) to see how you can do this yourself and find the metrics in Papers with Code [automatically](https://paperswithcode.com/sota/image-classification-on-beans).
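As a rough sketch of what such metadata looks like, the snippet below writes a `model-index` entry as the Python equivalent of the YAML block at the top of a model card. The field layout reflects our understanding of the model card metadata format; the model name, the metric value, and the helper `list_metrics` are placeholders made up for illustration, not real results or Hub APIs.

```python
# Hypothetical `model-index` metadata, written as the Python equivalent of the
# YAML block at the top of a model card. The model name and the metric value
# are placeholders, not real results.
model_index = [
    {
        "name": "my-image-classifier",
        "results": [
            {
                "task": {"type": "image-classification", "name": "Image Classification"},
                "dataset": {"type": "beans", "name": "beans"},
                "metrics": [
                    {"type": "accuracy", "name": "Accuracy", "value": 0.0},
                ],
            }
        ],
    }
]


def list_metrics(index):
    """Flatten the nested entries into (model, dataset, metric, value) rows."""
    rows = []
    for model in index:
        for result in model["results"]:
            for metric in result["metrics"]:
                rows.append(
                    (model["name"], result["dataset"]["name"], metric["name"], metric["value"])
                )
    return rows


for row in list_metrics(model_index):
    print(row)
```

Each result ties one task and one dataset to a list of metrics, which is what allows a reported number to be matched to a leaderboard.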

### New Widgets

The Hub has 18 widgets that allow users to try out models directly in the browser.

With our latest integrations to Sentence Transformers, we also introduced two new widgets: feature extraction and sentence similarity.

The latest **audio classification** widget enables many cool use cases: language identification, [street sound detection](https://huggingface.co./speechbrain/urbansound8k_ecapa) 🚨, [command recognition](https://huggingface.co./speechbrain/google_speech_command_xvector), [speaker identification](https://huggingface.co./speechbrain/spkrec-xvect-voxceleb), and more! You can try this out with `transformers` and `speechbrain` models today! 🔊 (Beware, when you try some of the models, you might need to bark out loud)

You can try our early demo of [structured data classification](https://huggingface.co./julien-c/wine-quality) with Scikit-learn. And finally, we also introduced new widgets for image-related models: **text to image**, **image classification**, and **object detection**. Try image classification with Google's ViT model [here](https://huggingface.co./google/vit-base-patch16-224) and object detection with Facebook AI's DETR model [here](https://huggingface.co./facebook/detr-resnet-50)!

### More Features

That's not everything that has happened in the Hub. We've introduced new and improved [documentation](https://huggingface.co./docs/hub/main) of the Hub. We also introduced two widely requested features: users can now transfer/rename repositories and directly upload new files to the Hub.

## Community

### Hugging Face Course

In June, we launched the first part of our [free online course](https://huggingface.co./course/chapter1)! The course teaches you everything about the 🤗 Ecosystem: Transformers, Tokenizers, Datasets, Accelerate, and the Hub. You can also find links to the course lessons in the official documentation of our libraries. The live sessions for all chapters can be found on our [YouTube channel](https://www.youtube.com/playlist?list=PLo2EIpI_JMQuQ8StH9RwKXwJVqLTDxwwy). Stay tuned for the next part of the course which we'll be launching later this year!

### JAX/FLAX Sprint

In July we hosted our biggest [community event](https://discuss.huggingface.co/t/open-to-the-community-community-week-using-jax-flax-for-nlp-cv/7104) ever with almost 800 participants! In this event co-organized with the JAX/Flax and Google Cloud teams, compute-intensive NLP, Computer Vision, and Speech projects were made accessible to a wider audience of engineers and researchers by providing free TPUv3s. The participants created over 170 models, 22 datasets, and 38 Spaces demos 🤯. You can explore all the amazing demos and projects [here](https://huggingface.co./flax-community).

There were talks around JAX/Flax, Transformers, large-scale language modeling, and more! You can find all recordings [here](https://github.com/huggingface/transformers/tree/master/examples/research_projects/jax-projects#talks).

We're really excited to share the work of the 3 winning teams!

1. [Dall-e mini](https://huggingface.co./spaces/flax-community/dalle-mini). DALL·E mini is a model that generates images from any prompt you give! DALL·E mini is 27 times smaller than the original DALL·E and still has impressive results.

2. [DietNerf](https://huggingface.co./spaces/flax-community/DietNerf-Demo). DietNerf is a 3D neural view synthesis model designed for few-shot learning of 3D scene reconstruction using 2D views. This is the first Open Source implementation of the "[Putting Nerf on a Diet](https://arxiv.org/abs/2104.00677)" paper.

3. [CLIP RSIC](https://huggingface.co./spaces/sujitpal/clip-rsicd-demo). CLIP RSIC is a CLIP model fine-tuned on remote sensing image data to enable zero-shot satellite image classification and captioning. This project demonstrates how effective fine-tuned CLIP models can be for specialized domains.

Apart from these very cool projects, we're excited about how these community events enable training large and multi-modal models for multiple languages. For example, we saw the first ever Open Source big LMs for some low-resource languages like [Swahili](https://huggingface.co./models?language=sw), [Polish](https://huggingface.co./flax-community/papuGaPT2) and [Marathi](https://huggingface.co./spaces/flax-community/roberta-base-mr).

## Bonus

On top of everything we just shared, our team has been doing lots of other things. Here are just some of them:

- 📖 This 3-part [video series](https://www.youtube.com/watch?time_continue=6&v=qmN1fJ7Fdmo&feature=emb_title&ab_channel=NilsR) shows the theory on how to train state-of-the-art sentence embedding models.

- We presented at PyTorch Community Voices and participated in a QA ([video](https://www.youtube.com/watch?v=wE3bk7JaH4E&ab_channel=PyTorch)).

- Hugging Face has collaborated with [NLP in Spanish](https://twitter.com/NLP_en_ES) and [SpainAI](https://twitter.com/Spain_AI_) in a Spanish [course](https://www.youtube.com/playlist?list=PLBILcz47fTtPspj9QDm2E0oHLe1p67tMz) that teaches concepts and state-of-the-art architectures as well as their applications through use cases.

- We presented at [MLOps World Demo Days](https://www.youtube.com/watch?v=lWahHp5vpVg).

## Open Source

### New in Transformers

Summer has been an exciting time for 🤗 Transformers! The library reached 50,000 stars, 30 million total downloads, and almost 1000 contributors! 🤩

So what's new? JAX/Flax is now the 3rd supported framework with over [5000](https://huggingface.co./models?library=jax&sort=downloads) models in the Hub! You can find actively maintained [examples](https://github.com/huggingface/transformers/tree/master/examples/flax) for different tasks such as text classification. We're also working hard on improving our TensorFlow support: all our [examples](https://github.com/huggingface/transformers/tree/master/examples/tensorflow) have been reworked to be more robust, TensorFlow idiomatic, and clearer. This includes examples such as summarization, translation, and named entity recognition.

You can now easily publish your model to the Hub, including automatically authored model cards, evaluation metrics, and TensorBoard instances. There is also increased support for exporting models to ONNX with the new [`transformers.onnx` module](https://huggingface.co./transformers/serialization.html?highlight=onnx).

```bash

python -m transformers.onnx --model=bert-base-cased onnx/bert-base-cased/

```

The last 4 releases introduced many new cool models!

- [DETR](https://huggingface.co./transformers/model_doc/detr.html) can do fast end-to-end object detection and image segmentation. Check out some of our community [tutorials](https://github.com/NielsRogge/Transformers-Tutorials/tree/master/DETR)!

- [ByT5](https://huggingface.co./transformers/model_doc/byt5.html) is the first tokenizer-free model in the Hub! You can find all available checkpoints [here](https://huggingface.co./models?search=byt5).

- [CANINE](https://huggingface.co./transformers/model_doc/canine.html) is another tokenizer-free encoder-only model by Google AI, operating directly at the character level. You can find all (multilingual) checkpoints [here](https://huggingface.co./models?search=canine).

- [HuBERT](https://huggingface.co./transformers/model_doc/hubert.html?highlight=hubert) shows exciting results for downstream audio tasks such as [command classification](https://huggingface.co./superb/hubert-base-superb-ks) and [emotion recognition](https://huggingface.co./superb/hubert-base-superb-er). Check the models [here](https://huggingface.co./models?filter=hubert).

- [LayoutLMv2](https://huggingface.co./transformers/model_doc/layoutlmv2.html) and [LayoutXLM](https://huggingface.co./transformers/model_doc/layoutxlm.html?highlight=layoutxlm) are two incredible models capable of parsing document images (like PDFs) by incorporating text, layout, and visual information. We built a [Space demo](https://huggingface.co./spaces/nielsr/LayoutLMv2-FUNSD) so you can directly try it out! Demo notebooks can be found [here](https://github.com/NielsRogge/Transformers-Tutorials/tree/master/LayoutLMv2).

- [BEiT](https://huggingface.co./transformers/model_doc/beit.html) by Microsoft Research makes self-supervised Vision Transformers outperform supervised ones, using a clever pre-training objective inspired by BERT.

- [RemBERT](https://huggingface.co./transformers/model_doc/rembert.html?), a large multilingual Transformer that outperforms XLM-R (and mT5 with a similar number of parameters) in zero-shot transfer.

- [Splinter](https://huggingface.co./transformers/model_doc/splinter.html) which can be used for few-shot question answering. Given only 128 examples, Splinter is able to reach ~73% F1 on SQuAD, outperforming MLM-based models by 24 points!

The Hub is now integrated into `transformers`, with the ability to push configuration, model, and tokenizer files to the Hub without leaving the Python runtime! The `Trainer` can now push directly to the Hub every time a checkpoint is saved.
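A minimal sketch of how this might be configured, assuming the `push_to_hub` option of `TrainingArguments`. The helper names and the `my-finetuned-model` directory are hypothetical, and the `transformers` import is deferred so the configuration can be inspected without the library installed.

```python
def hub_training_kwargs(output_dir):
    """Keyword arguments asking the Trainer to upload on every checkpoint save."""
    return {
        "output_dir": output_dir,  # hypothetical local folder, also used as repo name
        "push_to_hub": True,       # upload to the Hub whenever a checkpoint is saved
    }


def build_training_args(output_dir):
    # Imported lazily so the helper above works without `transformers` installed.
    from transformers import TrainingArguments

    return TrainingArguments(**hub_training_kwargs(output_dir))


print(hub_training_kwargs("my-finetuned-model"))
```

The resulting object would be passed as `args` to `Trainer`, assuming you have already authenticated (for example with `huggingface-cli login`); calling `trainer.push_to_hub()` after training uploads the final model.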

### New in Datasets

You can find 1400 public datasets in [https://huggingface.co./datasets](https://huggingface.co./datasets) thanks to the awesome contributions from all our community. 💯

The support for `datasets` keeps growing: it can be used in JAX, process parquet files, use remote files, and has wider support for other domains such as Automatic Speech Recognition and Image Classification.

Users can also directly host and share their datasets with the community simply by uploading their data files in a repository on the Dataset Hub.

What are the new dataset highlights? Microsoft CodeXGlue [datasets](https://huggingface.co./datasets?search=code_x_glue) for multiple coding tasks (code completion, generation, search, etc), huge datasets such as [C4](https://huggingface.co./datasets/c4) and [MC4](https://huggingface.co./datasets/mc4), and many more such as [RussianSuperGLUE](https://huggingface.co./datasets/russian_super_glue) and [DISFL-QA](https://huggingface.co./datasets/disfl_qa).

### Welcoming new Libraries to the Hub

Apart from having deep integration with `transformers`-based models, the Hub is also building great partnerships with Open Source ML libraries to provide free model hosting and versioning. We've been achieving this with our [huggingface_hub](https://github.com/huggingface/huggingface_hub) Open-Source library as well as new Hub [documentation](https://huggingface.co./docs/hub/main).

All spaCy canonical pipelines can now be found in the official spaCy [organization](https://huggingface.co./spacy), and any user can share their pipelines with a single command `python -m spacy huggingface-hub`. To read more about it, head to [https://huggingface.co./blog/spacy](https://huggingface.co./blog/spacy). You can try all canonical spaCy models directly in the Hub in the demo [Space](https://huggingface.co./spaces/spacy/pipeline-visualizer)!

Another exciting integration is Sentence Transformers. You can read more about it in the [blog announcement](https://huggingface.co./blog/sentence-transformers-in-the-hub): you can find over 200 [models](https://huggingface.co./models?library=sentence-transformers) in the Hub, easily share your models with the rest of the community and reuse models from the community.

But that's not all! You can now find over 100 Adapter Transformers in the Hub and try out Speechbrain models with widgets directly in the browser for different tasks such as audio classification. If you're interested in our collaborations to integrate new ML libraries to the Hub, you can read more about them [here](https://huggingface.co./docs/hub/libraries).

## Solutions

### **Coming soon: Infinity**

Transformers latency down to 1ms? 🤯🤯🤯

We have been working on a really sleek solution to achieve unmatched efficiency for state-of-the-art Transformer models, for companies to deploy in their own infrastructure.

- Infinity comes as a single container and can be deployed in any production environment.

- It can achieve 1ms latency for BERT-like models on GPU and 4-10ms on CPU 🤯🤯🤯

- Infinity meets the highest security requirements and can be integrated into your system without the need for internet access. You have control over all incoming and outgoing traffic.

⚠️ Join us for a [live announcement and demo on Sep 28](https://app.livestorm.co/hugging-face/hugging-face-infinity-launch?type=detailed), where we will be showcasing Infinity for the first time in public!

### **NEW: Hardware Acceleration**

Hugging Face is [partnering with leading AI hardware accelerators](http://hf.co/hardware) such as Intel, Qualcomm and GraphCore to make state-of-the-art production performance accessible and extend training capabilities on SOTA hardware. As the first step in this journey, we [introduced a new Open Source library](https://huggingface.co./blog/hardware-partners-program): 🤗 Optimum - the ML optimization toolkit for production performance 🏎. Learn more in this [blog post](https://huggingface.co./blog/graphcore).

### **NEW: Inference on SageMaker**

We launched a [new integration with AWS](https://huggingface.co./blog/deploy-hugging-face-models-easily-with-amazon-sagemaker) to make it easier than ever to deploy 🤗 Transformers in SageMaker 🔥. Pick up the code snippet right from the 🤗 Hub model page! Learn more about how to leverage transformers in SageMaker in our [docs](https://huggingface.co./docs/sagemaker/inference) or check out these [video tutorials](https://youtube.com/playlist?list=PLo2EIpI_JMQtPhGR5Eo2Ab0_Vb89XfhDJ).

For questions reach out to us on the forum: [https://discuss.huggingface.co/c/sagemaker/17](https://discuss.huggingface.co/c/sagemaker/17)

### **NEW: AutoNLP In Your Browser**

We released a new [AutoNLP](https://huggingface.co./autonlp) experience: a web interface to train models straight from your browser! Now all it takes is a few clicks to train, evaluate and deploy **🤗** Transformers models on your own data. [Try it out](https://ui.autonlp.huggingface.co/) - NO CODE needed!

### Inference API

**Webinar**:

We hosted a [live webinar](https://youtu.be/p055U0dnEos) to show how to add Machine Learning capabilities with just a few lines of code. We also built a VSCode extension that leverages the Hugging Face Inference API to generate comments describing Python code.

<div class="aspect-w-16 aspect-h-9">

<iframe

src="https://www.youtube.com/embed/p055U0dnEos"

frameborder="0"

allow="accelerometer; autoplay; encrypted-media; gyroscope; picture-in-picture"

allowfullscreen></iframe>

</div>
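To give a flavour of what "a few lines of code" can look like, here is a hedged sketch that prepares a request to the hosted Inference API using only the Python standard library. The endpoint pattern and the `{"inputs": ...}` payload follow the API as documented around the time of this post and may have changed since; the helper name, the example model id, and the `hf_xxx` token are placeholders.

```python
import json
import urllib.request

# Endpoint pattern assumed from the Inference API docs at the time of writing.
API_URL = "https://api-inference.huggingface.co/models/{model_id}"


def build_inference_request(model_id, text, api_token):
    """Prepare (but do not send) a POST request for a hosted model."""
    payload = json.dumps({"inputs": text}).encode("utf-8")
    return urllib.request.Request(
        API_URL.format(model_id=model_id),
        data=payload,
        headers={
            "Authorization": f"Bearer {api_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_inference_request(
    "distilbert-base-uncased-finetuned-sst-2-english",  # example model id
    "I love this library!",
    "hf_xxx",  # placeholder token, replace with your own
)
print(request.get_method(), request.full_url)

# To actually call the API (requires network access and a valid token):
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```

Building the request separately from sending it keeps the example runnable offline and makes the URL, headers, and payload easy to inspect.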

**Hugging Face** + **Zapier Demo**

20,000+ Machine Learning models connected to 3,000+ apps? 🤯 By leveraging the [Inference API](https://huggingface.co./landing/inference-api/startups), you can now easily connect models right into apps like Gmail, Slack, Twitter, and more. In this demo video, we created a zap that uses this [code snippet](https://gist.github.com/feconroses/3476a91dc524fdb930a726b3894a1d08) to analyze your Twitter mentions and alert you on Slack about the negative ones.

<div class="aspect-w-16 aspect-h-9">

<iframe

src="https://www.youtube.com/embed/sjfpOJ4KA78"

frameborder="0"

allow="accelerometer; autoplay; encrypted-media; gyroscope; picture-in-picture"

allowfullscreen></iframe>

</div>

**Hugging Face + Google Sheets Demo**

With the [Inference API](https://huggingface.co./landing/inference-api/startups), you can easily use zero-shot classification right in your spreadsheets in Google Sheets. Just [add this script](https://gist.github.com/feconroses/302474ddd3f3c466dc069ecf16bb09d7) in Tools -> Script Editor:

<div class="aspect-w-16 aspect-h-9">

<iframe

src="https://www.youtube.com/embed/-A-X3aUYkDs"

frameborder="0"

allow="accelerometer; autoplay; encrypted-media; gyroscope; picture-in-picture"

allowfullscreen></iframe>

</div>

**Few-shot learning in practice**

We wrote a [blog post](https://huggingface.co./blog/few-shot-learning-gpt-neo-and-inference-api) that explains what Few-Shot Learning is and explores how GPT-Neo and the 🤗 Accelerated Inference API can be used to generate your own predictions.

### **Expert Acceleration Program**

Check out the brand [new home for the Expert Acceleration Program](https://huggingface.co./landing/premium-support); you can now get direct, premium support from our Machine Learning experts and build better ML solutions, faster.

## Research

At BigScience we held our first live event (since the kick off) in July: BigScience Episode #1. Our second event, BigScience Episode #2, was held on September 20th, 2021 with technical talks and updates by the BigScience working groups and invited talks by Jade Abbott (Masakhane), Percy Liang (Stanford CRFM), Stella Biderman (EleutherAI) and more. We have completed the first large-scale training on Jean Zay, a 13B English-only decoder model (you can find the details [here](https://github.com/bigscience-workshop/bigscience/blob/master/train/tr1-13B-base/chronicles.md)), and we're currently deciding on the architecture of the second model. The organization working group has filed the application for the second half of the compute budget: Jean Zay V100 : 2,500,000 GPU hours. 🚀

In June, we shared the result of our collaboration with the Yandex research team: [DeDLOC](https://arxiv.org/abs/2106.10207), a method to collaboratively train your large neural networks, i.e. without using an HPC cluster, but with various accessible resources such as Google Colaboratory or Kaggle notebooks, personal computers or preemptible VMs. Thanks to this method, we were able to train [sahajBERT](https://huggingface.co./neuropark/sahajBERT), a Bengali language model, with 40 volunteers! Our model competes with the state of the art, and is even [the best for the downstream task of classification](https://huggingface.co./neuropark/sahajBERT-NCC) on the Soham News Article Classification dataset. You can read more about it in this [blog](https://huggingface.co./blog/collaborative-training) post. This is a fascinating line of research because it would make model pre-training much more accessible (financially speaking)!

<div class="aspect-w-16 aspect-h-9">

<iframe

src="https://www.youtube.com/embed/v8ShbLasRF8"

frameborder="0"

allow="accelerometer; autoplay; encrypted-media; gyroscope; picture-in-picture"

allowfullscreen></iframe>

</div>

In June our [paper](https://arxiv.org/abs/2103.08493), How Many Data Points is a Prompt Worth?, got a Best Paper award at NAACL! In it, we reconcile and compare traditional and prompting approaches to adapt pre-trained models, finding that human-written prompts are worth up to thousands of supervised data points on new tasks. You can also read its blog [post](https://huggingface.co./blog/how_many_data_points/).

We're looking forward to EMNLP this year where we have four accepted papers!

- Our [paper](https://arxiv.org/abs/2109.02846) "[Datasets: A Community Library for Natural Language Processing](https://arxiv.org/abs/2109.02846)" documents the Hugging Face Datasets project that has over 300 contributors. This community project gives easy access to hundreds of datasets to researchers. It has facilitated new use cases of cross-dataset NLP, and has advanced features for tasks like indexing and streaming large datasets.

- Our collaboration with researchers from TU Darmstadt led to another paper accepted at the conference (["Avoiding Inference Heuristics in Few-shot Prompt-based Finetuning"](https://arxiv.org/abs/2109.04144)). In this paper, we show that prompt-based fine-tuned language models (which achieve strong performance in few-shot setups) still suffer from learning surface heuristics (sometimes called *dataset biases*), a pitfall that zero-shot models don't exhibit.

- Our submission "[Block Pruning For Faster Transformers](https://arxiv.org/abs/2109.04838v1)" has also been accepted as a long paper. In this paper, we show how to use block sparsity to obtain both fast and small Transformer models. Our experiments yield models which are 2.4x faster and 74% smaller than BERT on SQuAD.

## Last words

😎 🔥 Summer was fun! So many things have happened! We hope you enjoyed reading this blog post, and we're looking forward to sharing the new projects we're working on. See you in the winter! ❄️ | [

[

"research",

"community",

"tools"

]

] | [

"2629e041-8c70-4026-8651-8bb91fd9749a"

] | [

"submitted"

] | [

"community",

"research",

"tools"

] | null | null |

0aa257a0-36cf-4e1a-86cb-37aa082bbe21 | completed | 2025-01-16T03:09:11.596484 | 2025-01-16T15:16:02.250253 | 2a3cd58c-3c34-481a-94fb-43fe7e41b67e | AudioLDM 2, but faster ⚡️ | sanchit-gandhi | audioldm2.md | <a target="_blank" href="https://colab.research.google.com/github/sanchit-gandhi/notebooks/blob/main/AudioLDM-2.ipynb">

<img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/>

</a>

AudioLDM 2 was proposed in [AudioLDM 2: Learning Holistic Audio Generation with Self-supervised Pretraining](https://arxiv.org/abs/2308.05734)

by Haohe Liu et al. AudioLDM 2 takes a text prompt as input and predicts the corresponding audio. It can generate realistic

sound effects, human speech and music.

While the generated audios are of high quality, running inference with the original implementation is very slow: a 10

second audio sample takes upwards of 30 seconds to generate. This is due to a combination of factors, including a deep

multi-stage modelling approach, large checkpoint sizes, and un-optimised code.

In this blog post, we showcase how to use AudioLDM 2 in the Hugging Face 🧨 Diffusers library, exploring a range of code

optimisations such as half-precision, flash attention, and compilation, and model optimisations such as scheduler choice

and negative prompting, to reduce the inference time by over **10 times**, with minimal degradation in quality of the

output audio. The blog post is also accompanied by a more streamlined [Colab notebook](https://colab.research.google.com/github/sanchit-gandhi/notebooks/blob/main/AudioLDM-2.ipynb),

that contains all the code but fewer explanations.

Read to the end to find out how to generate a 10 second audio sample in just 1 second!

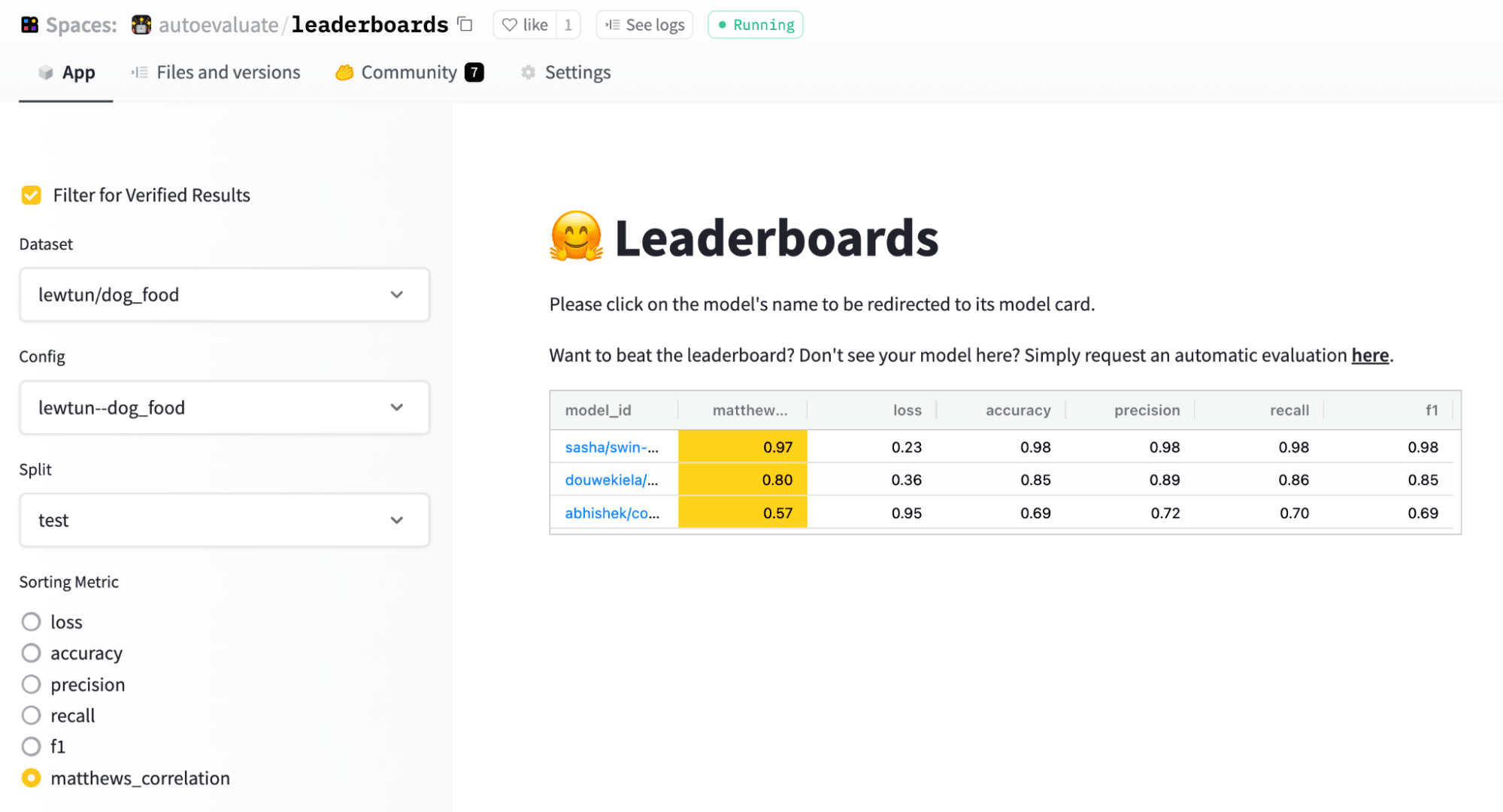

## Model overview

Inspired by [Stable Diffusion](https://huggingface.co./docs/diffusers/api/pipelines/stable_diffusion/overview), AudioLDM 2

is a text-to-audio _latent diffusion model (LDM)_ that learns continuous audio representations from text embeddings.

The overall generation process is summarised as follows:

1. Given a text input \\(\boldsymbol{x}\\), two text encoder models are used to compute the text embeddings: the text-branch of [CLAP](https://huggingface.co./docs/transformers/main/en/model_doc/clap), and the text-encoder of [Flan-T5](https://huggingface.co./docs/transformers/main/en/model_doc/flan-t5)

$$

\boldsymbol{E}_{1} = \text{CLAP}\left(\boldsymbol{x} \right); \quad \boldsymbol{E}_{2} = \text{T5}\left(\boldsymbol{x}\right)

$$

The CLAP text embeddings are trained to be aligned with the embeddings of the corresponding audio sample, whereas the Flan-T5 embeddings give a better representation of the semantics of the text.

2. These text embeddings are projected to a shared embedding space through individual linear projections:

$$

\boldsymbol{P}_{1} = \boldsymbol{W}_{\text{CLAP}} \boldsymbol{E}_{1}; \quad \boldsymbol{P}_{2} = \boldsymbol{W}_{\text{T5}}\boldsymbol{E}_{2}

$$

In the `diffusers` implementation, these projections are defined by the [AudioLDM2ProjectionModel](https://huggingface.co./docs/diffusers/api/pipelines/audioldm2/AudioLDM2ProjectionModel).

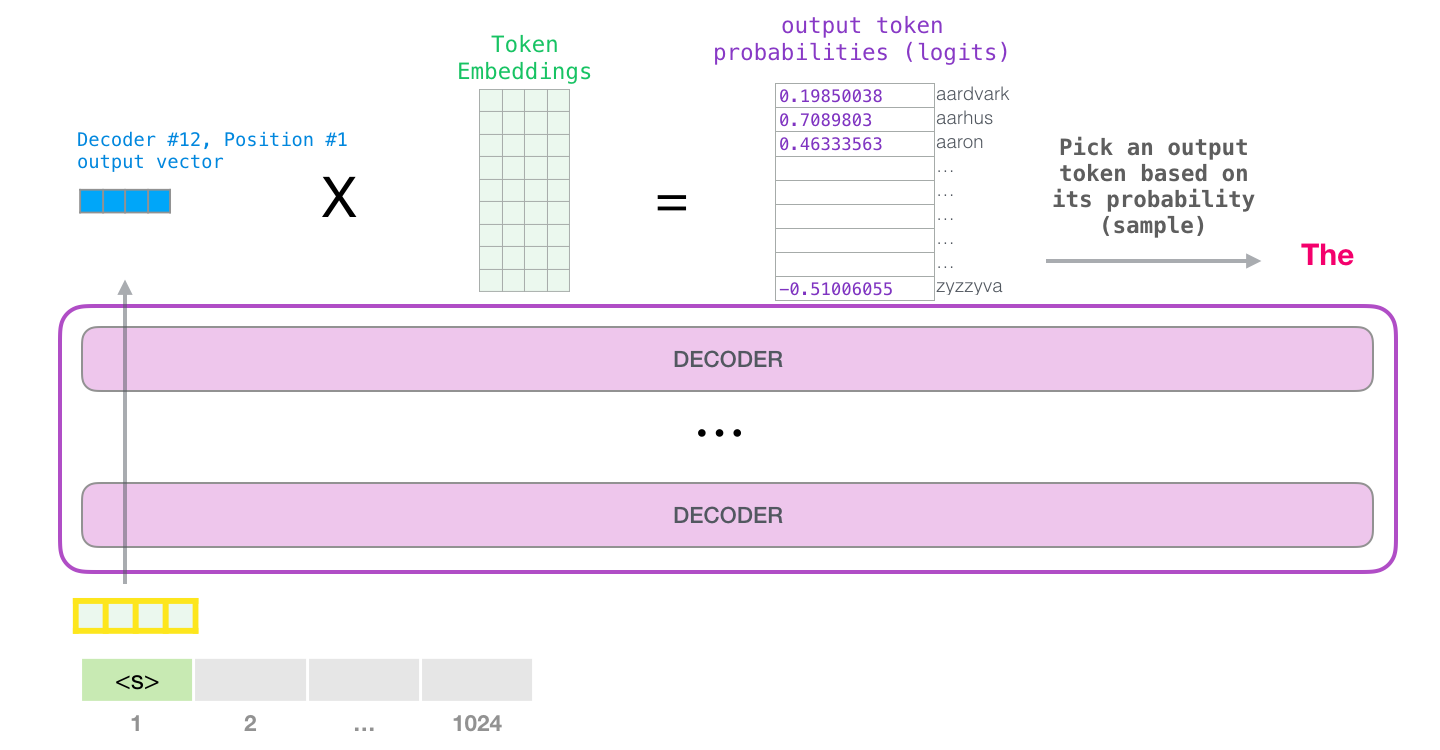

3. A [GPT2](https://huggingface.co./docs/transformers/main/en/model_doc/gpt2) language model (LM) is used to auto-regressively generate a sequence of \\(N\\) new embedding vectors, conditional on the projected CLAP and Flan-T5 embeddings:

$$

\tilde{\boldsymbol{E}}_{i} = \text{GPT2}\left(\boldsymbol{P}_{1}, \boldsymbol{P}_{2}, \tilde{\boldsymbol{E}}_{1:i-1}\right) \qquad \text{for } i=1,\dots,N

$$

4. The generated embedding vectors \\(\tilde{\boldsymbol{E}}_{1:N}\\) and Flan-T5 text embeddings \\(\boldsymbol{E}_{2}\\) are used as cross-attention conditioning in the LDM, which *de-noises*

a random latent via a reverse diffusion process. The LDM is run in the reverse diffusion process for a total of \\(T\\) inference steps:

$$

\boldsymbol{z}_{t} = \text{LDM}\left(\boldsymbol{z}_{t-1} | \tilde{\boldsymbol{E}}_{1:N}, \boldsymbol{E}_{2}\right) \qquad \text{for } t = 1, \dots, T

$$

where the initial latent variable \\(\boldsymbol{z}_{0}\\) is drawn from a normal distribution \\(\mathcal{N} \left(\boldsymbol{0}, \boldsymbol{I} \right)\\).

The [UNet](https://huggingface.co./docs/diffusers/api/pipelines/audioldm2/AudioLDM2UNet2DConditionModel) of the LDM is unique in

the sense that it takes **two** sets of cross-attention embeddings, \\(\tilde{\boldsymbol{E}}_{1:N}\\) from the GPT2 language model and \\(\boldsymbol{E}_{2}\\)

from Flan-T5, as opposed to one cross-attention conditioning as in most other LDMs.

5. The final de-noised latents \\(\boldsymbol{z}_{T}\\) are passed to the VAE decoder to recover the Mel spectrogram \\(\boldsymbol{s}\\):

$$

\boldsymbol{s} = \text{VAE}_{\text{dec}} \left(\boldsymbol{z}_{T}\right)

$$

6. The Mel spectrogram is passed to the vocoder to obtain the output audio waveform \\(\boldsymbol{y}\\):

$$

\boldsymbol{y} = \text{Vocoder}\left(\boldsymbol{s}\right)

$$

The diagram below demonstrates how a text input is passed through the text conditioning models, with the two prompt embeddings used as cross-conditioning in the LDM:

<p align="center">

<img src="https://huggingface.co./datasets/huggingface/documentation-images/resolve/main/blog/161_audioldm2/audioldm2.png?raw=true" width="600"/>

</p>

For full details on how the AudioLDM 2 model is trained, the reader is referred to the [AudioLDM 2 paper](https://arxiv.org/abs/2308.05734).

Hugging Face 🧨 Diffusers provides an end-to-end inference pipeline class [`AudioLDM2Pipeline`](https://huggingface.co./docs/diffusers/main/en/api/pipelines/audioldm2) that wraps this multi-stage generation process into a single callable object, enabling you to generate audio samples from text in just a few lines of code.

AudioLDM 2 comes in three variants. Two of these checkpoints are applicable to the general task of text-to-audio generation. The third checkpoint is trained exclusively on text-to-music generation. See the table below for details on the three official checkpoints, which can all be found on the [Hugging Face Hub](https://huggingface.co./models?search=cvssp/audioldm2):

| Checkpoint | Task | Model Size | Training Data / h |

| | [

[

"audio",

"research",

"implementation",

"optimization"

]

] | [

"2629e041-8c70-4026-8651-8bb91fd9749a"

] | [

"submitted"

] | [

"audio",

"implementation",

"optimization",

"research"

] | null | null |

52178b63-05af-4cfc-bdaf-453b268b7ffd | completed | 2025-01-16T03:09:11.596489 | 2025-01-19T19:13:35.377297 | 856ae484-db41-42df-980a-515a295dcb74 | Using Machine Learning to Aid Survivors and Race through Time | merve, adirik | using-ml-for-disasters.md | On February 6, 2023, earthquakes measuring 7.7 and 7.6 hit South Eastern Turkey, affecting 10 cities and resulting in more than 42,000 deaths and 120,000 injured as of February 21.

A few hours after the earthquake, a group of programmers started a Discord server to roll out an application called *afetharita*, literally meaning *disaster map*. This application would help search and rescue teams and volunteers find survivors and bring them help. The need for such an app arose when survivors posted screenshots of texts with their addresses and what they needed (including rescue) on social media. Some survivors also tweeted what they needed so their relatives knew they were alive and that they needed rescue. Needing to extract information from these tweets, we developed various applications to turn them into structured data and raced against time in developing and deploying these apps.

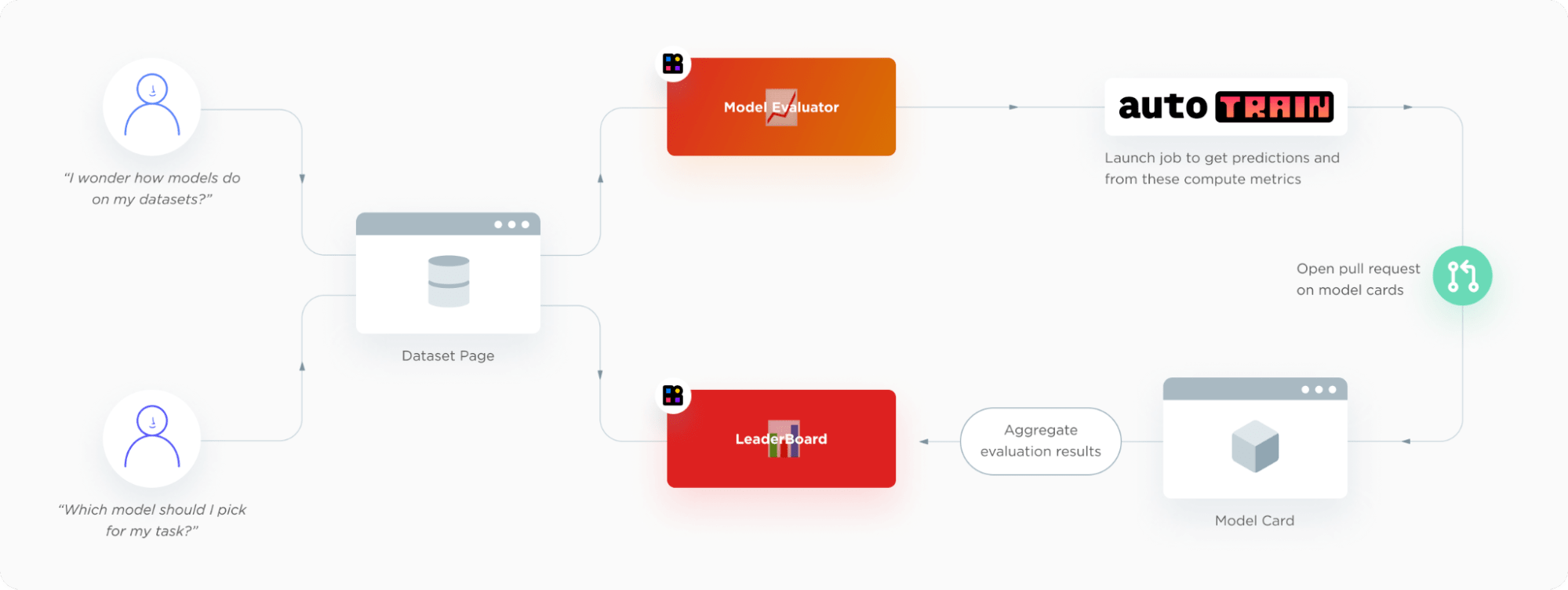

When I got invited to the Discord server, there was quite a lot of chaos regarding how we (volunteers) would operate and what we would do. We decided to collaboratively train models, so we needed a model and dataset registry. We opened a Hugging Face organization account and collaborated through pull requests to build ML-based applications to receive and process information.

We had been told by volunteers in other teams that there was a need for an application to post screenshots, extract information from the screenshots, structure it, and write the structured information to the database. We started developing an application that would take a given image, extract the text first, and from the text, extract a name, telephone number, and address and write this information to a database that would be handed to authorities. After experimenting with various open-source OCR tools, we started using `easyocr` for the OCR part and `Gradio` for building an interface for this application. We were asked to build a standalone application for OCR as well, so we opened endpoints from the interface. The text output from OCR is parsed using a fine-tuned transformers-based NER model.

To collaborate and improve the application, we hosted it on Hugging Face Spaces, and we received a GPU grant to keep the application up and running. The Hugging Face Hub team set up a CI bot that gave us an ephemeral environment, so we could see how a pull request would affect the Space, which helped us during pull request reviews.

Later on, we were given labeled content from various channels (e.g. Twitter, Discord) with raw tweets of survivors' calls for help, along with the addresses and personal information extracted from them. We started experimenting both with few-shot prompting of closed-source models and with fine-tuning our own token classification model from transformers. We used [bert-base-turkish-cased](https://huggingface.co./dbmdz/bert-base-turkish-cased) as a base model for token classification and came up with the first address extraction model.

The model was later used in `afetharita` to extract addresses. The parsed addresses would be sent to a geocoding API to obtain longitude and latitude, and the geolocation would then be displayed on the front-end map. For inference, we used the Inference API, which hosts models for inference and is automatically enabled when a model is pushed to the Hugging Face Hub. Using the Inference API for serving saved us from pulling the model, writing an app, building a Docker image, setting up CI/CD, and deploying the model to a cloud instance, all of which would have been extra overhead for the DevOps and cloud teams as well. Hugging Face teams provided us with more replicas so that there would be no downtime and the application would be robust against a lot of traffic.

Later on, we were asked if we could extract what earthquake survivors needed from a given tweet. We were given data with multiple labels for multiple needs in a given tweet, and these needs could be shelter, food, or logistics, as it was freezing cold over there. We first experimented with zero-shot classification using open-source NLI models on the Hugging Face Hub and with few-shot prompting of closed-source generative model endpoints. We tried [xlm-roberta-large-xnli](https://huggingface.co./joeddav/xlm-roberta-large-xnli) and [convbert-base-turkish-mc4-cased-allnli_tr](https://huggingface.co./emrecan/convbert-base-turkish-mc4-cased-allnli_tr). NLI models were particularly useful as we could directly infer with candidate labels and change the labels as data drift occurred, whereas generative models could have made up labels and caused mismatches when giving responses to the backend. We initially didn’t have labeled data, so anything would work.

In the end, we decided to fine-tune our own model, as it would take roughly three minutes to fine-tune BERT’s text classification head on a single GPU. We ran a labelling effort to develop the dataset to train this model. We logged our experiments in the model card’s metadata so we could later come up with a leaderboard to keep track of which model should be deployed to production. For the base model, we tried [bert-base-turkish-uncased](https://huggingface.co./loodos/bert-base-turkish-uncased) and [bert-base-turkish-128k-cased](https://huggingface.co./dbmdz/bert-base-turkish-128k-cased) and realized they perform better than [bert-base-turkish-cased](https://huggingface.co./dbmdz/bert-base-turkish-cased). You can find our leaderboard [here](https://huggingface.co./spaces/deprem-ml/intent-leaderboard).

Considering the task at hand and the imbalance of our data classes, we focused on eliminating false negatives and created a Space to benchmark the recall and F1-scores of all models. To do this, we added the metadata tag `deprem-clf-v1` to all relevant model repos and used this tag to automatically retrieve the logged F1 and recall scores and rank models. We had a separate benchmark set to avoid leakage to the train set and consistently benchmark our models. We also benchmarked each model to identify the best threshold per label for deployment.
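To make the per-label threshold search concrete, here is a minimal sketch in plain Python. It is not the code we ran: the function names, the candidate grid, and the toy scores are all assumptions for illustration. It picks, for one label, the threshold with the best F1 on a held-out benchmark set and breaks ties in favor of higher recall.

```python
def recall_and_f1(y_true, y_pred):
    """Recall and F1 for one binary label."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    fp = sum(1 for t, p in zip(y_true, y_pred) if not t and p)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return recall, f1


def best_threshold(y_true, scores,
                   candidates=(0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9)):
    """Return (threshold, recall, f1) maximising F1, then recall."""
    best = None
    for thr in candidates:
        y_pred = [s >= thr for s in scores]
        recall, f1 = recall_and_f1(y_true, y_pred)
        # Keep the first candidate that strictly improves (f1, recall).
        if best is None or (f1, recall) > (best[2], best[1]):
            best = (thr, recall, f1)
    return best


# Toy benchmark for a single hypothetical label such as "shelter".
y_true = [1, 1, 0, 0, 1]
scores = [0.9, 0.4, 0.35, 0.1, 0.6]
print(best_threshold(y_true, scores))
```

Running the same search once per label gives one deployment threshold per label.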

We wanted our NER model to be evaluated and crowd-sourced the effort because the data labelers were working to give us better and updated intent datasets. To evaluate the NER model, we’ve set up a labeling interface using `Argilla` and `Gradio`, where people could input a tweet and flag the output as correct/incorrect/ambiguous.

Later, the dataset was deduplicated and used to benchmark our further experiments.

Another team within the machine learning effort worked with generative models (behind a gated API) to get the specific needs (as labels were too broad) as free text and pass the text as additional context to each posting. For this, they did prompt engineering, wrapped the API endpoints as a separate API, and deployed them on the cloud. We found that using few-shot prompting with LLMs helps adjust to fine-grained needs in the presence of rapidly developing data drift, as the only thing we need to adjust is the prompt and we do not need any labeled data for this.

These models are currently being used in production to create the points in the heat map below so that volunteers and search and rescue teams can bring the needs to survivors.

We’ve realized that if it weren’t for the Hugging Face Hub and the ecosystem, we wouldn’t have been able to collaborate, prototype, and deploy this fast. Below is our MLOps pipeline for address recognition and intent classification models.

There are tens of volunteers behind this application and its individual components, who worked with no sleep to get these out in such a short time.

## Remote Sensing Applications

Other teams worked on remote sensing applications to assess the damage to buildings and infrastructure in an effort to direct search and rescue operations. The lack of electricity and stable mobile networks during the first 48 hours of the earthquake, combined with collapsed roads, made it extremely difficult to assess the extent of the damage and where help was needed. The search and rescue operations were also heavily affected by false reports of collapsed and damaged buildings due to the difficulties in communication and transportation.

To address these issues and create open source tools that can be leveraged in the future, we started by collecting pre and post-earthquake satellite images of the affected zones from Planet Labs, Maxar and Copernicus Open Access Hub.

Our initial approach was to rapidly label satellite images for object detection and instance segmentation, with a single category for "buildings". The aim was to evaluate the extent of damage by comparing the number of surviving buildings in pre- and post-earthquake images collected from the same area. In order to make it easier to train models, we started by cropping 1080x1080 satellite images into smaller 640x640 chunks. Next, we fine-tuned [YOLOv5](https://huggingface.co./spaces/deprem-ml/deprem_satellite_test), YOLOv8 and EfficientNet models for building detection and a [SegFormer](https://huggingface.co./spaces/deprem-ml/deprem_satellite_semantic_whu) model for semantic segmentation of buildings, and deployed these apps as Hugging Face Spaces.
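As an illustration of the cropping step, the sketch below computes crop boxes that cover a 1080x1080 image with 640x640 tiles. The overlapping layout is an assumption made for this example (the exact layout we used is not described above), and `tile_offsets` and `tile_boxes` are hypothetical helper names.

```python
def tile_offsets(size, tile):
    """Start offsets of tiles of length `tile` that together cover `size` pixels."""
    if tile >= size:
        return [0]
    n_tiles = -(-size // tile)  # ceiling division
    # Spread the tiles evenly so the last one ends exactly at the image border.
    step = (size - tile) / (n_tiles - 1)
    return [round(i * step) for i in range(n_tiles)]


def tile_boxes(width, height, tile):
    """(left, top, right, bottom) boxes, listed row by row."""
    return [
        (left, top, left + tile, top + tile)
        for top in tile_offsets(height, tile)
        for left in tile_offsets(width, tile)
    ]


boxes = tile_boxes(1080, 1080, 640)
for box in boxes:
    print(box)
```

Each box uses the (left, upper, right, lower) order that image libraries such as Pillow expect for cropping. With these sizes, four tiles overlap by 200 pixels in each direction.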

Once again, dozens of volunteers worked on labeling, preparing data, and training models. In addition to individual volunteers, companies like [Co-One](https://co-one.co/) volunteered to label satellite data with more detailed annotations for buildings and infrastructure, including *no damage*, *destroyed*, *damaged*, *damaged facility,* and *undamaged facility* labels. Our current objective is to release an extensive open-source dataset that can expedite search and rescue operations worldwide in the future.

## Wrapping Up

For this extreme use case, we had to move fast and optimize over classification metrics where even a one percent improvement mattered. There were many ethical discussions in the process, as even picking the metric to optimize over was an ethical question. We have seen how open-source machine learning and democratization enable individuals to build life-saving applications.

We are thankful to the community behind Hugging Face for releasing these models and datasets, and to the team at Hugging Face for their infrastructure and MLOps support. | [

[

"data",

"implementation",

"community",

"text_classification"

]

] | [

"2629e041-8c70-4026-8651-8bb91fd9749a"

] | [

"submitted"

] | [

"data",

"text_classification",

"community",

"implementation"

] | null | null |

5fa1a269-bacb-410c-9a09-5be50175f97a | completed | 2025-01-16T03:09:11.596494 | 2025-01-19T19:12:11.142761 | 610edbad-fe3c-493c-a9e9-3d95a5b4d895 | An Introduction to Q-Learning Part 1 | ThomasSimonini | deep-rl-q-part1.md | <h2>Unit 2, part 1 of the <a href="https://github.com/huggingface/deep-rl-class">Deep Reinforcement Learning Class with Hugging Face 🤗</a></h2>

⚠️ A **new updated version of this article is available here** 👉 [https://huggingface.co./deep-rl-course/unit2/introduction](https://huggingface.co./deep-rl-course/unit2/introduction)

*This article is part of the Deep Reinforcement Learning Class. A free course from beginner to expert. Check the syllabus [here.](https://huggingface.co./deep-rl-course/unit0/introduction)*

<img src="assets/70_deep_rl_q_part1/thumbnail.gif" alt="Thumbnail"/> | [

[

"research",

"implementation",

"tutorial",

"robotics"

]

] | [

"2629e041-8c70-4026-8651-8bb91fd9749a"

] | [

"submitted"

] | [

"tutorial",

"implementation",

"research",

"robotics"

] | null | null |

ac3dbcb1-7a08-4028-b08c-071553560797 | completed | 2025-01-16T03:09:11.596499 | 2025-01-19T18:56:46.042767 | 78d5ff5e-e703-418c-860b-5237090c90b5 | Huggy Lingo: Using Machine Learning to Improve Language Metadata on the Hugging Face Hub | davanstrien | huggylingo.md | **tl;dr**: We're using machine learning to detect the language of Hub datasets with no language metadata, and [librarian-bots](https://huggingface.co./librarian-bots) to make pull requests to add this metadata.

The Hugging Face Hub has become the repository where the community shares machine learning models, datasets, and applications. As the number of datasets grows, metadata becomes increasingly important as a tool for finding the right resource for your use case.

In this blog post, I'm excited to share some early experiments which seek to use machine learning to improve the metadata for datasets hosted on the Hugging Face Hub.

### Language metadata for datasets on the Hub

There are currently ~50K public datasets on the Hugging Face Hub. Metadata about the language used in a dataset can be specified using a [YAML](https://en.wikipedia.org/wiki/YAML) field at the top of the [dataset card](https://huggingface.co./docs/datasets/upload_dataset#create-a-dataset-card).

Across all public datasets, 1,716 unique languages are specified via a language tag in the metadata. Note that some of these are the result of the same language being specified in different ways, e.g. `en` vs `eng` vs `english` vs `English`.

For example, the [IMDB dataset](https://huggingface.co./datasets/imdb) specifies `en` in the YAML metadata (indicating English):

*Section of the YAML metadata for the IMDB dataset*

It is perhaps unsurprising that English is by far the most common language for datasets on the Hub, with around 19% of datasets on the Hub listing their language as `en` (not including any variations of `en`, so the actual percentage is likely much higher).

*The frequency and percentage frequency for datasets on the Hugging Face Hub*

What does the distribution of languages look like if we exclude English? We can see that there is a grouping of a few dominant languages and after that there is a pretty smooth fall in the frequencies at which languages appear.

*Distribution of language tags for datasets on the hub excluding English*

However, there is a major caveat to this. Most datasets (around 87%) do not specify any language at all!

*The percent of datasets which have language metadata. True indicates language metadata is specified, False means no language data is listed. No card data means that there isn't any metadata or it couldn't be loaded by the `huggingface_hub` Python library.*

#### Why is language metadata important?

Language metadata can be a vital tool for finding relevant datasets. The Hugging Face Hub allows you to filter datasets by language. For example, if we want to find datasets with Dutch language we can use [a filter](https://huggingface.co./datasets?language=language:nl&sort=trending) on the Hub to include only datasets with Dutch data.

Currently this filter returns 184 datasets. However, there are datasets on the Hub which include Dutch but don't specify this in the metadata. These datasets become more difficult to find, particularly as the number of datasets on the Hub grows.

Many people want to be able to find datasets for a particular language. One of the major barriers to training good open source LLMs for a particular language is a lack of high quality training data.

If we switch to the task of finding relevant machine learning models, knowing what languages were included in the training data for a model can help us find models for the language we are interested in. This relies on the dataset specifying this information.

Finally, knowing what languages are represented on the Hub (and which are not), helps us understand the language biases of the Hub and helps inform community efforts to address gaps in particular languages.

### Predicting the languages of datasets using machine learning

We’ve already seen that many of the datasets on the Hugging Face Hub haven’t included metadata for the language used. However, since these datasets are already shared openly, perhaps we can look at the dataset and try to identify the language using machine learning.

#### Getting the data

One way we could access some examples from a dataset is by using the datasets library to download the datasets i.e.

```python

from datasets import load_dataset

dataset = load_dataset("biglam/on_the_books")

```

However, for some of the datasets on the Hub, we might be keen not to download the whole dataset. We could instead try to load a sample of the dataset. However, depending on how the dataset was created, we might still end up downloading more data than we’d need onto the machine we’re working on.

Luckily, many datasets on the Hub are available via the [dataset viewer API](https://huggingface.co./docs/datasets-server/index). It allows us to access datasets hosted on the Hub without downloading the dataset locally. The API powers the dataset viewer you will see for many datasets hosted on the Hub.

For this first experiment with predicting language for datasets, we define a list of column names and data types likely to contain textual content, i.e. `text` or `prompt` column names and `string` features are likely to be relevant, whereas `image` is not. This means we can avoid predicting the language for datasets where language information is less relevant, for example, image classification datasets. We use the dataset viewer API to get 20 rows of text data to pass to a machine learning model (we could modify this to take more or fewer examples from the dataset).

This approach means that for the majority of datasets on the Hub we can quickly request the contents of likely text columns for the first 20 rows in a dataset.
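A rough sketch of the column selection idea is shown below. It works on a plain dictionary of column names and data types rather than on a real dataset viewer API response, and the helper name `likely_text_columns` is made up for this example.

```python
# Column names called out above as likely to hold text.
LIKELY_TEXT_NAMES = {"text", "prompt"}


def likely_text_columns(features):
    """Pick string-typed columns, listing well-known text column names first.

    `features` maps column name -> data type, e.g. {"text": "string"}.
    """
    string_columns = [name for name, dtype in features.items() if dtype == "string"]
    # sorted() is stable, so columns keep their original order within each group.
    return sorted(string_columns, key=lambda name: name.lower() not in LIKELY_TEXT_NAMES)


features = {"title": "string", "image": "image", "label": "int64", "prompt": "string"}
print(likely_text_columns(features))

# An image classification dataset yields no candidate columns and can be skipped.
print(likely_text_columns({"image": "image", "label": "int64"}))
```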

#### Predicting the language of a dataset

Once we have some examples of text from a dataset, we need to predict the language. There are various options here, but for this work, we used the [facebook/fasttext-language-identification](https://huggingface.co./facebook/fasttext-language-identification) fastText model created by [Meta](https://huggingface.co./facebook) as part of the [No Language Left Behind](https://ai.facebook.com/research/no-language-left-behind/) work. This model can detect 217 languages which will likely represent the majority of languages for datasets hosted on the Hub.

We pass 20 examples to the model representing rows from a dataset. This results in 20 individual language predictions (one per row) for each dataset.

Once we have these predictions, we do some additional filtering to determine if we will accept the predictions as a metadata suggestion. This roughly consists of:

- Grouping the predictions for each dataset by language: some datasets return predictions for multiple languages. We group these predictions by the language predicted i.e. if a dataset returns predictions for English and Dutch, we group the English and Dutch predictions together.

- For datasets with multiple languages predicted, we count how many predictions we have for each language. If a language is predicted less than 20% of the time, we discard this prediction. i.e. if we have 18 predictions for English and only 2 for Dutch we discard the Dutch predictions.

- We calculate the mean score for all predictions for a language. If the mean score associated with a language's predictions is below 80%, we discard this prediction.
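The filtering steps above can be sketched in a few lines of Python. This is an illustrative version rather than the exact code used; the function name and the `(language, score)` input format are assumptions.

```python
from collections import defaultdict


def filter_predictions(predictions, min_share=0.2, min_mean_score=0.8):
    """Filter row-level language predictions for one dataset.

    `predictions` is a list of (language, score) pairs, one per row.
    Returns {language: mean_score} for the languages that pass both filters.
    """
    # Step 1: group the scores by predicted language.
    scores_by_language = defaultdict(list)
    for language, score in predictions:
        scores_by_language[language].append(score)

    kept = {}
    for language, scores in scores_by_language.items():
        # Step 2: drop languages predicted for fewer than 20% of rows.
        if len(scores) / len(predictions) < min_share:
            continue
        # Step 3: drop languages whose mean score is below 80%.
        mean_score = sum(scores) / len(scores)
        if mean_score < min_mean_score:
            continue
        kept[language] = mean_score
    return kept


# 18 rows predicted as English and 2 as Dutch: the Dutch predictions are discarded.
rows = [("eng_Latn", 0.95)] * 18 + [("nld_Latn", 0.90)] * 2
print(filter_predictions(rows))
```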

Once we’ve done this filtering, we have a further step of deciding how to use these predictions. The fastText language prediction model returns predictions as an [ISO 639-3](https://en.wikipedia.org/wiki/ISO_639-3) code (an international standard for language codes) along with a script type. For example, `kor_Hang` combines the ISO 639-3 language code for Korean (`kor`) with Hangul (`Hang`), an [ISO 15924](https://en.wikipedia.org/wiki/ISO_15924) code representing the script of a language.

We discard the script information since this isn't currently captured consistently as metadata on the Hub and, where possible, we convert the language prediction returned by the model from [ISO 639-3](https://en.wikipedia.org/wiki/ISO_639-3) to [ISO 639-1](https://en.wikipedia.org/wiki/ISO_639-1) language codes. This is largely done because these language codes have better support in the Hub UI for navigating datasets.

For some ISO 639-3 codes, there is no ISO 639-1 equivalent. For these cases we manually specify a mapping if we deem it to make sense, for example Standard Arabic (`arb`) is mapped to Arabic (`ar`). Where an obvious mapping is not possible, we currently don't suggest metadata for this dataset. In future iterations of this work we may take a different approach. It is important to recognise this approach does come with downsides, since it reduces the diversity of languages which might be suggested and also relies on subjective judgments about what languages can be mapped to others.
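Here is a minimal sketch of that conversion. The mapping table is a tiny illustrative subset, not the full table used in this work, and `to_hub_language` is a hypothetical helper name. The `arb` to `ar` entry is the manual mapping mentioned above.

```python
# Illustrative subset only: ISO 639-3 code -> ISO 639-1 code.
ISO_639_3_TO_1 = {
    "eng": "en",
    "nld": "nl",
    "kor": "ko",
    "arb": "ar",  # manual mapping: Standard Arabic -> Arabic
}

FASTTEXT_PREFIX = "__label__"


def to_hub_language(label):
    """Turn a label such as `kor_Hang` into a two-letter code, or None."""
    if label.startswith(FASTTEXT_PREFIX):
        label = label[len(FASTTEXT_PREFIX):]
    # Keep the language part and discard the ISO 15924 script part.
    language, _, _script = label.partition("_")
    # No obvious mapping means no metadata suggestion for this dataset.
    return ISO_639_3_TO_1.get(language)


print(to_hub_language("kor_Hang"))
print(to_hub_language("__label__arb_Arab"))
print(to_hub_language("xyz_Latn"))
```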

But the process doesn't stop here. After all, what use is predicting the language of the datasets if we can't share that information with the rest of the community?

### Using Librarian-Bot to Update Metadata

To ensure this valuable language metadata is incorporated back into the Hub, we turn to Librarian-Bot! Librarian-Bot takes the language predictions generated by Meta's [facebook/fasttext-language-identification](https://huggingface.co./facebook/fasttext-language-identification) fastText model and opens pull requests to add this information to the metadata of each respective dataset.

This automated system not only updates the datasets with language information, but also does it swiftly and efficiently, without requiring manual work from humans. Once these pull requests are approved and merged, the language metadata becomes available for all users, significantly enhancing the usability of the Hugging Face Hub. You can keep track of what the librarian-bot is doing [here](https://huggingface.co./librarian-bot/activity/community)!

#### Next steps

As the number of datasets on the Hub grows, metadata becomes increasingly important. Language metadata, in particular, can be incredibly valuable for identifying the correct dataset for your use case.

With the assistance of the dataset viewer API and the [Librarian-Bots](https://huggingface.co./librarian-bots), we can update our dataset metadata at a scale that wouldn't be possible manually. As a result, we're enriching the Hub and making it an even more powerful tool for data scientists, linguists, and AI enthusiasts around the world.

As the machine learning librarian at Hugging Face, I continue exploring opportunities for automatic metadata enrichment for machine learning artefacts hosted on the Hub. Feel free to reach out (daniel at thiswebsite dot co) if you have ideas or want to collaborate on this effort! | [

[

"data",

"implementation",

"community",

"tools"

]

] | [

"2629e041-8c70-4026-8651-8bb91fd9749a"

] | [

"submitted"

] | [

"data",

"tools",

"community",

"implementation"

] | null | null |

eca4dc4b-ba79-4050-b063-1534ef96f331 | completed | 2025-01-16T03:09:11.596505 | 2025-01-19T17:19:01.245026 | 75481bad-44e8-49d0-8964-692927239a80 | Fine-tuning 20B LLMs with RLHF on a 24GB consumer GPU | edbeeching, ybelkada, lvwerra, smangrul, lewtun, kashif | trl-peft.md | We are excited to officially release the integration of `trl` with `peft` to make Large Language Model (LLM) fine-tuning with Reinforcement Learning more accessible to anyone! In this post, we explain why this is a competitive alternative to existing fine-tuning approaches.

Note `peft` is a general tool that can be applied to many ML use-cases but it’s particularly interesting for RLHF as this method is especially memory-hungry!

If you want to directly deep dive into the code, check out the example scripts directly on the [documentation page of TRL](https://huggingface.co./docs/trl/main/en/sentiment_tuning_peft).

## Introduction

### LLMs & RLHF

LLMs combined with RLHF (Reinforcement Learning with Human Feedback) seem to be the next go-to approach for building very powerful AI systems such as ChatGPT.

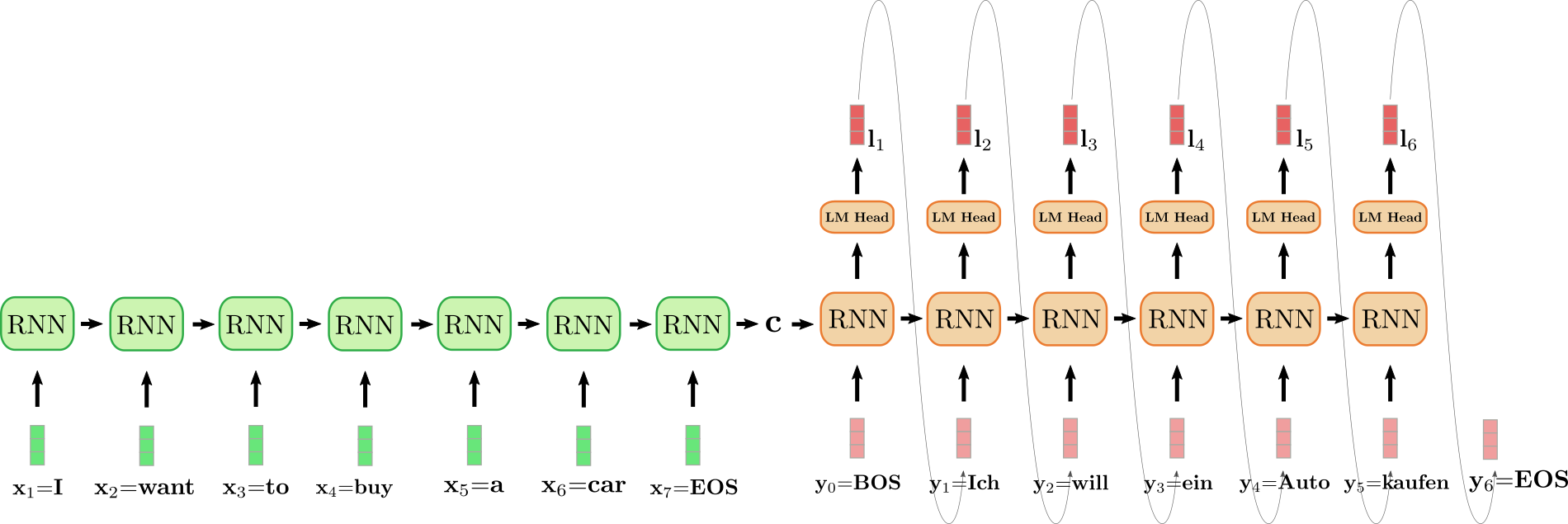

Training a language model with RLHF typically involves the following three steps:

1- Fine-tune a pretrained LLM on a specific domain or corpus of instructions and human demonstrations

2- Collect a human annotated dataset and train a reward model

3- Further fine-tune the LLM from step 1 with the reward model and this dataset using RL (e.g. PPO)

|  |

|:--:|

| <b>Overview of ChatGPT's training protocol, from the data collection to the RL part. Source: <a href="https://openai.com/blog/chatgpt" rel="noopener" target="_blank" >OpenAI's ChatGPT blogpost</a> </b>|

The choice of the base LLM is quite crucial here. At the time of writing, the “best” open-source LLMs that can be used “out-of-the-box” for many tasks are instruction-finetuned LLMs. Notable models include [BLOOMZ](https://huggingface.co./bigscience/bloomz), [Flan-T5](https://huggingface.co./google/flan-t5-xxl), [Flan-UL2](https://huggingface.co./google/flan-ul2), and [OPT-IML](https://huggingface.co./facebook/opt-iml-max-30b). The downside of these models is their size. To get a decent model, you need to work with models at the 10B+ scale, which require up to 40GB of GPU memory in full precision just to fit the model on a single GPU device, without doing any training at all!

### What is TRL?

The `trl` library aims at making the RL step much easier and more flexible so that anyone can fine-tune their LM using RL on their custom dataset and training setup. Among many other applications, you can use this algorithm to fine-tune a model to generate [positive movie reviews](https://huggingface.co./docs/trl/sentiment_tuning), do [controlled generation](https://github.com/lvwerra/trl/blob/main/examples/sentiment/notebooks/gpt2-sentiment-control.ipynb) or [make the model less toxic](https://huggingface.co./docs/trl/detoxifying_a_lm).

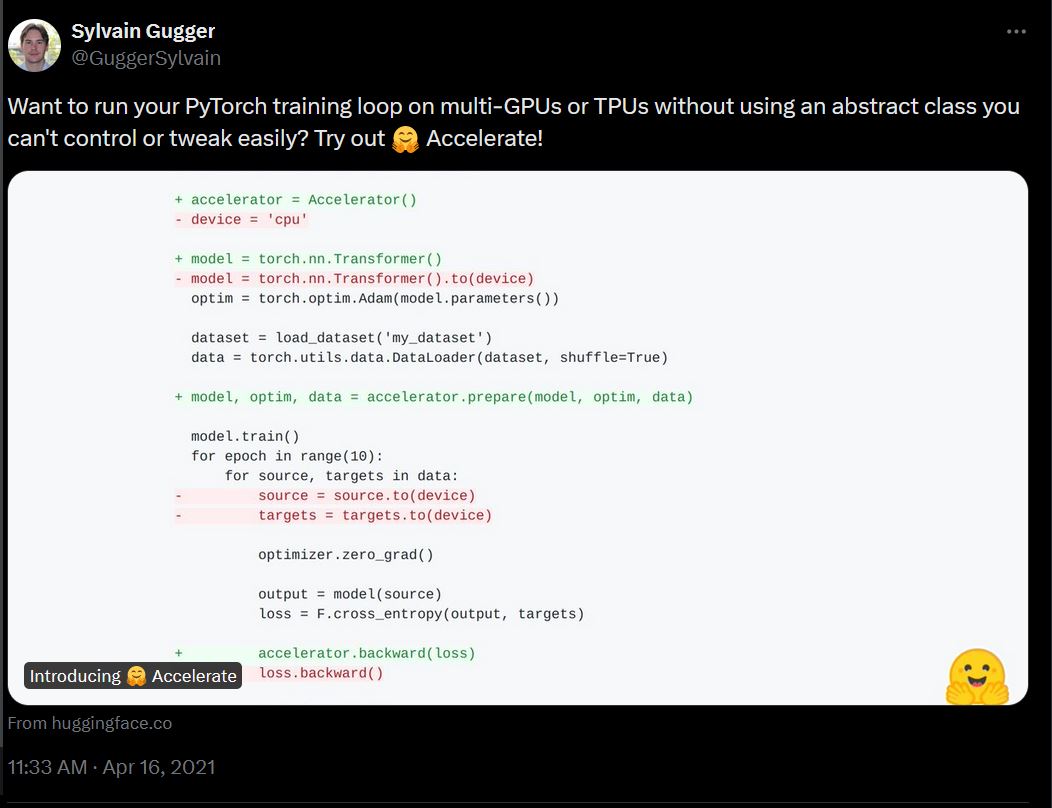

Using `trl` you can run one of the most popular Deep RL algorithms, [PPO](https://huggingface.co./deep-rl-course/unit8/introduction?fw=pt), in a distributed manner or on a single device! We leverage `accelerate` from the Hugging Face ecosystem to make this possible, so that any user can scale their experiments up to an interesting size.

Fine-tuning a language model with RL follows roughly the protocol detailed below. This requires having 2 copies of the original model: to avoid the active model deviating too much from its original behavior / distribution, you need to compute the logits of the reference model at each optimization step. This adds a hard constraint on the optimization process, as you always need at least two copies of the model per GPU device. If the model grows in size, it becomes more and more tricky to fit the setup on a single GPU.

|  |

|:--:|

| <b>Overview of the PPO training setup in TRL.</b>|

In `trl` you can also use shared layers between reference and active models to avoid entire copies. A concrete example of this feature is showcased in the detoxification example.

### Training at scale

Training at scale can be challenging. The first challenge is fitting the model and its optimizer states on the available GPU devices. The amount of GPU memory a single parameter takes depends on its “precision” (or more specifically its `dtype`). The most common `dtype`s are `float32` (32-bit), `float16`, and `bfloat16` (16-bit). More recently, “exotic” precisions such as `int8` (8-bit) have become supported out-of-the-box for training and inference (with certain conditions and constraints). In a nutshell, to load a model on a GPU device, each billion parameters costs 4GB in float32 precision, 2GB in float16, and 1GB in int8. If you would like to learn more about this topic, have a look at this blogpost which dives deeper: [https://huggingface.co./blog/hf-bitsandbytes-integration](https://huggingface.co./blog/hf-bitsandbytes-integration).

If you use an AdamW optimizer each parameter needs 8 bytes (e.g. if your model has 1B parameters, the full AdamW optimizer of the model would require 8GB GPU memory - [source](https://huggingface.co./docs/transformers/v4.20.1/en/perf_train_gpu_one)).
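The rules of thumb above can be turned into a small calculator. This is a back-of-the-envelope sketch: the helper names are made up for illustration, 1 GB is taken to be 1e9 bytes, and activations, gradients and framework overhead are deliberately ignored.

```python
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "bfloat16": 2, "int8": 1}
ADAMW_BYTES_PER_PARAM = 8  # two float32 moment estimates per trainable parameter


def weights_gb(n_params, dtype):
    """GPU memory (in GB, 1 GB = 1e9 bytes) needed just to hold the weights."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


def adamw_state_gb(n_trainable_params):
    """GPU memory (in GB) for the AdamW optimizer states."""
    return n_trainable_params * ADAMW_BYTES_PER_PARAM / 1e9


n_params = 20e9  # a 20B-parameter model
for dtype in BYTES_PER_PARAM:
    print(f"{dtype:>8}: {weights_gb(n_params, dtype):.0f} GB")
print(f"AdamW states for 1B trainable parameters: {adamw_state_gb(1e9):.0f} GB")
```

Note that the optimizer cost depends on the number of trainable parameters only, which is what makes freezing most of the model so attractive.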

Many techniques have been adopted to tackle these challenges at scale. The most familiar paradigms are Pipeline Parallelism, Tensor Parallelism, and Data Parallelism.

|  |

|:--:|

| <b>Image Credits to <a href="https://towardsdatascience.com/distributed-parallel-training-data-parallelism-and-model-parallelism-ec2d234e3214" rel="noopener" target="_blank" >this blogpost</a> </b>|

With data parallelism the same model is hosted in parallel on several machines and each instance is fed a different data batch. This is the most straightforward parallelism strategy, essentially replicating the single-GPU case, and is already supported by `trl`. With Pipeline and Tensor Parallelism the model itself is distributed across machines: in Pipeline Parallelism the model is split layer-wise, whereas Tensor Parallelism splits tensor operations across GPUs (e.g. matrix multiplications). With these Model Parallelism strategies, you need to shard the model weights across many devices, which requires you to define a communication protocol for the activations and gradients across processes. This is not trivial to implement and might require adopting a framework such as [`Megatron-DeepSpeed`](https://github.com/microsoft/Megatron-DeepSpeed) or [`Nemo`](https://github.com/NVIDIA/NeMo). It is also important to highlight other tools that are essential for scaling LLM training, such as adaptive activation checkpointing and fused kernels. Further reading about parallelism paradigms can be found [here](https://huggingface.co./docs/transformers/v4.17.0/en/parallelism).

Therefore, we asked ourselves the following question: how far can we go with just data parallelism? Can we use existing tools to fit super-large training processes (including active model, reference model and optimizer states) on a single device? The answer appears to be yes. The main ingredients are adapters and 8-bit matrix multiplication! Let us cover these topics in the following sections.

### 8-bit matrix multiplication

Efficient 8-bit matrix multiplication is a method that was first introduced in the LLM.int8() paper and aims to solve the performance degradation issue that appears when quantizing large-scale models. The proposed method breaks down the matrix multiplications that are applied under the hood in Linear layers into two stages: the outlier hidden states part, which is performed in float16, and the “non-outlier” part, which is performed in int8.

|  |

|:--:|

| <b>Two-stage matrix multiplication in [LLM.int8()](https://arxiv.org/abs/2208.07339): outlier hidden states are processed in float16 and the remaining values in int8. </b>|

In a nutshell, you can reduce the size of a full-precision model by a factor of 4 (and thus by a factor of 2 for half-precision models) if you use 8-bit matrix multiplication.
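The two-stage decomposition can be illustrated on a single vector-matrix product in plain Python. This is a simplified sketch, not the `bitsandbytes` kernels: it uses absmax quantization, keeps the outlier part in ordinary Python floats rather than float16, and the outlier threshold of 6.0 is an assumed value chosen for illustration.

```python
def absmax_quantize(values):
    """Map floats to integers in [-127, 127]; return the integers and the scale."""
    scale = max(abs(v) for v in values) / 127 or 1.0  # avoid a zero scale
    return [round(v / scale) for v in values], scale


def mixed_int8_matvec(x, W, threshold=6.0):
    """Approximate y = x @ W, where W holds one row per input feature."""
    outliers = {i for i, v in enumerate(x) if abs(v) >= threshold}
    regular = [i for i in range(len(x)) if i not in outliers]
    n_out = len(W[0])
    y = [0.0] * n_out

    # Stage 1: outlier features are multiplied in floating point.
    for i in outliers:
        for j in range(n_out):
            y[j] += x[i] * W[i][j]

    # Stage 2: everything else is quantized, multiplied as integers, then rescaled.
    if regular:
        xq, x_scale = absmax_quantize([x[i] for i in regular])
        for j in range(n_out):
            wq, w_scale = absmax_quantize([W[i][j] for i in regular])
            acc = sum(a * b for a, b in zip(xq, wq))  # integer accumulation
            y[j] += acc * x_scale * w_scale
    return y


x = [0.5, -1.2, 8.0, 0.3]  # the third feature is an outlier
W = [[0.2, -0.4], [0.7, 0.1], [-0.3, 0.9], [0.5, 0.6]]
exact = [sum(x[i] * W[i][j] for i in range(4)) for j in range(2)]
approx = mixed_int8_matvec(x, W)
print(exact, approx)
```

Keeping the large-magnitude feature out of the quantized path means the int8 scale is set by the small values, so they lose much less precision.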

### Low rank adaptation and PEFT

In 2021, a paper called LoRA: Low-Rank Adaptation of Large Language Models demonstrated that fine-tuning of large language models can be performed by freezing the pretrained weights and creating low-rank versions of the query and value attention matrices. These low-rank matrices have far fewer parameters than the original model, enabling fine-tuning with far less GPU memory. The authors demonstrate that fine-tuning low-rank adapters achieves results comparable to fine-tuning the full pretrained model.

|  |

|:--:|

| <b>The output activations of the original (frozen) pretrained weights (left) are augmented by a low rank adapter composed of weight matrices A and B (right). </b>|

This technique allows the fine-tuning of LLMs using a fraction of the memory requirements. There are, however, some downsides. The forward and backward passes are approximately twice as slow, due to the additional matrix multiplications in the adapter layers.
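A small pure-Python sketch helps to see both the parameter savings and the extra matrix multiplications. The layer size of 4096 and the rank of 8 are hypothetical values picked for illustration, and the helper names are not part of any library.

```python
def lora_param_counts(d_out, d_in, rank):
    """Parameters in a full weight matrix vs. in its low-rank adapter (B @ A)."""
    full = d_out * d_in
    adapter = rank * (d_in + d_out)  # A is (rank x d_in), B is (d_out x rank)
    return full, adapter


def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def lora_forward(W, A, B, x, alpha, rank):
    """h = W x + (alpha / rank) * B (A x); only A and B would receive gradients."""
    base = matvec(W, x)               # frozen pretrained path
    update = matvec(B, matvec(A, x))  # two extra, much smaller, multiplications
    return [b + (alpha / rank) * u for b, u in zip(base, update)]


full, adapter = lora_param_counts(d_out=4096, d_in=4096, rank=8)
print(f"full: {full:,}  adapter: {adapter:,}  ({100 * adapter / full:.2f}% of full)")

# Tiny rank-1 example: B starts at zero, so the adapter initially changes nothing.
W = [[1.0, 2.0], [3.0, 4.0]]
A = [[0.5, -0.5]]
B = [[0.0], [0.0]]
print(lora_forward(W, A, B, x=[1.0, 1.0], alpha=2, rank=1))
```

Because `B` is initialized to zero, training starts exactly from the behavior of the pretrained model, and only the small `A` and `B` matrices need optimizer states.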

### What is PEFT?

[Parameter-Efficient Fine-Tuning (PEFT)](https://github.com/huggingface/peft) is a Hugging Face library created to support the creation and fine-tuning of adapter layers on LLMs. `peft` is seamlessly integrated with 🤗 Accelerate for large scale models leveraging DeepSpeed and Big Model Inference.

The library supports many state of the art models and has an extensive set of examples, including:

- Causal language modeling

- Conditional generation

- Image classification

- 8-bit int8 training

- Low rank adaptation of Dreambooth models

- Semantic segmentation

- Sequence classification

- Token classification

The library is still under extensive and active development, with many upcoming features to be announced in the coming months.

## Fine-tuning 20B parameter models with Low Rank Adapters

Now that the prerequisites are out of the way, let us go through the entire pipeline step by step, and explain with figures how you can fine-tune a 20B parameter LLM with RL using the tools mentioned above on a single 24GB GPU!

### Step 1: Load your active model in 8-bit precision

|  |

|:--:|

| <b> Loading a model in 8-bit precision can save up to 4x memory compared to full precision model</b>|

A “free-lunch” memory reduction of an LLM using `transformers` is to load your model in 8-bit precision using the method described in LLM.int8(). This can be performed by simply adding the flag `load_in_8bit=True` when calling the `from_pretrained` method (you can read more about that [here](https://huggingface.co./docs/transformers/main/en/main_classes/quantization)).

As stated in the previous section, a “hack” to compute the amount of GPU memory you need to load your model is to think in terms of “billions of parameters”. As one byte is 8 bits, you need 4GB per billion parameters for a full-precision model (32 bits = 4 bytes), 2GB per billion parameters for a half-precision model, and 1GB per billion parameters for an int8 model.

So in the first place, let’s just load the active model in 8-bit. Let’s see what we need to do for the second step!
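As a rough illustration of this first step, the sketch below wraps the call in a helper. The helper name and the use of `device_map="auto"` are assumptions for illustration. Running it needs a CUDA GPU with `bitsandbytes` and `accelerate` installed, and recent `transformers` releases may prefer passing a quantization config object instead of the bare flag.

```python
def load_active_model_8bit(model_name="EleutherAI/gpt-neox-20b"):
    """Load a causal LM with its linear layers quantized to int8 (needs a CUDA GPU)."""
    # Imported lazily: these libraries are heavy and only needed when the function runs.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(
        model_name,
        load_in_8bit=True,  # the flag described above; requires `bitsandbytes`
        device_map="auto",  # let `accelerate` place the layers on the available device(s)
    )
    return model, tokenizer
```

Calling `model, tokenizer = load_active_model_8bit()` downloads the checkpoint on first use, so a smaller model name is a sensible choice for a first try.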

### Step 2: Add extra trainable adapters using `peft`

|  |

|:--:|

| <b> You can easily add adapters on a frozen 8-bit model, thus reducing the memory requirements of the optimizer states by training only a small fraction of the parameters</b>|

The second step is to load adapters inside the model and make these adapters trainable. This drastically reduces the number of trainable weights that are needed for the active model. This step leverages the `peft` library and can be performed with a few lines of code. Note that once the adapters are trained, you can easily push them to the Hub to use them later.
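The “few lines of code” could look roughly like the following sketch. The helper name and the hyperparameter values (`rank`, `alpha`, `dropout`) are illustrative assumptions, not the settings used for the experiments in this post.

```python
def add_lora_adapters(model, rank=16, alpha=32, dropout=0.05):
    """Wrap a (frozen, 8-bit) causal LM with trainable low-rank adapters."""
    from peft import LoraConfig, get_peft_model  # imported lazily, like the model itself

    config = LoraConfig(
        r=rank,                # rank of the adapter matrices
        lora_alpha=alpha,      # scaling factor applied to the adapter output
        lora_dropout=dropout,
        bias="none",           # leave the bias terms frozen
        task_type="CAUSAL_LM",
    )
    peft_model = get_peft_model(model, config)
    peft_model.print_trainable_parameters()  # reports trainable vs. total parameters
    return peft_model
```

The returned object behaves like the original model for forward passes and generation, while only the adapter weights require gradients and optimizer states.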

### Step 3: Use the same model to get the reference and active logits

|  |

|:--:|

| <b> You can easily disable and enable adapters using the `peft` API.</b>|

Since adapters can be deactivated, we can use the same model to get the reference and active logits for PPO, without having to create two copies of the same model! This leverages a feature of the `peft` library, the `disable_adapter` context manager.
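In code, the idea boils down to two forward passes through one model. The sketch below assumes that `peft_model` is an adapter-wrapped causal LM and that the context manager is exposed as `disable_adapter()`, which may differ between `peft` versions. The helper name is hypothetical.

```python
def active_and_reference_logits(peft_model, input_ids):
    """Return (active, reference) logits from a single adapter-wrapped model."""
    # Adapters enabled: this is the policy being optimized.
    active_logits = peft_model(input_ids=input_ids).logits
    # Adapters disabled: the frozen base model acts as the reference.
    with peft_model.disable_adapter():
        reference_logits = peft_model(input_ids=input_ids).logits
    return active_logits, reference_logits
```

In a real training loop the reference pass would typically run without gradient tracking, since the base weights are frozen anyway.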

### Overview of the training scripts:

We will now describe how we trained a 20B parameter [gpt-neox model](https://huggingface.co./EleutherAI/gpt-neox-20b) using `transformers`, `peft` and `trl`. The end goal of this example was to fine-tune an LLM to generate positive movie reviews in a memory-constrained setting. Similar steps could be applied for other tasks, such as dialogue models.

Overall there were three key steps and training scripts:

1. **[Script](https://github.com/lvwerra/trl/blob/main/examples/sentiment/scripts/gpt-neox-20b_peft/clm_finetune_peft_imdb.py)** - Fine tuning a Low Rank Adapter on a frozen 8-bit model for text generation on the imdb dataset.

2. **[Script](https://github.com/lvwerra/trl/blob/main/examples/sentiment/scripts/gpt-neox-20b_peft/merge_peft_adapter.py)** - Merging of the adapter layers into the base model’s weights and storing these on the hub.

3. **[Script](https://github.com/lvwerra/trl/blob/main/examples/sentiment/scripts/gpt-neox-20b_peft/gpt-neo-20b_sentiment_peft.py)** - Sentiment fine-tuning of a Low Rank Adapter to create positive reviews.

We tested these steps on a 24GB NVIDIA 4090 GPU. While it is possible to perform the entire training run on a 24GB GPU, the full training runs were undertaken on a single A100 on the 🤗 research cluster.

The first step in the training process was fine-tuning the pretrained model. Typically this would require several high-end 80GB A100 GPUs, so we chose to train a low rank adapter instead. We treated this as a causal language modeling task and trained for one epoch on examples from the [imdb](https://huggingface.co./datasets/imdb) dataset, which features movie reviews and labels indicating whether they are of positive or negative sentiment.

|  |

|:--:|

| <b> Training loss of a gpt-neox-20b model during one epoch of training on the imdb dataset</b>|

In order to take the adapted model and perform further fine-tuning with RL, we first needed to merge the adapter weights into the base model. This was achieved by loading the pretrained model and the adapter in 16-bit floating point and summing the adapter weights with the base weight matrices (with the appropriate scaling applied).
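The merge itself is a simple matrix update, sketched here in plain Python on a tiny example. The scaling factor `alpha / rank` follows the usual LoRA convention and is an assumption about what “appropriate scaling” refers to. The actual merge script linked above works on the real model weights in 16-bit floating point.

```python
def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


def merge_lora(W, A, B, alpha, rank):
    """Return W + (alpha / rank) * (B @ A), the weight of the merged layer."""
    scaling = alpha / rank
    delta = matmul(B, A)  # full-size update reconstructed from the two small matrices
    return [
        [w + scaling * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(W, delta)
    ]


W = [[1.0, 2.0], [3.0, 4.0]]  # frozen base weight (d_out x d_in)
A = [[0.5, -0.5]]             # rank x d_in
B = [[1.0], [2.0]]            # d_out x rank
merged = merge_lora(W, A, B, alpha=2, rank=1)
print(merged)
```

After merging, the layer is an ordinary dense layer again, so a fresh adapter can be stacked on top of it for the RL stage.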

Finally, we could then fine-tune another low-rank adapter, on top of the frozen imdb-finetuned model. We use an [imdb sentiment classifier](https://huggingface.co./lvwerra/distilbert-imdb) to provide the rewards for the RL algorithm.

|  |

|:--:|

| <b> Mean reward during RL fine-tuning of a peft-adapted 20B parameter model to generate positive movie reviews.</b>|

The full Weights and Biases report is available for this experiment [here](https://wandb.ai/edbeeching/trl/runs/l8e7uwm6?workspace=user-edbeeching), if you want to check out more plots and text generations.

## Conclusion

We have implemented a new functionality in `trl` that allows users to fine-tune large language models using RLHF at a reasonable cost by leveraging the `peft` and `bitsandbytes` libraries. We demonstrated that fine-tuning `gpt-neox-20b` (40GB in `bfloat16`!) on a 24GB consumer GPU is possible, and we expect that this integration will be widely used by the community to fine-tune larger models with RLHF and share great artifacts.

We have identified some interesting directions for the next steps to push the limits of this integration:

- *How will this scale in the multi-GPU setting?* We’ll mainly explore how this integration scales with respect to the number of GPUs, and whether it is possible to apply Data Parallelism out-of-the-box or whether it will require new features in any of the involved libraries.

- *What tools can we leverage to increase training speed?* We have observed that the main downside of this integration is the overall training speed. In the future we would be keen to explore the possible directions to make the training much faster.

## References

- parallelism paradigms: [https://huggingface.co./docs/transformers/v4.17.0/en/parallelism](https://huggingface.co./docs/transformers/v4.17.0/en/parallelism)

- 8-bit integration in `transformers`: [https://huggingface.co./blog/hf-bitsandbytes-integration](https://huggingface.co./blog/hf-bitsandbytes-integration)

- LLM.int8 paper: [https://arxiv.org/abs/2208.07339](https://arxiv.org/abs/2208.07339)

- Gradient checkpointing explained: [https://docs.aws.amazon.com/sagemaker/latest/dg/model-parallel-extended-features-pytorch-activation-checkpointing.html](https://docs.aws.amazon.com/sagemaker/latest/dg/model-parallel-extended-features-pytorch-activation-checkpointing.html) | [

[

"llm",

"implementation",

"optimization",