---
license: apache-2.0
widget:
- text: >-
    Converts a simple image description into a prompt. Prompts are formatted
    as multiple related tags separated by commas, plus you can use () to
    increase the weight, [] to decrease the weight, or use a number to specify
    the weight. You should add appropriate words to make the images described
    in the prompt more aesthetically pleasing, but make sure there is a
    correlation between the input and output.

    ### Input: 1 girl

    ### Output:
tags:
- pytorch
- transformers
- text-generation
---

BeautifulPrompt-v2

Brief Introduction

We release an automatic prompt generation model: enter an extremely simple prompt and get back a version optimized by the language model, making it easier to generate attractive images. Compared with v1, this version performs better on complex scenes and adds the ability to generate weights (for use with sd-webui).

- GitHub: EasyNLP
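To make the weight syntax concrete, here is a minimal sketch of how a generated prompt could be read back into tags and weights. It assumes the sd-webui emphasis convention, in which each `()` layer multiplies a tag's weight by 1.1, each `[]` layer divides it by 1.1, and `(tag:1.3)` sets the weight explicitly. `tag_weight` and `parse_prompt` are hypothetical helpers written for this illustration and are not part of the model or of EasyNLP. The sketch splits on commas, so it does not handle weighted groups that themselves contain commas.

```python
import re
from typing import List, Tuple

# Assumption: sd-webui emphasis rules, 1.1 per bracket layer.
BRACKET_FACTOR = 1.1

def tag_weight(tag: str) -> Tuple[str, float]:
    """Return (bare_tag, weight) for a single comma-separated tag."""
    tag = tag.strip()
    # Explicit numeric weight, e.g. "(blue eyes:1.3)".
    m = re.fullmatch(r"\(([^()\[\]:]+):\s*([0-9]*\.?[0-9]+)\)", tag)
    if m:
        return m.group(1).strip(), float(m.group(2))
    weight = 1.0
    # Peel matching bracket layers from the outside in.
    while len(tag) >= 2:
        if tag[0] == "(" and tag[-1] == ")":
            weight *= BRACKET_FACTOR
        elif tag[0] == "[" and tag[-1] == "]":
            weight /= BRACKET_FACTOR
        else:
            break
        tag = tag[1:-1].strip()
    return tag, round(weight, 4)

def parse_prompt(prompt: str) -> List[Tuple[str, float]]:
    """Split a prompt on commas and compute the weight of each tag."""
    return [tag_weight(t) for t in prompt.split(",") if t.strip()]

# Hand-written example string, not real model output.
example = "1 girl, (masterpiece), [blurry], (blue eyes:1.3), ((detailed face))"
for name, weight in parse_prompt(example):
    print(f"{name}: {weight}")
```

A parser like this is only useful for inspecting or filtering prompts; sd-webui interprets the syntax itself, so the generated prompt can be pasted in unchanged.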

Usage

import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

MODEL_ID = 'alibaba-pai/pai-bloom-1b1-text2prompt-sd-v2'

# Use the GPU when one is available; otherwise fall back to the CPU.
device = 'cuda' if torch.cuda.is_available() else 'cpu'

tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
model = AutoModelForCausalLM.from_pretrained(MODEL_ID).eval().to(device)

raw_prompt = '1 girl'

# Instruction template for v2; keep the wording unchanged.
TEMPLATE_V2 = 'Converts a simple image description into a prompt. \
Prompts are formatted as multiple related tags separated by commas, plus you can use () to increase the weight, [] to decrease the weight, \
or use a number to specify the weight. You should add appropriate words to make the images described in the prompt more aesthetically pleasing, \
but make sure there is a correlation between the input and output.\n\
### Input: {raw_prompt}\n### Output:'

# Avoid shadowing the built-in input().
prompt_text = TEMPLATE_V2.format(raw_prompt=raw_prompt)
input_ids = tokenizer.encode(prompt_text, return_tensors='pt').to(device)

# Sample five candidate prompts for the same input.
outputs = model.generate(
    input_ids,
    max_new_tokens=384,
    do_sample=True,
    temperature=0.9,
    top_k=50,
    top_p=0.95,
    repetition_penalty=1.1,
    num_return_sequences=5)

# Drop the echoed instruction tokens and decode only the generated part.
prompts = tokenizer.batch_decode(outputs[:, input_ids.size(1):], skip_special_tokens=True)
prompts = [p.strip() for p in prompts]

print(prompts)
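Because the call above samples five candidates, some may repeat tags or duplicate one another. The following is a minimal post-processing sketch, not part of the original usage example: `clean_prompts` is a hypothetical helper that drops empty candidates, repeated tags within a candidate, and identical candidates. It compares tags case-insensitively and splits on commas, so weighted groups that contain commas would need more careful handling.

```python
from typing import List

def clean_prompts(candidates: List[str]) -> List[str]:
    """Drop empty or duplicate candidates and repeated tags within each one."""
    seen_prompts = set()
    cleaned = []
    for candidate in candidates:
        seen_tags = set()
        tags = []
        for tag in candidate.split(","):
            tag = tag.strip()
            key = tag.lower()
            # Keep only the first occurrence of each tag, preserving order.
            if tag and key not in seen_tags:
                seen_tags.add(key)
                tags.append(tag)
        prompt = ", ".join(tags)
        if prompt and prompt.lower() not in seen_prompts:
            seen_prompts.add(prompt.lower())
            cleaned.append(prompt)
    return cleaned

# Stand-in strings for illustration, not real model output.
sample = ["1 girl, smile, smile, long hair", "1 girl, smile, long hair", "  "]
print(clean_prompts(sample))
```

In the usage example this would be applied as `clean_prompts(prompts)` before passing the results to an image generator.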

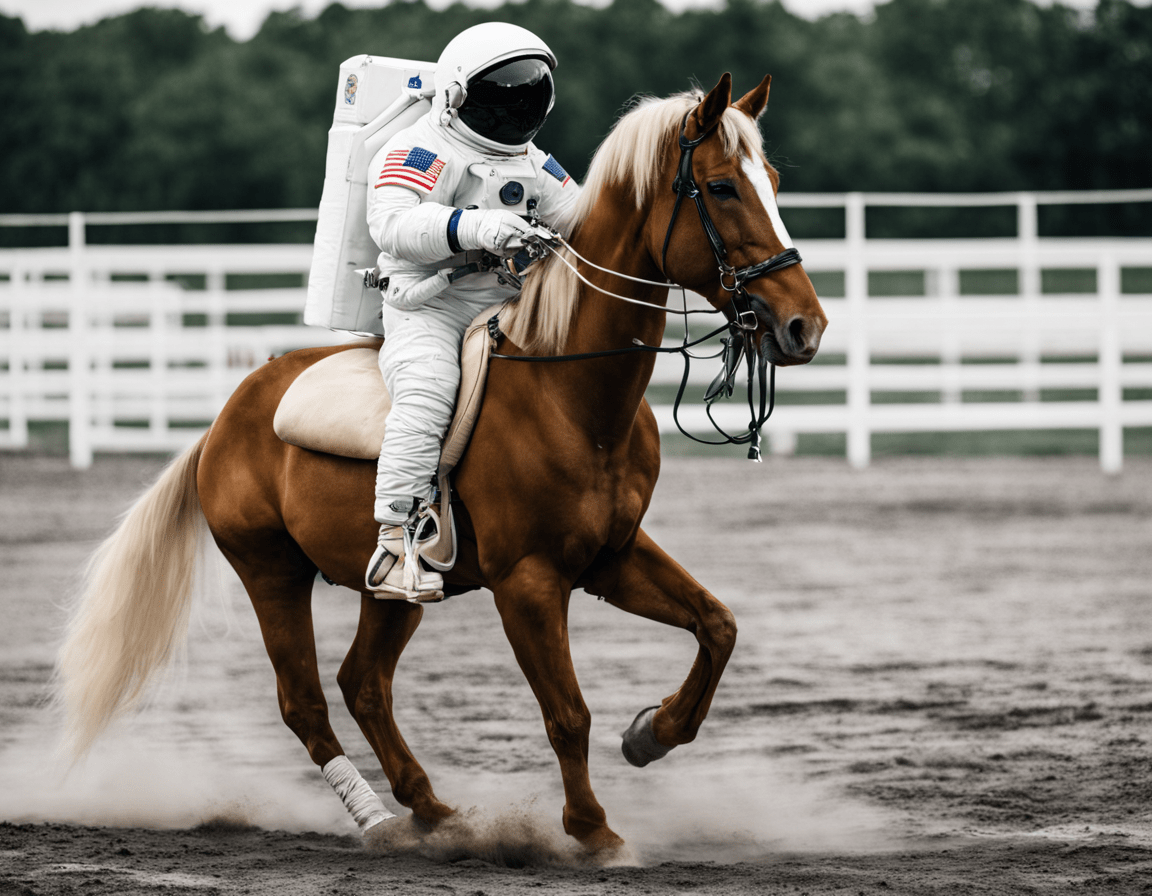

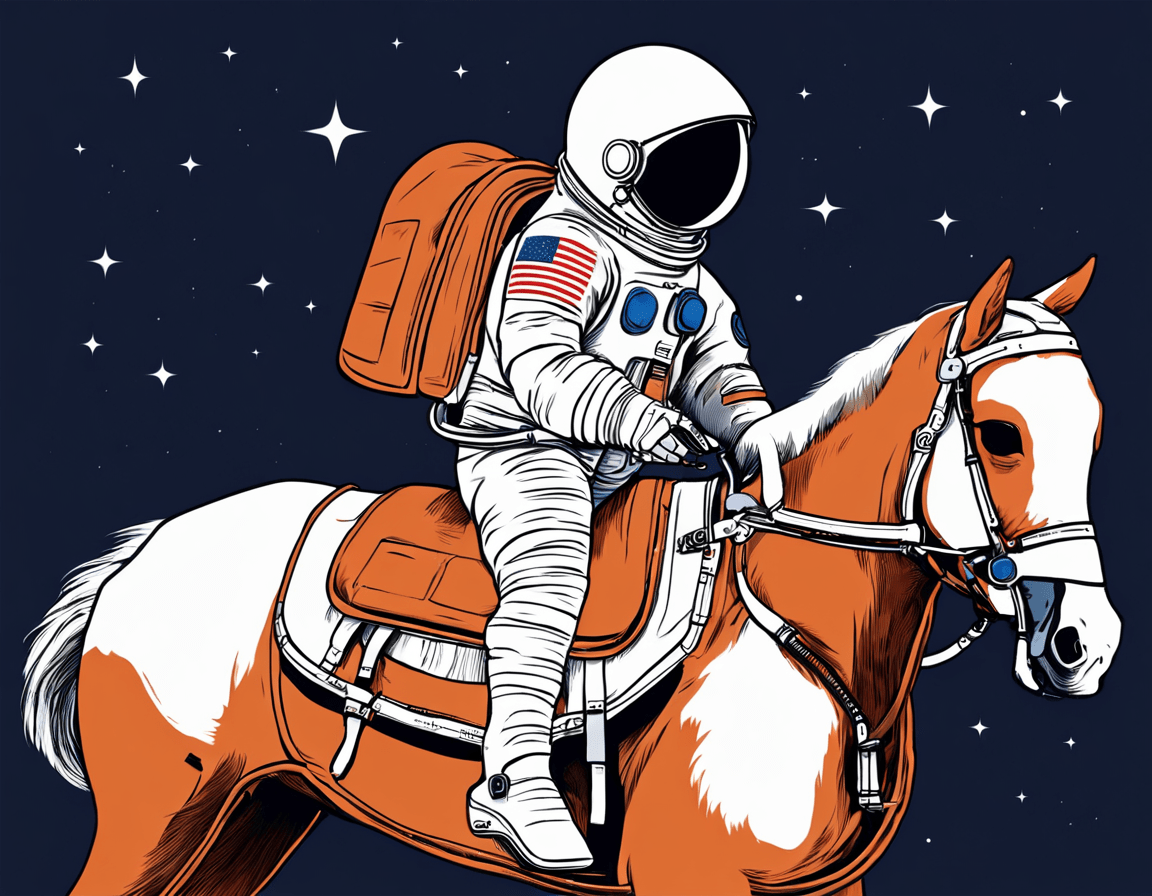

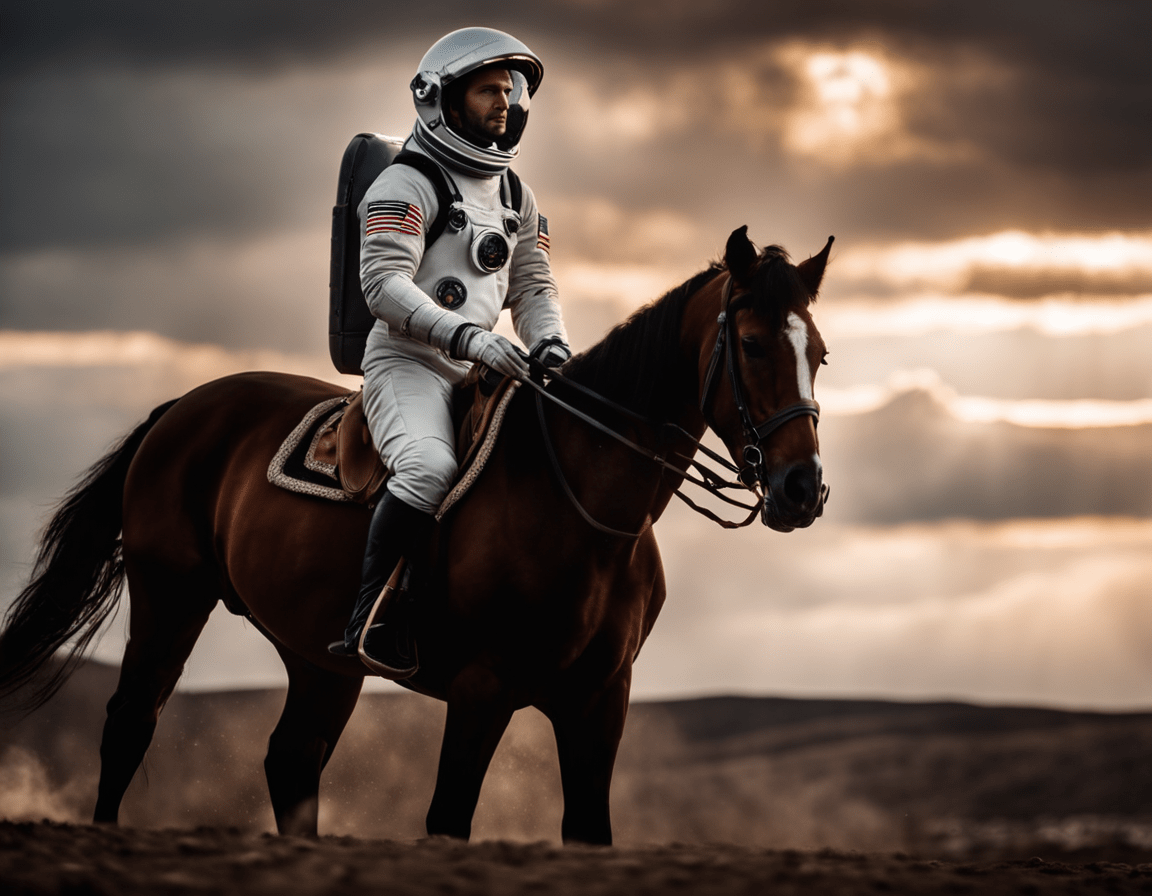

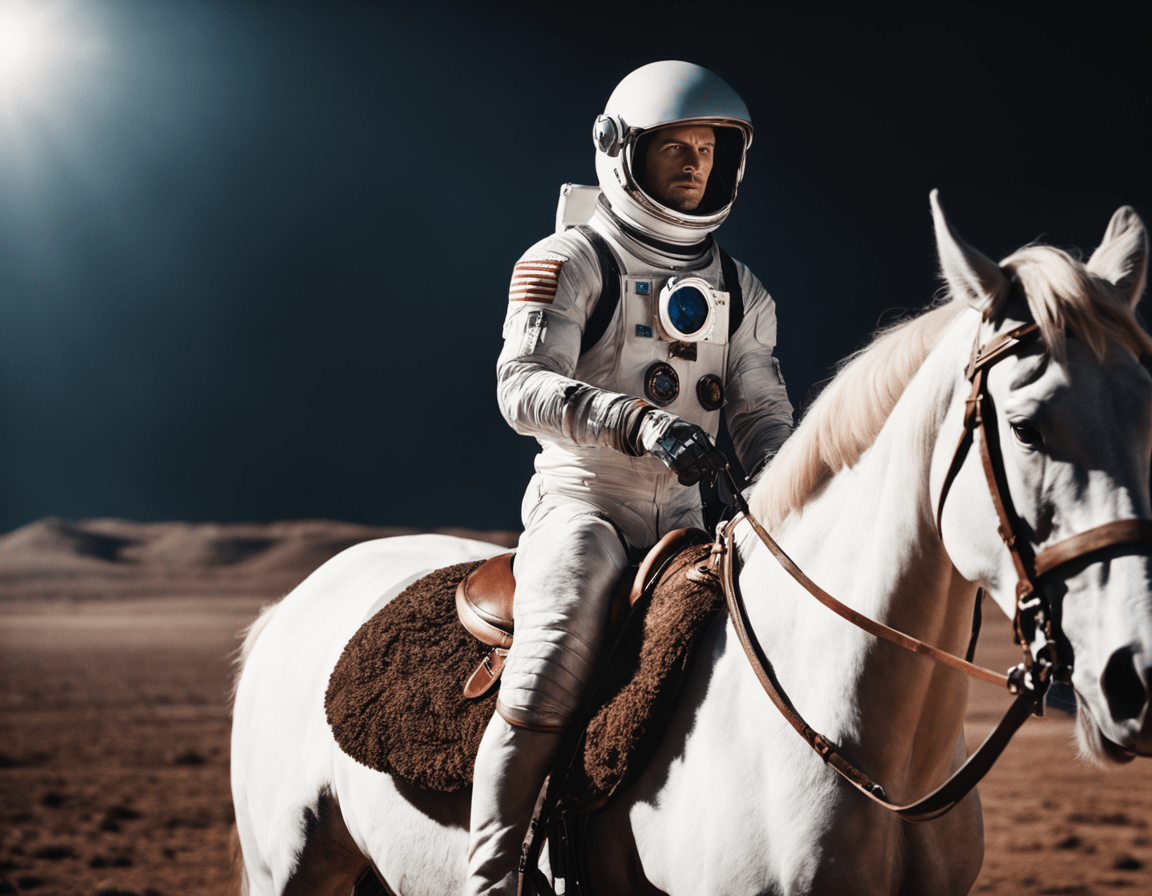

作品展示 Gallery

generated by sd-xl-1.0

Notice for Use

Use of this model is subject to the AIGC model open-source special terms. Please read that document carefully and abide by its terms before using the model.