CogAgent

🌐 GitHub | 🤗 Hugging Face Space | 📄 Technical Report | 📜 arXiv paper

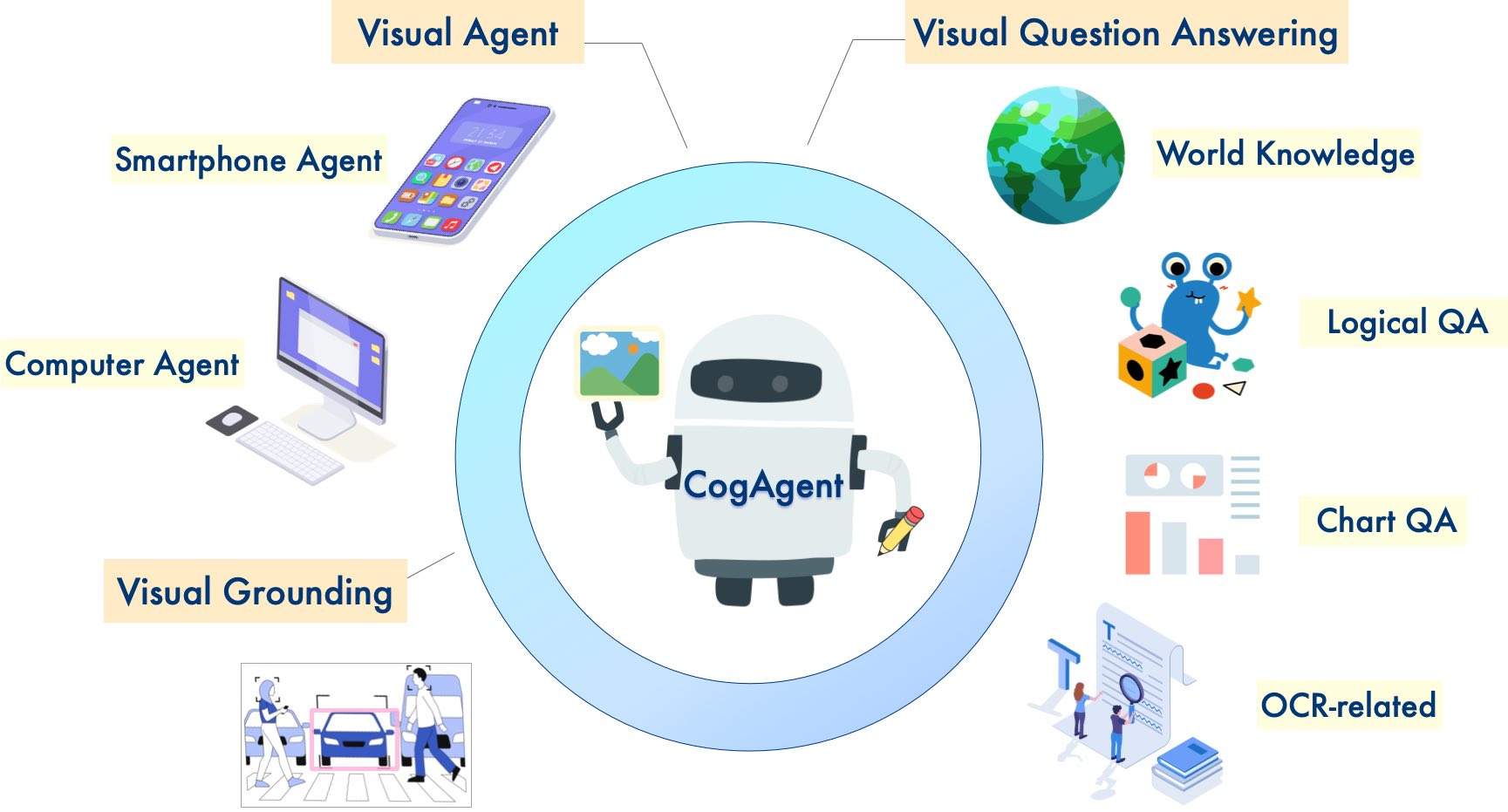

About the Model

The CogAgent-9B-20241220 model is based on GLM-4V-9B, a bilingual open-source VLM base model. Through data collection and optimization, multi-stage training, and strategy improvements, CogAgent-9B-20241220 achieves significant advancements in GUI perception, inference prediction accuracy, action space completeness, and task generalizability. The model supports bilingual (Chinese and English) interaction with both screenshots and language input.

This version of the CogAgent model has already been applied in ZhipuAI's GLM-PC product. We hope this release will assist researchers and developers in advancing the research and applications of GUI agents based on vision-language models.

Running the Model

Please refer to our GitHub for specific examples of running the model.

Input and Output

cogagent-9b-20241220 is an agent execution model rather than a conversational model. It does not support multi-turn conversations, but it does accept a history of previously executed steps. Below are guidelines on how users should format their input for the model and interpret the formatted output.

User Input

Please visit our GitHub for concrete running examples, and pay particular attention to the prompt concatenation code: the prompt format directly affects whether the model runs correctly. You can refer to app/client.py#L115 for how user input prompts are concatenated. A minimal version of the concatenation code is as follows:

```python
# identify_os(), task, history_grounded_op_funcs and history_actions are not
# defined in this snippet; see the GitHub repository for their definitions.
current_platform = identify_os()  # "Mac", "WIN" or "Mobile" (case-sensitive)
platform_str = f"(Platform: {current_platform})\n"
# "Action-Operation-Sensitive" can be replaced with another answer format.
format_str = "(Answer in Action-Operation-Sensitive format.)\n"
history_str = "\nHistory steps: "
for index, (grounded_op_func, action) in enumerate(zip(history_grounded_op_funcs, history_actions)):
    history_str += f"\n{index}. {grounded_op_func}\t{action}"  # numbering starts from 0
query = f"Task: {task}{history_str}\n{platform_str}{format_str}"  # be careful with each \n
```

With five history steps, the WIN platform and the Action-Operation answer format, the concatenated Python string looks like this:

```python
"Task: Search for doors, click doors on sale and filter by brands \"Mastercraft\".\nHistory steps: \n0. CLICK(box=[[352,102,786,139]], element_info='Search')\tLeft click on the search box located in the middle top of the screen next to the Menards logo.\n1. TYPE(box=[[352,102,786,139]], text='doors', element_info='Search')\tIn the search input box at the top, type 'doors'.\n2. CLICK(box=[[787,102,809,139]], element_info='SEARCH')\tLeft click on the magnifying glass icon next to the search bar to perform the search.\n3. SCROLL_DOWN(box=[[0,209,998,952]], step_count=5, element_info='[None]')\tScroll down the page to see the available doors.\n4. CLICK(box=[[280,708,710,809]], element_info='Doors on Sale')\tClick the \"Doors On Sale\" button in the middle of the page to view the doors that are currently on sale.\n(Platform: WIN)\n(Answer in Action-Operation format.)\n"
```
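To check a prompt builder against the example string, the concatenation snippet can be wrapped in a self-contained function. This is a minimal sketch, not the repository's code: build_query, VALID_PLATFORMS and the answer_format parameter are hypothetical names, and the platform check only enforces the three case-sensitive values listed in the snippet's comment. Called with the five history steps, the WIN platform and the Action-Operation format, it reproduces the example string exactly.

```python
from typing import Sequence, Tuple

# Hypothetical constant: the three platform values named in the snippet (case-sensitive).
VALID_PLATFORMS = {"Mac", "WIN", "Mobile"}


def build_query(
    task: str,
    history: Sequence[Tuple[str, str]],
    platform: str,
    answer_format: str = "Action-Operation-Sensitive",
) -> str:
    """Concatenate task, numbered history, platform and answer format into one query."""
    if platform not in VALID_PLATFORMS:
        raise ValueError(f"unknown platform {platform!r}; expected one of {sorted(VALID_PLATFORMS)}")
    history_str = "\nHistory steps: "
    for index, (grounded_op_func, action) in enumerate(history):
        # Each step: index (from 0), grounded operation, a tab, then the action description.
        history_str += f"\n{index}. {grounded_op_func}\t{action}"
    platform_str = f"(Platform: {platform})\n"
    format_str = f"(Answer in {answer_format} format.)\n"
    return f"Task: {task}{history_str}\n{platform_str}{format_str}"


# Usage: rebuild the example query from its parts.
EXAMPLE_TASK = 'Search for doors, click doors on sale and filter by brands "Mastercraft".'
EXAMPLE_HISTORY = [
    ("CLICK(box=[[352,102,786,139]], element_info='Search')",
     "Left click on the search box located in the middle top of the screen next to the Menards logo."),
    ("TYPE(box=[[352,102,786,139]], text='doors', element_info='Search')",
     "In the search input box at the top, type 'doors'."),
    ("CLICK(box=[[787,102,809,139]], element_info='SEARCH')",
     "Left click on the magnifying glass icon next to the search bar to perform the search."),
    ("SCROLL_DOWN(box=[[0,209,998,952]], step_count=5, element_info='[None]')",
     "Scroll down the page to see the available doors."),
    ("CLICK(box=[[280,708,710,809]], element_info='Doors on Sale')",
     'Click the "Doors On Sale" button in the middle of the page to view the doors that are currently on sale.'),
]
example_query = build_query(EXAMPLE_TASK, EXAMPLE_HISTORY, "WIN", answer_format="Action-Operation")
print(example_query)
```

Passing the history as one list of (grounded operation, action) pairs, rather than two parallel lists, avoids zip() silently dropping steps when the two lists have different lengths.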

For reasons of length, the fields are not described here; for the meaning and representation of each field, please refer to the GitHub repository.
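While the field semantics are documented on GitHub, the layout of each history entry is visible in the example: an index, an operation name with its arguments in parentheses, a tab, and a natural-language description. The sketch below splits entries back into those parts, for instance to log or inspect an execution history. It is an assumption-based parser inferred from this single example, not the repository's own parsing code; HistoryStep, parse_history and both regular expressions are hypothetical, and the integers in box=[[...]] are extracted without interpreting what the coordinates mean.

```python
import re
from typing import List, NamedTuple, Optional


class HistoryStep(NamedTuple):
    index: int
    op_name: str              # e.g. "CLICK", "TYPE", "SCROLL_DOWN"
    op_args: str              # raw text between the operation's parentheses
    box: Optional[List[int]]  # integers inside box=[[...]], uninterpreted; None if absent
    action: str               # natural-language description after the tab


# Layout assumed from the example only: "<index>. <OP>(<args>)\t<description>"
_STEP_RE = re.compile(r"^(\d+)\. ([A-Z_]+)\((.*?)\)\t(.*)$")
_BOX_RE = re.compile(r"box=\[\[([\d,\s]+)\]\]")


def parse_history(query: str) -> List[HistoryStep]:
    """Return the history steps found in a concatenated query string."""
    steps = []
    for line in query.splitlines():
        match = _STEP_RE.match(line)
        if match is None:
            continue  # task, "History steps:", platform and format lines
        index, op_name, op_args, action = match.groups()
        box_match = _BOX_RE.search(op_args)
        box = ([int(v) for v in box_match.group(1).split(",") if v.strip()]
               if box_match else None)
        steps.append(HistoryStep(int(index), op_name, op_args, box, action))
    return steps


# Usage on a shortened query with two steps adapted from the example.
sample_query = (
    "Task: Search for doors.\nHistory steps: "
    "\n0. CLICK(box=[[352,102,786,139]], element_info='Search')\tLeft click on the search box."
    "\n1. SCROLL_DOWN(box=[[0,209,998,952]], step_count=5, element_info='[None]')\tScroll down the page."
    "\n(Platform: WIN)\n(Answer in Action-Operation format.)\n"
)
for step in parse_history(sample_query):
    print(step.index, step.op_name, step.box, step.action)
```

The non-greedy argument group stops at the first ")" that is directly followed by a tab, so parentheses inside an argument such as element_info do not end the match early.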

Previous Work

In November 2023, we released the first generation of CogAgent. You can find related code and weights in the CogVLM & CogAgent Official Repository.

CogVLM (📖 Paper: "CogVLM: Visual Expert for Pretrained Language Models"). CogVLM is a powerful open-source vision-language model (VLM). CogVLM-17B has 10 billion vision parameters and 7 billion language parameters, supporting 490x490 resolution image understanding and multi-turn conversations. CogVLM-17B achieved state-of-the-art performance on 10 classic cross-modal benchmarks: NoCaps, Flickr30k captioning, RefCOCO, RefCOCO+, RefCOCOg, Visual7W, GQA, ScienceQA, VizWiz VQA, and TDIUC.

CogAgent (📖 Paper: "CogAgent: A Visual Language Model for GUI Agents"). CogAgent is an improved open-source vision-language model based on CogVLM. CogAgent-18B has 11 billion vision parameters and 7 billion language parameters, supporting image understanding at 1120x1120 resolution. Beyond CogVLM's capabilities, it also incorporates GUI agent capabilities. CogAgent-18B achieved state-of-the-art performance on 9 classic cross-modal benchmarks: VQAv2, OK-VQA, TextVQA, ST-VQA, ChartQA, InfoVQA, DocVQA, MM-Vet, and POPE. It significantly outperformed existing models on GUI operation datasets such as AITW and Mind2Web.

License

Please follow the Model License for using the model weights.

