Commit b16e4a7 · Parent(s): 5f808e8

update

Browse files- .gitattributes +10 -0

- README.md +19 -3

- all_results.json +3 -0

- config.json +3 -0

- generation_config.json +3 -0

- model.safetensors +3 -0

- special_tokens_map.json +3 -0

- tokenizer.json +3 -0

- tokenizer_config.json +3 -0

- train_results.json +3 -0

- trainer_log.jsonl +36 -0

- trainer_state.json +3 -0

- training_args.bin +3 -0

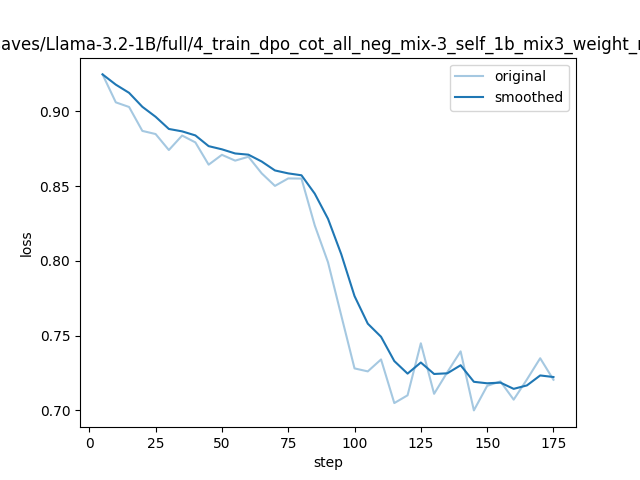

- training_loss.png +0 -0

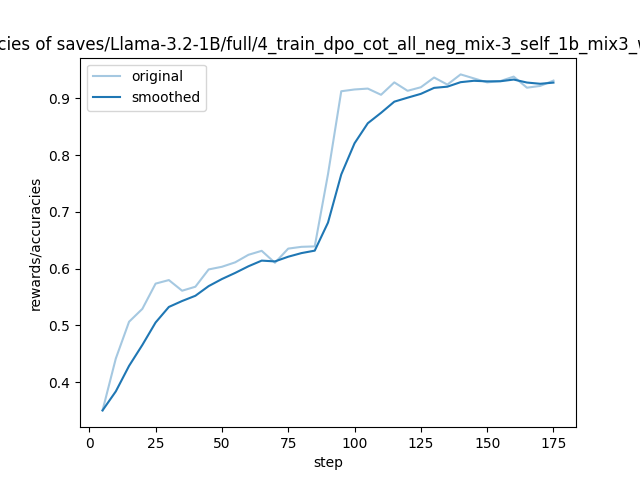

- training_rewards_accuracies.png +0 -0

.gitattributes (CHANGED)

    @@ -33,3 +33,13 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
     *.zip filter=lfs diff=lfs merge=lfs -text
     *.zst filter=lfs diff=lfs merge=lfs -text
     *tfevents* filter=lfs diff=lfs merge=lfs -text
    +training_args.bin filter=lfs diff=lfs merge=lfs -text
    +model.safetensors filter=lfs diff=lfs merge=lfs -text
    +config.json filter=lfs diff=lfs merge=lfs -text
    +generation_config.json filter=lfs diff=lfs merge=lfs -text
    +special_tokens_map.json filter=lfs diff=lfs merge=lfs -text
    +tokenizer_config.json filter=lfs diff=lfs merge=lfs -text
    +tokenizer.json filter=lfs diff=lfs merge=lfs -text
    +trainer_state.json filter=lfs diff=lfs merge=lfs -text
    +train_results.json filter=lfs diff=lfs merge=lfs -text
    +all_results.json filter=lfs diff=lfs merge=lfs -text
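
The ten added rules send each named training artifact through the Git LFS filter, in the same `<pattern> filter=lfs diff=lfs merge=lfs -text` form that `git lfs track` writes. That is why those files appear below as three-line LFS pointers, while `trainer_log.jsonl`, which no visible rule matches, appears as plain text. A minimal sketch of the matching, assuming only the patterns visible in this hunk (lines 1-32 of the file are not shown) and approximating gitattributes semantics with `fnmatch.fnmatchcase` on the base name. That approximation holds for slash-free patterns like these, but not for path patterns such as `saved_model/**/*`; `is_lfs_tracked` is a hypothetical helper, not part of any Git tool.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Only the patterns visible in the hunk above (lines 33-45); earlier lines are omitted.
LFS_PATTERNS = [
    "*.zip",
    "*.zst",
    "*tfevents*",
    "training_args.bin",
    "model.safetensors",
    "config.json",
    "generation_config.json",
    "special_tokens_map.json",
    "tokenizer_config.json",
    "tokenizer.json",
    "trainer_state.json",
    "train_results.json",
    "all_results.json",
]


def is_lfs_tracked(path: str) -> bool:
    """Approximate check: a slash-free gitattributes pattern matches the
    base name of a path at any directory depth."""
    name = PurePosixPath(path).name
    return any(fnmatchcase(name, pattern) for pattern in LFS_PATTERNS)


for path in ["model.safetensors", "README.md", "trainer_log.jsonl", "runs/events.out.tfevents.1"]:
    print(f"{path}: {'LFS' if is_lfs_tracked(path) else 'regular git'}")
```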

README.md (CHANGED)

    @@ -1,3 +1,19 @@
    ----
    -
    -
    +---
    +library_name: transformers
    +license: other
    +base_model: meta-llama/Llama-3.2-1B
    +tags:
    +- llama-factory
    +- full
    +- generated_from_trainer
    +model-index:
    +- name: GuardReasoner 1B
    +  results: []
    +---
    +
    +
    +# GuardReasoner 1B
    +
    +This model is a fine-tuned version of [meta-llama/Llama-3.2-1B](https://huggingface.co/meta-llama/Llama-3.2-1B) via R-SFT and HS-DPO.
    +
    +
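
The new README opens with a YAML front-matter block between `---` lines, which the Hugging Face Hub reads as model-card metadata (`library_name`, `license`, `base_model`, `tags`, `model-index`). A minimal standard-library sketch that pulls out only the top-level scalar fields; it is not a YAML parser (the `tags` list and the nested `model-index` entry are deliberately skipped), and `front_matter_scalars` is a hypothetical helper. A real tool would use a proper YAML library.

```python
README = """\
---
library_name: transformers
license: other
base_model: meta-llama/Llama-3.2-1B
tags:
- llama-factory
- full
- generated_from_trainer
model-index:
- name: GuardReasoner 1B
  results: []
---


# GuardReasoner 1B
"""


def front_matter_scalars(text: str) -> dict[str, str]:
    """Return top-level `key: value` pairs from a leading '---' ... '---' block.

    Simplified: list items, indented (nested) lines and keys with empty
    values are ignored.
    """
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}
    fields = {}
    for line in lines[1:]:
        if line.strip() == "---":  # closing delimiter
            break
        if line.startswith((" ", "-")) or ":" not in line:
            continue  # skip list items and nested lines
        key, _, value = line.partition(":")
        if value.strip():
            fields[key.strip()] = value.strip()
    return fields


meta = front_matter_scalars(README)
print(meta["base_model"])  # meta-llama/Llama-3.2-1B
```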

all_results.json (ADDED)

    @@ -0,0 +1,3 @@
    +version https://git-lfs.github.com/spec/v1
    +oid sha256:c1c01ba8e11f961977664cf17b13668dae7491c79ac8fc4d360ebe0d79ef7a08
    +size 257
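
Each ADDED `.json`, `.bin` and `.safetensors` file in this commit is committed as a three-line Git LFS pointer rather than its contents. Under the Git LFS v1 pointer format, `oid` is the SHA-256 of the file's bytes and `size` is its length in bytes, so `all_results.json` is a 257-byte file. A small sketch that produces a pointer of the same shape for arbitrary bytes (`lfs_pointer` is a hypothetical helper name):

```python
import hashlib


def lfs_pointer(data: bytes) -> str:
    """Build a Git LFS v1 pointer: version line, sha256 oid, then byte size."""
    oid = hashlib.sha256(data).hexdigest()
    return (
        "version https://git-lfs.github.com/spec/v1\n"
        f"oid sha256:{oid}\n"
        f"size {len(data)}\n"
    )


print(lfs_pointer(b""), end="")
```

Running it on the bytes of the real `all_results.json` should reproduce the pointer above exactly.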

config.json (ADDED)

    @@ -0,0 +1,3 @@
    +version https://git-lfs.github.com/spec/v1
    +oid sha256:aa4cc06647fff1ac36c45cb8cfc5d400cc8f58211e66c7ee0c8bb86b0f1804db
    +size 905

generation_config.json (ADDED)

    @@ -0,0 +1,3 @@
    +version https://git-lfs.github.com/spec/v1
    +oid sha256:e6bd0b30e743618c41de600b71fe491ba7060cd6f728d737371e55c6cd544352
    +size 180

model.safetensors (ADDED)

    @@ -0,0 +1,3 @@
    +version https://git-lfs.github.com/spec/v1
    +oid sha256:936dfa3336d3d0f390a69baaa669025f022f400d721e6a11c09717d856c4e866
    +size 2471645608
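
The pointer records `model.safetensors` at 2,471,645,608 bytes (about 2.47 GB). As a rough sanity check, assume the checkpoint keeps the standard Llama-3.2-1B shape, ties its input and output embeddings, and stores 2 bytes per parameter (e.g. bf16). The hyperparameters below are taken from the public base model, not from this commit's `config.json`, whose contents appear here only as an LFS pointer. Under those assumptions the tensor data accounts for all but about 17 KB of the file, a remainder small enough to be the safetensors JSON header.

```python
# Assumed Llama-3.2-1B hyperparameters (public base model, not read from this commit).
VOCAB = 128_256
HIDDEN = 2_048
LAYERS = 16
INTERMEDIATE = 8_192
HEADS = 32
KV_HEADS = 8
HEAD_DIM = HIDDEN // HEADS  # 64


def llama_param_count() -> int:
    embed = VOCAB * HIDDEN  # shared with lm_head (tied embeddings assumed)
    attn = 2 * HIDDEN * HIDDEN + 2 * HIDDEN * (KV_HEADS * HEAD_DIM)  # q,o + k,v projections
    mlp = 3 * HIDDEN * INTERMEDIATE  # gate, up and down projections
    norms = 2 * HIDDEN  # two RMSNorm weight vectors per layer
    final_norm = HIDDEN
    return embed + LAYERS * (attn + mlp + norms) + final_norm


params = llama_param_count()
file_size = 2_471_645_608  # from the LFS pointer above
tensor_bytes = 2 * params  # 2 bytes per parameter (bf16/fp16 assumed)
print(f"params={params:,}  tensor bytes={tensor_bytes:,}  remainder={file_size - tensor_bytes:,}")
```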

special_tokens_map.json (ADDED)

    @@ -0,0 +1,3 @@
    +version https://git-lfs.github.com/spec/v1
    +oid sha256:208d307467cabecb563e033fdb478b7c11a1bc6eca9a9c761bf6a303ccfce4c1
    +size 439

tokenizer.json (ADDED)

    @@ -0,0 +1,3 @@
    +version https://git-lfs.github.com/spec/v1
    +oid sha256:6b9e4e7fb171f92fd137b777cc2714bf87d11576700a1dcd7a399e7bbe39537b
    +size 17209920

tokenizer_config.json (ADDED)

    @@ -0,0 +1,3 @@
    +version https://git-lfs.github.com/spec/v1
    +oid sha256:fdd2ab776c0ad7171e4ba6ed31aba7c04843bc531da2875d91f49b35db660f4a
    +size 51269

train_results.json (ADDED)

    @@ -0,0 +1,3 @@
    +version https://git-lfs.github.com/spec/v1
    +oid sha256:c1c01ba8e11f961977664cf17b13668dae7491c79ac8fc4d360ebe0d79ef7a08
    +size 257
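
The `train_results.json` pointer is identical to the one for `all_results.json`: same `oid`, same 257-byte size. Because `oid` is a SHA-256 of the content, the two files are byte-identical. A short sketch that parses both pointers and compares them (`parse_lfs_pointer` is a hypothetical helper; it only splits each `key value` line):

```python
def parse_lfs_pointer(text: str) -> dict[str, str]:
    """Split each 'key value' line of an LFS pointer into a dict entry."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    return fields


ALL_RESULTS = """\
version https://git-lfs.github.com/spec/v1
oid sha256:c1c01ba8e11f961977664cf17b13668dae7491c79ac8fc4d360ebe0d79ef7a08
size 257
"""

TRAIN_RESULTS = """\
version https://git-lfs.github.com/spec/v1
oid sha256:c1c01ba8e11f961977664cf17b13668dae7491c79ac8fc4d360ebe0d79ef7a08
size 257
"""

a, b = parse_lfs_pointer(ALL_RESULTS), parse_lfs_pointer(TRAIN_RESULTS)
# Same oid (SHA-256 of the content) and same size imply identical file contents.
print(a["oid"] == b["oid"] and a["size"] == b["size"])  # True
```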

trainer_log.jsonl (ADDED)

    @@ -0,0 +1,36 @@
    +{"current_steps": 5, "total_steps": 176, "loss": 0.9248, "accuracy": 0.3500000238418579, "lr": 4.990049701020116e-06, "epoch": 0.056657223796033995, "percentage": 2.84, "elapsed_time": "0:02:05", "remaining_time": "1:11:19", "throughput": 13431.65, "total_tokens": 1680560}
    +{"current_steps": 10, "total_steps": 176, "loss": 0.9061, "accuracy": 0.4414062798023224, "lr": 4.96027801084029e-06, "epoch": 0.11331444759206799, "percentage": 5.68, "elapsed_time": "0:03:47", "remaining_time": "1:02:56", "throughput": 14560.3, "total_tokens": 3312576}
    +{"current_steps": 15, "total_steps": 176, "loss": 0.903, "accuracy": 0.5062500238418579, "lr": 4.910921919235268e-06, "epoch": 0.16997167138810199, "percentage": 8.52, "elapsed_time": "0:05:32", "remaining_time": "0:59:26", "throughput": 14950.39, "total_tokens": 4967920}
    +{"current_steps": 20, "total_steps": 176, "loss": 0.887, "accuracy": 0.5289062261581421, "lr": 4.842374312499405e-06, "epoch": 0.22662889518413598, "percentage": 11.36, "elapsed_time": "0:07:15", "remaining_time": "0:56:36", "throughput": 15245.78, "total_tokens": 6638032}
    +{"current_steps": 25, "total_steps": 176, "loss": 0.8849, "accuracy": 0.573437511920929, "lr": 4.7551808459778035e-06, "epoch": 0.28328611898017, "percentage": 14.2, "elapsed_time": "0:08:55", "remaining_time": "0:53:55", "throughput": 15497.14, "total_tokens": 8300864}
    +{"current_steps": 30, "total_steps": 176, "loss": 0.8742, "accuracy": 0.5796874761581421, "lr": 4.6500356005192514e-06, "epoch": 0.33994334277620397, "percentage": 17.05, "elapsed_time": "0:10:38", "remaining_time": "0:51:46", "throughput": 15623.98, "total_tokens": 9974336}
    +{"current_steps": 35, "total_steps": 176, "loss": 0.8839, "accuracy": 0.5609375238418579, "lr": 4.527775557426648e-06, "epoch": 0.39660056657223797, "percentage": 19.89, "elapsed_time": "0:12:22", "remaining_time": "0:49:50", "throughput": 15676.07, "total_tokens": 11635904}
    +{"current_steps": 40, "total_steps": 176, "loss": 0.8793, "accuracy": 0.5679687857627869, "lr": 4.3893739358856465e-06, "epoch": 0.45325779036827196, "percentage": 22.73, "elapsed_time": "0:14:01", "remaining_time": "0:47:40", "throughput": 15848.72, "total_tokens": 13334624}
    +{"current_steps": 45, "total_steps": 176, "loss": 0.8644, "accuracy": 0.598437488079071, "lr": 4.235932445907152e-06, "epoch": 0.509915014164306, "percentage": 25.57, "elapsed_time": "0:15:46", "remaining_time": "0:45:54", "throughput": 15875.54, "total_tokens": 15020256}
    +{"current_steps": 50, "total_steps": 176, "loss": 0.871, "accuracy": 0.6031250357627869, "lr": 4.06867251845213e-06, "epoch": 0.56657223796034, "percentage": 28.41, "elapsed_time": "0:17:28", "remaining_time": "0:44:02", "throughput": 15881.0, "total_tokens": 16652784}
    +{"current_steps": 55, "total_steps": 176, "loss": 0.8671, "accuracy": 0.6109375357627869, "lr": 3.888925582549006e-06, "epoch": 0.623229461756374, "percentage": 31.25, "elapsed_time": "0:19:12", "remaining_time": "0:42:14", "throughput": 15892.05, "total_tokens": 18309696}
    +{"current_steps": 60, "total_steps": 176, "loss": 0.8697, "accuracy": 0.624218761920929, "lr": 3.6981224668001427e-06, "epoch": 0.6798866855524079, "percentage": 34.09, "elapsed_time": "0:20:55", "remaining_time": "0:40:26", "throughput": 15926.9, "total_tokens": 19989248}
    +{"current_steps": 65, "total_steps": 176, "loss": 0.8586, "accuracy": 0.6312500238418579, "lr": 3.4977820096439353e-06, "epoch": 0.7365439093484419, "percentage": 36.93, "elapsed_time": "0:22:37", "remaining_time": "0:38:38", "throughput": 15957.2, "total_tokens": 21666400}
    +{"current_steps": 70, "total_steps": 176, "loss": 0.8502, "accuracy": 0.610156238079071, "lr": 3.289498969037563e-06, "epoch": 0.7932011331444759, "percentage": 39.77, "elapsed_time": "0:24:19", "remaining_time": "0:36:49", "throughput": 15996.61, "total_tokens": 23339808}
    +{"current_steps": 75, "total_steps": 176, "loss": 0.8552, "accuracy": 0.6351562738418579, "lr": 3.074931327802202e-06, "epoch": 0.8498583569405099, "percentage": 42.61, "elapsed_time": "0:26:02", "remaining_time": "0:35:04", "throughput": 16020.85, "total_tokens": 25040384}
    +{"current_steps": 80, "total_steps": 176, "loss": 0.8551, "accuracy": 0.6382812261581421, "lr": 2.8557870956832135e-06, "epoch": 0.9065155807365439, "percentage": 45.45, "elapsed_time": "0:27:44", "remaining_time": "0:33:17", "throughput": 16050.37, "total_tokens": 26711328}
    +{"current_steps": 85, "total_steps": 176, "loss": 0.8238, "accuracy": 0.6390625238418579, "lr": 2.633810713184038e-06, "epoch": 0.9631728045325779, "percentage": 48.3, "elapsed_time": "0:29:23", "remaining_time": "0:31:27", "throughput": 16065.84, "total_tokens": 28331408}
    +{"current_steps": 90, "total_steps": 176, "loss": 0.7991, "accuracy": 0.7664062976837158, "lr": 2.410769165402549e-06, "epoch": 1.019830028328612, "percentage": 51.14, "elapsed_time": "0:31:05", "remaining_time": "0:29:42", "throughput": 16058.29, "total_tokens": 29960176}
    +{"current_steps": 95, "total_steps": 176, "loss": 0.7635, "accuracy": 0.9125000238418579, "lr": 2.1884379164070353e-06, "epoch": 1.0764872521246458, "percentage": 53.98, "elapsed_time": "0:32:49", "remaining_time": "0:27:58", "throughput": 16047.45, "total_tokens": 31600560}
    +{"current_steps": 100, "total_steps": 176, "loss": 0.7281, "accuracy": 0.9156250357627869, "lr": 1.9685867761175584e-06, "epoch": 1.13314447592068, "percentage": 56.82, "elapsed_time": "0:34:33", "remaining_time": "0:26:15", "throughput": 16041.98, "total_tokens": 33264656}
    +{"current_steps": 105, "total_steps": 176, "loss": 0.7261, "accuracy": 0.9171874523162842, "lr": 1.7529658121956778e-06, "epoch": 1.1898016997167138, "percentage": 59.66, "elapsed_time": "0:36:17", "remaining_time": "0:24:32", "throughput": 16040.86, "total_tokens": 34926400}
    +{"current_steps": 110, "total_steps": 176, "loss": 0.7341, "accuracy": 0.90625, "lr": 1.5432914190872757e-06, "epoch": 1.246458923512748, "percentage": 62.5, "elapsed_time": "0:38:02", "remaining_time": "0:22:49", "throughput": 16049.33, "total_tokens": 36625152}
    +{"current_steps": 115, "total_steps": 176, "loss": 0.7049, "accuracy": 0.9281249642372131, "lr": 1.3412326551122365e-06, "epoch": 1.3031161473087818, "percentage": 65.34, "elapsed_time": "0:39:44", "remaining_time": "0:21:04", "throughput": 16061.83, "total_tokens": 38291568}
    +{"current_steps": 120, "total_steps": 176, "loss": 0.7101, "accuracy": 0.9132812023162842, "lr": 1.148397956361007e-06, "epoch": 1.3597733711048159, "percentage": 68.18, "elapsed_time": "0:41:26", "remaining_time": "0:19:20", "throughput": 16074.93, "total_tokens": 39968640}
    +{"current_steps": 125, "total_steps": 176, "loss": 0.7449, "accuracy": 0.9195312857627869, "lr": 9.663223331586018e-07, "epoch": 1.41643059490085, "percentage": 71.02, "elapsed_time": "0:43:13", "remaining_time": "0:17:38", "throughput": 16049.8, "total_tokens": 41625872}
    +{"current_steps": 130, "total_steps": 176, "loss": 0.7111, "accuracy": 0.9367187023162842, "lr": 7.964551510152721e-07, "epoch": 1.4730878186968839, "percentage": 73.86, "elapsed_time": "0:45:02", "remaining_time": "0:15:56", "throughput": 16013.74, "total_tokens": 43275232}
    +{"current_steps": 135, "total_steps": 176, "loss": 0.7256, "accuracy": 0.9242187738418579, "lr": 6.401485933303761e-07, "epoch": 1.5297450424929178, "percentage": 76.7, "elapsed_time": "0:46:44", "remaining_time": "0:14:11", "throughput": 16028.67, "total_tokens": 44948016}
    +{"current_steps": 140, "total_steps": 176, "loss": 0.7395, "accuracy": 0.9421875476837158, "lr": 4.986468976890993e-07, "epoch": 1.5864022662889519, "percentage": 79.55, "elapsed_time": "0:48:27", "remaining_time": "0:12:27", "throughput": 16037.06, "total_tokens": 46625616}
    +{"current_steps": 145, "total_steps": 176, "loss": 0.7, "accuracy": 0.9351562261581421, "lr": 3.7307645143367324e-07, "epoch": 1.643059490084986, "percentage": 82.39, "elapsed_time": "0:50:11", "remaining_time": "0:10:43", "throughput": 16042.02, "total_tokens": 48305888}
    +{"current_steps": 150, "total_steps": 176, "loss": 0.7164, "accuracy": 0.9281250238418579, "lr": 2.644368253507218e-07, "epoch": 1.6997167138810199, "percentage": 85.23, "elapsed_time": "0:51:52", "remaining_time": "0:08:59", "throughput": 16062.91, "total_tokens": 49996656}
    +{"current_steps": 155, "total_steps": 176, "loss": 0.7194, "accuracy": 0.9304687976837158, "lr": 1.7359281684871609e-07, "epoch": 1.7563739376770537, "percentage": 88.07, "elapsed_time": "0:53:34", "remaining_time": "0:07:15", "throughput": 16085.16, "total_tokens":51713152}
    +{"current_steps": 160, "total_steps": 176, "loss": 0.7072, "accuracy": 0.938281238079071, "lr": 1.0126756596375687e-07, "epoch": 1.8130311614730878, "percentage": 90.91, "elapsed_time": "0:55:16", "remaining_time": "0:05:31", "throughput": 16082.49, "total_tokens": 53343936}
    +{"current_steps": 165, "total_steps": 176, "loss": 0.7207, "accuracy": 0.918749988079071, "lr": 4.8036798991923925e-08, "epoch": 1.869688385269122, "percentage": 93.75, "elapsed_time": "0:56:59", "remaining_time": "0:03:47", "throughput": 16084.57, "total_tokens": 55005216}
    +{"current_steps": 170, "total_steps": 176, "loss": 0.7349, "accuracy": 0.921875, "lr": 1.4324245570256634e-08, "epoch": 1.9263456090651558, "percentage": 96.59, "elapsed_time": "0:58:45", "remaining_time": "0:02:04", "throughput": 16073.59, "total_tokens": 56660496}
    +{"current_steps": 175, "total_steps": 176, "loss": 0.7205, "accuracy": 0.9312500357627869, "lr": 3.982656874917945e-10, "epoch": 1.9830028328611897, "percentage": 99.43, "elapsed_time": "1:00:28", "remaining_time": "0:00:20", "throughput": 16069.43, "total_tokens": 58302912}
    +{"current_steps": 176, "total_steps": 176, "epoch": 1.9943342776203967, "percentage": 100.0, "elapsed_time": "1:00:59", "remaining_time": "0:00:00", "throughput": 16023.95, "total_tokens": 58638160}
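
Each `trainer_log.jsonl` record is one JSON object written every 5 steps. Given the HS-DPO stage named in the README and the `training_rewards_accuracies.png` plot, `loss` and `accuracy` appear to be the DPO loss and reward accuracy. The last record (step 176) is an end-of-run summary without them. Two patterns stand out. First, `accuracy` stays at or below about 0.64 through step 85, then exceeds 0.9 from step 95 onward, once `epoch` passes 1.0. Second, `lr` is consistent with a cosine decay to zero with no warmup from a peak of 5e-6. That peak is inferred from the logged values, not stated in the commit, and the `0.5 * (1 + cos(pi * step / total))` shape is an assumption that the check below tests rather than a documented setting. The sketch uses a few records copied from the log, trimmed to the fields it needs.

```python
import json
import math
from collections import defaultdict
from statistics import mean

# Records copied from trainer_log.jsonl above, trimmed to the fields used here.
LOG_LINES = """\
{"current_steps": 5, "total_steps": 176, "loss": 0.9248, "accuracy": 0.3500000238418579, "lr": 4.990049701020116e-06, "epoch": 0.056657223796033995}
{"current_steps": 85, "total_steps": 176, "loss": 0.8238, "accuracy": 0.6390625238418579, "lr": 2.633810713184038e-06, "epoch": 0.9631728045325779}
{"current_steps": 90, "total_steps": 176, "loss": 0.7991, "accuracy": 0.7664062976837158, "lr": 2.410769165402549e-06, "epoch": 1.019830028328612}
{"current_steps": 95, "total_steps": 176, "loss": 0.7635, "accuracy": 0.9125000238418579, "lr": 2.1884379164070353e-06, "epoch": 1.0764872521246458}
{"current_steps": 176, "total_steps": 176, "epoch": 1.9943342776203967}
"""

PEAK_LR = 5e-6  # assumption: inferred from the logged lr values


def cosine_lr(step: int, total: int, peak: float = PEAK_LR) -> float:
    """Cosine decay from `peak` to 0 over `total` steps, no warmup (assumed schedule)."""
    return peak * 0.5 * (1.0 + math.cos(math.pi * step / total))


records = [json.loads(line) for line in LOG_LINES.splitlines() if line.strip()]
step_records = [r for r in records if "loss" in r]  # drop the end-of-run summary line

# Mean logged accuracy per integer epoch (0 = first pass, 1 = second pass).
by_epoch = defaultdict(list)
for r in step_records:
    by_epoch[int(r["epoch"])].append(r["accuracy"])
epoch_acc = {epoch: mean(vals) for epoch, vals in sorted(by_epoch.items())}

# Largest relative gap between the logged lr and the assumed cosine schedule.
max_rel_err = max(
    abs(r["lr"] - cosine_lr(r["current_steps"], r["total_steps"])) / r["lr"]
    for r in step_records
)
print(epoch_acc, f"max relative lr error: {max_rel_err:.1e}")
```

Pointing `LOG_LINES` at the full file instead of this excerpt applies the same checks to all 35 step records.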

trainer_state.json (ADDED)

    @@ -0,0 +1,3 @@
    +version https://git-lfs.github.com/spec/v1
    +oid sha256:598c66082b60d4b5d847858a5dbfb142d43724c0216779a19315ab133f2f9347
    +size 21150

training_args.bin (ADDED)

    @@ -0,0 +1,3 @@
    +version https://git-lfs.github.com/spec/v1
    +oid sha256:0ff7a50e065c6f9c04994a2cd6af2446c829484cc8c1f48bf8fc375d8546488f
    +size 7288

training_loss.png (ADDED)

training_rewards_accuracies.png (ADDED)