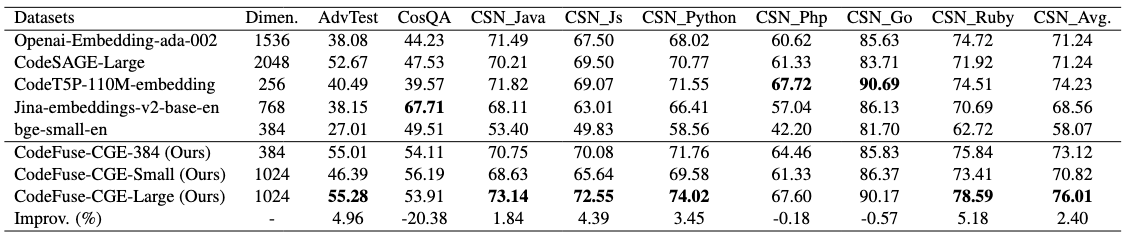

**Homepage**: 🏡 https://github.com/codefuse-ai/CodeFuse-CGE (**Please give us your support with a Star🌟 + Fork🚀 + Watch👀**)

## Model Description

CodeFuse-CGE-Small is the small member of the CodeFuse-CGE family, fine-tuned from Phi-3.5-mini-instruct. It performs well on the text2code retrieval task because it captures the semantic relationship between natural-language text and code.

This model has the following notable features:

- Instruction tuning is applied on both the query side and the code-snippet side.
- Sentence-level and code-level representations are obtained through a cross-attention computation layer.
- The embedding dimension is smaller, without significant degradation in performance.

## Model Configuration

- Model Size: 3.8B
- Embedding Dimension: 1024
- Hidden Layers: 32
- Max Input Tokens: 1024

## Requirements

```
flash_attn==2.4.2
torch==2.1.0
accelerate==0.28.0
transformers==4.43.0
```

## How to Use

### transformers

```
from transformers import AutoTokenizer, AutoModel
import torch

# Load the model and tokenizer; the model ships custom code, so trust_remote_code=True is required.
model_name_or_path = "codefuse-ai/CodeFuse-CGE-Small"
model = AutoModel.from_pretrained(model_name_or_path, trust_remote_code=True)
tokenizer = AutoTokenizer.from_pretrained(model_name_or_path, trust_remote_code=True,
                                          truncation_side='right', padding_side='right')

# Run on GPU when available, otherwise fall back to CPU.
if torch.cuda.is_available():
    device = 'cuda'
else:
    device = 'cpu'
model.to(device)

# Instruction strings for the query side and the code (passage) side, per language.
prefix_dict = {
    'python': {'query': 'Retrieve the Python code that solves the following query:', 'passage': 'Python code:'},
    'java': {'query': 'Retrieve the Java code that solves the following query:', 'passage': 'Java code:'},
    'go': {'query': 'Retrieve the Go code that solves the following query:', 'passage': 'Go code:'},
    'c++': {'query': 'Retrieve the C++ code that solves the following query:', 'passage': 'C++ code:'},
    'javascript': {'query': 'Retrieve the Javascript code that solves the following query:', 'passage': 'Javascript code:'},
    'php': {'query': 'Retrieve the PHP code that solves the following query:', 'passage': 'PHP code:'},
    'ruby': {'query': 'Retrieve the Ruby code that solves the following query:', 'passage': 'Ruby code:'},
    'default': {'query': 'Retrieve the code that solves the following query:', 'passage': 'Code:'},
}

text = ["Writes a Boolean to the stream.",
        "def writeBoolean(self, n): t = TYPE_BOOL_TRUE if n is False: t = TYPE_BOOL_FALSE self.stream.write(t)"]
# Attach the Python query instruction to the text and the passage instruction to the code.
text[0] += prefix_dict['python']['query']
text[1] += prefix_dict['python']['passage']

# Encode both inputs and score the pair with a dot product of their embeddings.
embed = model.encode(tokenizer, text)
score = embed[0] @ embed[1].T
print("score", score)
```

## Benchmark Performance

We use Mean Reciprocal Rank (MRR) to evaluate text2code retrieval on AdvTest, CoSQA, and CodeSearchNet (CSN).

## Acknowledgement

Thanks to the authors of the open-source datasets, including CSN, AdvTest, and CoSQA.

## License

Since CodeFuse-CGE-Small is fine-tuned from the Phi-3 model, its usage license follows the same terms as the Phi-3 model.
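## Sketch: Cross-Attention Pooling

The model description states that sentence-level and code-level representations come from a cross-attention layer, but does not spell that layer out. The sketch below shows one generic form of cross-attention pooling in plain Python, for illustration only: a single query vector (the name `query` and the helper `cross_attention_pool` are hypothetical) scores every token hidden state, and a softmax over those scores weights the states into one vector. It is not the CodeFuse-CGE implementation; a practical module would normally add learned key/value projections and multiple heads, and this sketch does not model the projection down to the 1024-dimensional embedding.

```python
import math
from typing import List, Sequence

Vector = List[float]


def cross_attention_pool(query: Sequence[float], token_states: Sequence[Sequence[float]]) -> Vector:
    """Pool per-token hidden states into one vector with a single attention query.

    Illustrative only: `query` stands in for a learned query vector, and the token
    states serve directly as keys and values (no learned projections).
    """
    if not token_states:
        raise ValueError("need at least one token state")
    d = len(query)
    # Scaled dot-product score between the query and each token state.
    scores = [sum(q * h for q, h in zip(query, state)) / math.sqrt(d) for state in token_states]
    # Softmax over tokens, shifted by the max score for numerical stability.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # The attention-weighted sum of token states is the pooled representation.
    return [sum(w * state[i] for w, state in zip(weights, token_states)) for i in range(d)]


# Example: a zero query attends uniformly, so the result is the mean of the states.
print(cross_attention_pool([0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]]))  # [0.5, 0.5]
```

Compared with mean pooling, the attention weights let a learned query emphasize the tokens that matter most for retrieval, which is the general motivation for this kind of pooling.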
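## Sketch: Ranking Code Candidates

The How to Use example scores a single query/code pair. Retrieving from several candidates amounts to scoring each candidate against the query and sorting. The sketch below does this in plain Python with cosine similarity; `rank_candidates` and `cosine_similarity` are hypothetical helper names, and it assumes the embeddings have already been converted to Python lists (for example with `.tolist()` on a PyTorch tensor or NumPy array). It uses cosine rather than the raw dot product from the example because it does not assume that `model.encode` returns L2-normalized vectors; for normalized vectors the two orderings agree.

```python
import math
from typing import List, Optional, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # Treat zero vectors as unrelated to everything.
    return dot / (norm_a * norm_b)


def rank_candidates(
    query_emb: Sequence[float],
    code_embs: Sequence[Sequence[float]],
    k: Optional[int] = None,
) -> List[Tuple[int, float]]:
    """Return (candidate index, score) pairs sorted from best to worst match."""
    scored = [(i, cosine_similarity(query_emb, c)) for i, c in enumerate(code_embs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored if k is None else scored[:k]


# Toy 2-D embeddings: candidate 1 points the same way as the query.
ranked = rank_candidates([1.0, 0.0], [[0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
print([i for i, _ in ranked])  # [1, 2, 0]
```

With real model output, the first row of the encoded batch would play the role of `query_emb` and the remaining rows the candidate code embeddings.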
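## Sketch: Computing MRR

The benchmark section reports Mean Reciprocal Rank. For each query, the reciprocal rank is 1/r, where r is the 1-based position of the correct code snippet in the ranked candidate list (0 if it does not appear); MRR is the mean of these values over all queries. The minimal sketch below computes it with a hypothetical helper `mean_reciprocal_rank`; the candidate pools and tie handling behind the reported numbers are not specified here, so this only illustrates the metric itself.

```python
from typing import Hashable, Sequence


def mean_reciprocal_rank(rankings: Sequence[Sequence[Hashable]], gold: Sequence[Hashable]) -> float:
    """MRR over queries: rankings[q] lists candidate ids best-first; gold[q] is the correct id."""
    if not gold or len(rankings) != len(gold):
        raise ValueError("need one ranking per query and at least one query")
    total = 0.0
    for ranking, target in zip(rankings, gold):
        for position, candidate in enumerate(ranking, start=1):
            if candidate == target:
                total += 1.0 / position  # reciprocal of the 1-based rank
                break
        # If the target never appears, this query contributes 0.
    return total / len(gold)


# Query 1 finds its answer at rank 2, query 2 at rank 1: (1/2 + 1/1) / 2 = 0.75
print(mean_reciprocal_rank([[3, 1, 2], [2, 3, 1]], [1, 2]))  # 0.75
```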