Commit

•

6c1d00f

1

Parent(s):

9852211

Q4 gguf, config.json, image processing

Browse files- .gitattributes +2 -0

- NousResearch_Nous-Hermes-2-Vision-GGUF_Q4_0.gguf +3 -0

- README.md +131 -0

- config.json +37 -0

- mmproj-model-f16.gguf +3 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,5 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

mmproj-model-f16.gguf filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

NousResearch_Nous-Hermes-2-Vision-GGUF_Q4_0.gguf filter=lfs diff=lfs merge=lfs -text

|

NousResearch_Nous-Hermes-2-Vision-GGUF_Q4_0.gguf

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:b3fa9f25cb31da1746288f8bf2e88ab110d28d6510e662efd9f4100c2ea83ba4

|

| 3 |

+

size 4108928000

|

README.md

ADDED

|

@@ -0,0 +1,131 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

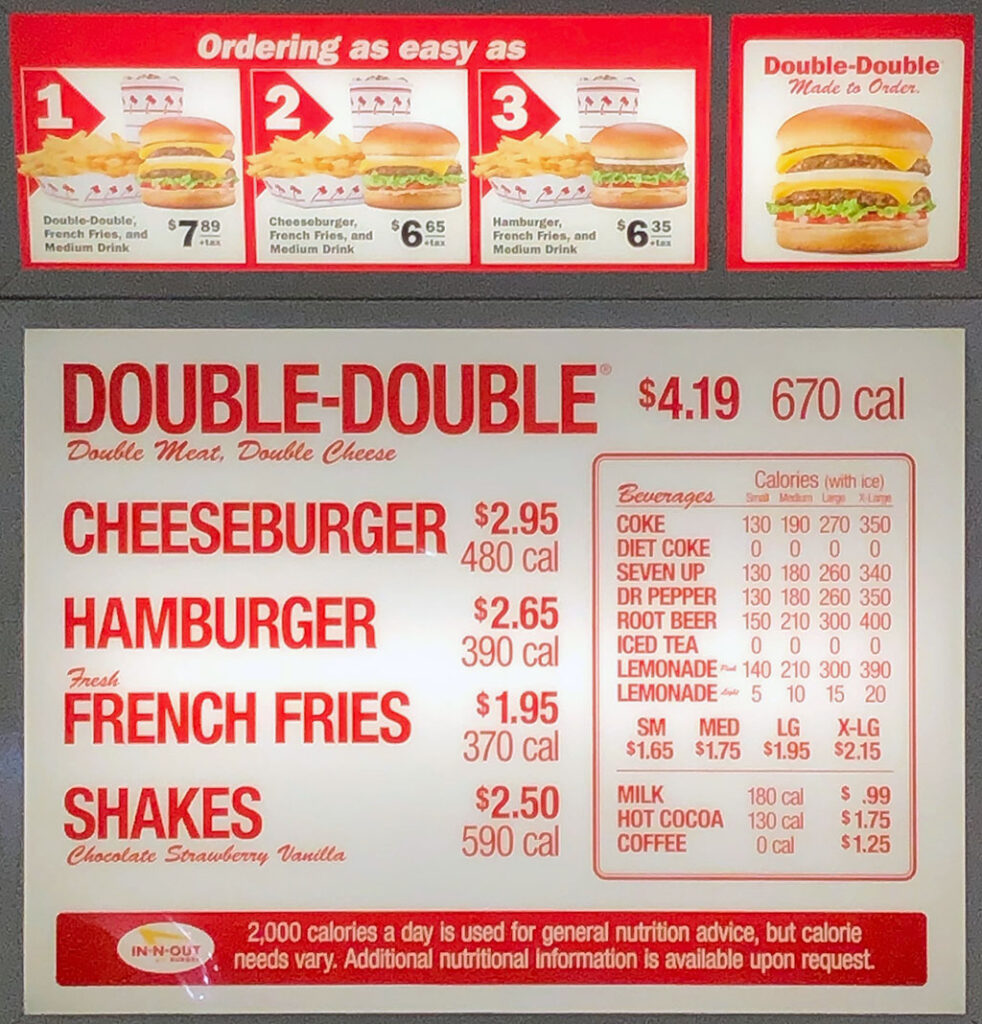

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

base_model: mistralai/Mistral-7B-v0.1

|

| 3 |

+

tags:

|

| 4 |

+

- mistral

|

| 5 |

+

- instruct

|

| 6 |

+

- finetune

|

| 7 |

+

- chatml

|

| 8 |

+

- gpt4

|

| 9 |

+

- synthetic data

|

| 10 |

+

- distillation

|

| 11 |

+

- multimodal

|

| 12 |

+

- llava

|

| 13 |

+

model-index:

|

| 14 |

+

- name: Nous-Hermes-2-Vision

|

| 15 |

+

results: []

|

| 16 |

+

license: apache-2.0

|

| 17 |

+

language:

|

| 18 |

+

- en

|

| 19 |

+

---

|

| 20 |

+

|

| 21 |

+

GGUF Quants by Twobob, Thanks to @jartine and @cmp-nct for the assists

|

| 22 |

+

|

| 23 |

+

It's vicuna ref: [here](https://github.com/qnguyen3/hermes-llava/blob/173b4ef441b5371c1e7d99da7a2e7c14c77ad12f/llava/conversation.py#L252)

|

| 24 |

+

|

| 25 |

+

Caveat emptor: There is still some kind of bug in the inference that is likely to get fixed upstream. Just FYI

|

| 26 |

+

|

| 27 |

+

|

| 28 |

+

|

| 29 |

+

# Nous-Hermes-2-Vision - Mistral 7B

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

*In the tapestry of Greek mythology, Hermes reigns as the eloquent Messenger of the Gods, a deity who deftly bridges the realms through the art of communication. It is in homage to this divine mediator that I name this advanced LLM "Hermes," a system crafted to navigate the complex intricacies of human discourse with celestial finesse.*

|

| 35 |

+

|

| 36 |

+

## Model description

|

| 37 |

+

|

| 38 |

+

Nous-Hermes-2-Vision stands as a pioneering Vision-Language Model, leveraging advancements from the renowned **OpenHermes-2.5-Mistral-7B** by teknium. This model incorporates two pivotal enhancements, setting it apart as a cutting-edge solution:

|

| 39 |

+

|

| 40 |

+

- **SigLIP-400M Integration**: Diverging from traditional approaches that rely on substantial 3B vision encoders, Nous-Hermes-2-Vision harnesses the formidable SigLIP-400M. This strategic choice not only streamlines the model's architecture, making it more lightweight, but also capitalizes on SigLIP's remarkable capabilities. The result? A remarkable boost in performance that defies conventional expectations.

|

| 41 |

+

|

| 42 |

+

- **Custom Dataset Enriched with Function Calling**: Our model's training data includes a unique feature – function calling. This distinctive addition transforms Nous-Hermes-2-Vision into a **Vision-Language Action Model**. Developers now have a versatile tool at their disposal, primed for crafting a myriad of ingenious automations.

|

| 43 |

+

|

| 44 |

+

This project is led by [qnguyen3](https://twitter.com/stablequan) and [teknium](https://twitter.com/Teknium1).

|

| 45 |

+

## Training

|

| 46 |

+

### Dataset

|

| 47 |

+

- 220K from **LVIS-INSTRUCT4V**

|

| 48 |

+

- 60K from **ShareGPT4V**

|

| 49 |

+

- 150K Private **Function Calling Data**

|

| 50 |

+

- 50K conversations from teknium's **OpenHermes-2.5**

|

| 51 |

+

|

| 52 |

+

## Usage

|

| 53 |

+

### Prompt Format

|

| 54 |

+

- Like other LLaVA's variants, this model uses Vicuna-V1 as its prompt template. Please refer to `conv_llava_v1` in [this file](https://github.com/qnguyen3/hermes-llava/blob/main/llava/conversation.py)

|

| 55 |

+

- For Gradio UI, please visit this [GitHub Repo](https://github.com/qnguyen3/hermes-llava)

|

| 56 |

+

|

| 57 |

+

### Function Calling

|

| 58 |

+

- For functiong calling, the message should start with a `<fn_call>` tag. Here is an example:

|

| 59 |

+

|

| 60 |

+

```json

|

| 61 |

+

<fn_call>{

|

| 62 |

+

"type": "object",

|

| 63 |

+

"properties": {

|

| 64 |

+

"bus_colors": {

|

| 65 |

+

"type": "array",

|

| 66 |

+

"description": "The colors of the bus in the image.",

|

| 67 |

+

"items": {

|

| 68 |

+

"type": "string",

|

| 69 |

+

"enum": ["red", "blue", "green", "white"]

|

| 70 |

+

}

|

| 71 |

+

},

|

| 72 |

+

"bus_features": {

|

| 73 |

+

"type": "string",

|

| 74 |

+

"description": "The features seen on the back of the bus."

|

| 75 |

+

},

|

| 76 |

+

"bus_location": {

|

| 77 |

+

"type": "string",

|

| 78 |

+

"description": "The location of the bus (driving or pulled off to the side).",

|

| 79 |

+

"enum": ["driving", "pulled off to the side"]

|

| 80 |

+

}

|

| 81 |

+

}

|

| 82 |

+

}

|

| 83 |

+

```

|

| 84 |

+

|

| 85 |

+

Output:

|

| 86 |

+

```json

|

| 87 |

+

{

|

| 88 |

+

"bus_colors": ["red", "white"],

|

| 89 |

+

"bus_features": "An advertisement",

|

| 90 |

+

"bus_location": "driving"

|

| 91 |

+

}

|

| 92 |

+

```

|

| 93 |

+

|

| 94 |

+

## Example

|

| 95 |

+

|

| 96 |

+

### Chat

|

| 97 |

+

|

| 98 |

+

|

| 99 |

+

### Function Calling

|

| 100 |

+

Input image:

|

| 101 |

+

|

| 102 |

+

|

| 103 |

+

|

| 104 |

+

Input message:

|

| 105 |

+

```json

|

| 106 |

+

<fn_call>{

|

| 107 |

+

"type": "object",

|

| 108 |

+

"properties": {

|

| 109 |

+

"food_list": {

|

| 110 |

+

"type": "array",

|

| 111 |

+

"description": "List of all the food",

|

| 112 |

+

"items": {

|

| 113 |

+

"type": "string",

|

| 114 |

+

}

|

| 115 |

+

},

|

| 116 |

+

}

|

| 117 |

+

}

|

| 118 |

+

```

|

| 119 |

+

|

| 120 |

+

Output:

|

| 121 |

+

```json

|

| 122 |

+

{

|

| 123 |

+

"food_list": [

|

| 124 |

+

"Double Burger",

|

| 125 |

+

"Cheeseburger",

|

| 126 |

+

"French Fries",

|

| 127 |

+

"Shakes",

|

| 128 |

+

"Coffee"

|

| 129 |

+

]

|

| 130 |

+

}

|

| 131 |

+

```

|

config.json

ADDED

|

@@ -0,0 +1,37 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"_name_or_path": "teknium/OpenHermes-2.5-Mistral-7B",

|

| 3 |

+

"architectures": [

|

| 4 |

+

"LlavaMistralForCausalLM"

|

| 5 |

+

],

|

| 6 |

+

"bos_token_id": 1,

|

| 7 |

+

"eos_token_id": 32000,

|

| 8 |

+

"freeze_mm_mlp_adapter": false,

|

| 9 |

+

"hidden_act": "silu",

|

| 10 |

+

"hidden_size": 4096,

|

| 11 |

+

"image_aspect_ratio": "pad",

|

| 12 |

+

"image_grid_pinpoints": null,

|

| 13 |

+

"initializer_range": 0.02,

|

| 14 |

+

"intermediate_size": 14336,

|

| 15 |

+

"max_position_embeddings": 32768,

|

| 16 |

+

"mm_hidden_size": 1152,

|

| 17 |

+

"mm_projector_type": "mlp2x_gelu",

|

| 18 |

+

"mm_use_im_patch_token": false,

|

| 19 |

+

"mm_use_im_start_end": false,

|

| 20 |

+

"mm_vision_select_feature": "patch",

|

| 21 |

+

"mm_vision_select_layer": -2,

|

| 22 |

+

"mm_vision_tower": "ikala/ViT-SO400M-14-SigLIP-384-hf",

|

| 23 |

+

"model_type": "llava_mistral",

|

| 24 |

+

"num_attention_heads": 32,

|

| 25 |

+

"num_hidden_layers": 32,

|

| 26 |

+

"num_key_value_heads": 8,

|

| 27 |

+

"rms_norm_eps": 1e-05,

|

| 28 |

+

"rope_theta": 10000.0,

|

| 29 |

+

"sliding_window": 4096,

|

| 30 |

+

"tie_word_embeddings": false,

|

| 31 |

+

"torch_dtype": "bfloat16",

|

| 32 |

+

"transformers_version": "4.34.1",

|

| 33 |

+

"tune_mm_mlp_adapter": false,

|

| 34 |

+

"use_cache": true,

|

| 35 |

+

"use_mm_proj": true,

|

| 36 |

+

"vocab_size": 32002

|

| 37 |

+

}

|

mmproj-model-f16.gguf

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:5c2a602b13c155db542d26fa5884e98e1ca4f2bb947e18957fc32c8f87492182

|

| 3 |

+

size 839316352

|