Aaron C Wacker PRO

awacke1's activity

"design a coral defense mechanism that upon sensing say an acid that's causing coral reefs to have a carbon dioxide issue it develops... please create a plan and a design for this"

It picks these three as the best combined solution.

1. [Reef-insight: A framework for reef habitat mapping with clustering methods via remote sensing]...

2. Phone a friend: [Learning to Communicate and Collaborate in a Competitive Multi-Agent Setup to Clean the Ocean from Macroplastics]...

3. World Solve: [Dependence of Physiochemical Features on Marine Chlorophyll Analysis with Learning Techniques]

To design a system that allows coral reefs to respond to increased acidity levels in their environment, we can create a network of pH sensors and dispersal units that can detect changes in pH levels and release a base solution to neutralize the acid.

1. pH Sensors: The first component of the system would be a network of pH sensors placed strategically throughout the coral reef. These sensors would be small, durable, and able to withstand the harsh conditions of the ocean. They would be placed at various depths and locations within the reef to ensure accurate and comprehensive monitoring of pH levels.

2. Base Dispersal Units: Once the pH sensors detect a decrease in pH levels, they would trigger the base dispersal units to release a base solution into the water. These units would be strategically placed around the reef and would be able to release a controlled amount of base solution to neutralize the acidity in the water.

3. Water Dispersal Mechanism: The base dispersal units would be connected to a water dispersal mechanism that would allow the base solution to be distributed evenly around the reef. This could be achieved through a series of pipes or channels that would distribute the base solution in a controlled and targeted manner.
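The detect-and-release loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the trigger threshold, the sensor and unit names, and the sensor-to-unit mapping are all hypothetical and are not part of the generated plan.

```python
from dataclasses import dataclass

# Hypothetical trigger level; a real deployment would need a calibrated value.
PH_THRESHOLD = 8.0


@dataclass
class SensorReading:
    sensor_id: str
    depth_m: float
    ph: float


def units_to_trigger(readings, unit_for_sensor, threshold=PH_THRESHOLD):
    """Return the sorted dispersal units whose sensors report a pH below the threshold."""
    triggered = {
        unit_for_sensor[reading.sensor_id]
        for reading in readings
        if reading.ph < threshold
    }
    return sorted(triggered)


# Example: two sensors share one dispersal unit, a third has its own.
readings = [
    SensorReading("s1", depth_m=2.0, ph=7.9),
    SensorReading("s2", depth_m=5.0, ph=8.1),
    SensorReading("s3", depth_m=9.0, ph=7.8),
]
unit_for_sensor = {"s1": "unit_a", "s2": "unit_a", "s3": "unit_b"}
print(units_to_trigger(readings, unit_for_sensor))
```

Keeping the trigger decision separate from the release hardware means the threshold and the sensor layout can be changed without touching the dispersal logic.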

This is a read-aloud lecture that answers questions about using language reasoning techniques in advanced AGI-style chain-of-thought AI pipelines.

Produced using DeepResearchEvaluator located here: awacke1/DeepResearchEvaluator

Videos:

https://x.com/Aaron_Wacker/status/1874835790087463063

https://www.youtube.com/watch?v=fW_A1hH_7RM

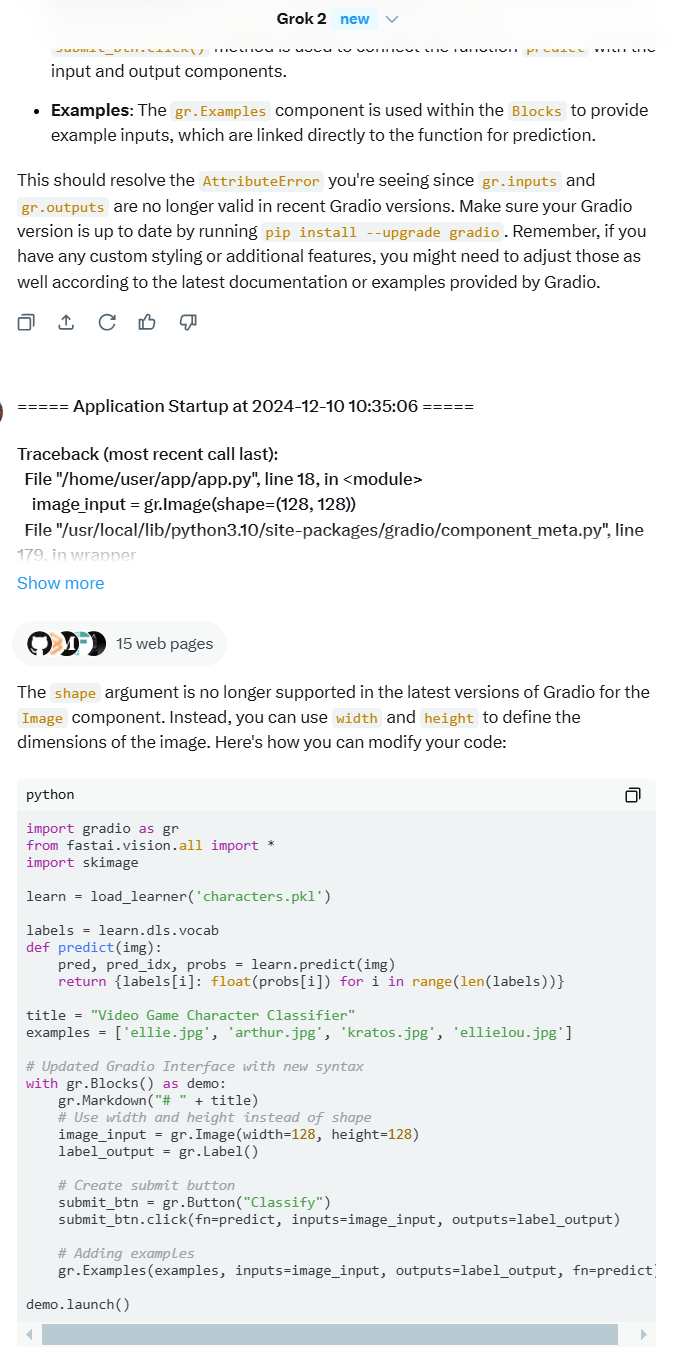

After trying pretty much everything, I gave the latest Grok2 a spin at converting my old, malfunctioning Python/Gradio code, which no longer matches the current syntax and features of Python and Gradio.

In my opinion, Grok2 is the only one that could do this. See below for an instant one-shot win by Grok2 with Gradio! The greatest thing about it is that it shows its references as tweets, so you can see where the knowledge came from. Grok2 uses modern syntax and, unlike the OpenAI and Anthropic models, produces results that are aware of recent changes.

Grok2 for the win I guess!

I tried the straw man example shown below, which fails on the others but works on Grok2:

Original, which now fails if you upgrade Gradio:

import gradio as gr
from fastai.vision.all import *
import skimage

learn = load_learner('characters.pkl')
labels = learn.dls.vocab

def predict(img):
    pred,pred_idx,probs = learn.predict(img)
    return {labels[i]: float(probs[i]) for i in range(len(labels))}

title = "Video Game Character Classifier"
# description = "A pet breed classifier trained on the Oxford Pets dataset with fastai. Created as a demo for Gradio and HuggingFace Spaces."
#article="<p style='text-align: center'><a href='https://tmabraham.github.io/blog/gradio_hf_spaces_tutorial' target='_blank'>Blog post</a></p>"
examples = ['ellie.jpg','arthur.jpg','kratos.jpg','ellielou.jpg']
interpretation='default'
enable_queue=True

gr.Interface(fn=predict,inputs=gr.inputs.Image(shape=(128, 128)),outputs=gr.outputs.Label(),title=title,examples=examples,interpretation=interpretation,enable_queue=enable_queue).launch()

Second Grok2 prompt (first switched to blocks shown below).

Grok2's second shot, prompted with examples of the errors, gave working code and also tweet references!!

import gradio as gr
from fastai.vision.all import *
import skimage

learn = load_learner('characters.pkl')
labels = learn.dls.vocab

def predict(img):
    pred, pred_idx, probs = learn.predict(img)
    return {labels[i]: float(probs[i]) for i in range(len(labels))}

title = "Video Game Character Classifier"
examples = ['ellie.jpg', 'arthur.jpg', 'kratos.jpg', 'ellielou.jpg']

# Updated Gradio Interface with new syntax
with gr.Blocks() as demo:
    gr.Markdown("# " + title)

    # Use width and height instead of shape
    image_input = gr.Image(width=128, height=128)
    label_output = gr.Label()

    # Create submit button
    submit_btn = gr.Button("Classify")
    submit_btn.click(fn=predict, inputs=image_input, outputs=label_output)

    # Adding examples
    gr.Examples(examples, inputs=image_input, outputs=label_output, fn=predict)

demo.launch()
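The differences between the two versions above can also be checked mechanically before asking a model to fix anything. The sketch below is a crude text scan, not a Gradio tool: the pattern list is an assumption drawn only from what changed between the two listings (`gr.inputs`, `gr.outputs`, `shape=`, `interpretation=`, `enable_queue=`), and the function name `find_legacy_gradio` is hypothetical.

```python
import re

# Hypothetical pattern list, taken from the differences between the two listings.
LEGACY_PATTERNS = {
    r"\bgr\.inputs\.": "use the component directly, e.g. gr.Image",
    r"\bgr\.outputs\.": "use the component directly, e.g. gr.Label",
    r"\bshape\s*=": "use width= and height= on gr.Image",
    r"\binterpretation\s*=": "not present in the updated version",
    r"\benable_queue\s*=": "not present in the updated version",
}


def find_legacy_gradio(source: str) -> list[tuple[int, str, str]]:
    """Return (line number, matched text, hint) for each legacy pattern found."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        if line.lstrip().startswith("#"):
            continue  # skip comment lines
        for pattern, hint in LEGACY_PATTERNS.items():
            match = re.search(pattern, line)
            if match:
                findings.append((lineno, match.group(0), hint))
    return findings


old_call = (
    "gr.Interface(fn=predict, inputs=gr.inputs.Image(shape=(128, 128)), "
    "outputs=gr.outputs.Label(), enable_queue=True).launch()"
)
for lineno, matched, hint in find_legacy_gradio(old_call):
    print(f"line {lineno}: {matched!r} -> {hint}")
```

Because it matches plain text, the scan can flag unrelated variables that happen to share a name, so its findings are hints to paste into a prompt rather than a verdict.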

🚲 Stolen bike in Denver FOUND - Sometimes hope & justice DO prevail.

🎬 So I Created an AI+Art+Music tribute:

-🧠 AI App that Evaluates GPT-4o vs Claude:

awacke1/RescuerOfStolenBikes

https://x.com/Aaron_Wacker/status/1857640877986033980

#GPT #Claude #Huggingface @OpenAI @AnthropicAI

Most excellent dataset, sir. This is quite useful. Thanks! Aaron

Link: ajibawa-2023/Software-Architecture

I am releasing a large dataset covering topics related to software architecture. It consists of around 450,000 lines of data in JSONL format.

I have included the following topics:

Architectural Frameworks

Architectural Patterns for Reliability

Architectural Patterns for Scalability

Architectural Patterns

Architectural Quality Attributes

Architectural Testing

Architectural Views

Architectural Decision-Making

Advanced Research

Cloud-Based Architectures

Component-Based Architecture

Data Architecture

Emerging Trends

Event-Driven Architecture

Evolvability and Maintainability

Microservices and Monolithic

Microservices Architecture

Security Architecture

Service-Oriented Architecture

Software Design Principles

and Many More!

This dataset is useful for LLM development, especially for anyone building LLMs focused on software development.

It is also very useful to researchers.

open-llm-leaderboard/comparator

Dive into multi-model evaluations, pinpoint the best model for your needs, and explore insights across top open LLMs all in one place. Ready to level up your model comparison game?

Thank you for the tips and insight on Gradio 5. Much appreciated.

1. Streamlit

2. Gradio

The reason I chose them in this order is that Streamlit had a timing advantage over Gradio: it was available in near-final form a year or two before GPT's training data cutoff.

Nowadays, if you want generated code to be right on the first try, method names need to stay consistent so that no manual intervention is required on each attempt.

With GPT and Claude as my top two AI pair programming models, I gravitate towards Streamlit. Apart from commonly repeated errors around the cache and experimental functions, which had not solidified circa 2022, its API has stayed consistent.

Because of that consistency, it rarely needs human correction, and errors caused by old training data are minimal.

Now I want the same consistency on the Gradio side. Why? Gradio lapped Streamlit with the Blocks paradigm and a free API, which I feel are amazing features that change software engineering forever.

For a few months I thought BigCode would become the new best model because of its training corpus, yet I never felt it got to market as the next best AI coding model.

I am curious about Gradio's future. If the two main models (GPT and Claude) pick up the changes of the last few years, I could then code with AI without manual intervention. As it stands today, Gradio would be the better choice if you could get the best coding models to stop confusing old syntax with current syntax, yet we do live in an imperfect world!

Is anyone using an AI pair programming model that rocks with Gradio's latest syntax? I would like to code with a model that does not miss the advancements and syntax changes Gradio has had in the past few years. I am trying Grok2 as well.

My favorite coding IDE is HF. It's hands down faster (100x) than other cloud paradigms. Any tips on the best models for Gradio coding?

--Aaron

Have you ever dreamt of an improbable book crossover, like Frodo from 𝘓𝘰𝘳𝘥 𝘰𝘧 𝘵𝘩𝘦 𝘙𝘪𝘯𝘨𝘴 becoming the main character of the 𝘖𝘥𝘺𝘴𝘴𝘦𝘺 or Emma Bovary from 𝘔𝘢𝘥𝘢𝘮𝘦 𝘉𝘰𝘷𝘢𝘳𝘺 acting as a modern-day Shakespearean Juliet?

Well, all of this is now possible! I'm thrilled to introduce my latest opensource product for storytelling: 𝐛𝐨𝐨𝐤𝐬-𝐦𝐢𝐱𝐞𝐫-𝐚𝐢 𝐯𝟎.𝟎.𝟎 !

Built with ReactJS and shipped directly to you on Spaces thanks to Docker, this webapp combines the power of two AI tools:

- gpt-4o-mini by OpenAI, which takes care of cooking new and intriguing plots starting from the user's instructions, the titles and the summaries of the two books to mix (summaries are scraped through Wikipedia)

- text2img realtime API by ModelsLab, which provides a stable diffusion pipeline to create a thumbnail for your newly-generated story

Everything is provided under a simple and intuitive UI, which uses chatscope's React template kit.

Curious to try it? The app is already live at:

as-cle-bert/books-mixer-ai

And you can also have a tour of the GitHub repo (and leave a little ⭐ while you're there):

https://github.com/AstraBert/books-mixer-ai

The documentation is still under construction, but will become available soon😊

Have fun!📚📚

Yes, they may be subpar and may require changes to llama.cpp to support the interleaved sliding window

Yes, I got excited when a conversion worked and released them ASAP

That said, generation seems to work right now and seems to mimic the output from spaces that are running the original model

I have appended -TEST to the model names to indicate that they are not final or perfect. If people still feel misled and think this is not the right thing to do, please post your thoughts (civilly) below. I will seriously consider pulling the conversions if that's what people think is best. After all, that's what I'm here for, in service to you all!

Hope you enjoy! Cheers, Aaron.

Also here are my favorite last 4 spaces I am working on:

1. GPT4O: awacke1/GPT-4o-omni-text-audio-image-video

2. Claude: awacke1/AnthropicClaude3.5Sonnet-ACW

3. MSGraph M365: awacke1/MSGraphAPI

4. Azure Cosmos DB: Now with Research AI! awacke1/AzureCosmosDBUI

# 🚀 OpenAI's O1 Models: A Quantum Leap in AI

## 1. 🤔 From 🦜 to 🧠: O1's Evolution

- **Thinking AI**: O1 ponders before replying; GPT models just predict. 💡

## 2. 📚 AI Memory: 💾 + 🧩 = 🧠

- **Embeddings & Tokens**: Words ➡️ vectors, building knowledge. 📖

## 3. 🔍 Swift Knowledge Retrieval

- **Vector Search & Indexing**: O1 finds info fast, citing reliable sources. 🔎📖

## 4. 🌳 Logic Trees with Mermaid Models

- **Flowchart Reasoning**: O1 structures thoughts like diagrams. 🎨🌐

## 5. 💻 Coding Mastery

- **Multilingual & Current**: Speaks many code languages, always up-to-date. 💻🔄

## 6. 🏆 Breaking Records

- **92.3% MMLU Score**: O1 outperforms humans, setting new AI standards. 🏅

## 7. 💡 Versatile Applications

- **Ultimate Assistant**: From fixing code to advancing research. 🛠️🔬

## 8. 🏁 Racing Toward AGI

- **OpenAI Leads**: O1 brings us closer to true AI intelligence. 🚀

## 9. 🤖 O1's Reasoning Pillars

- **🧠 Chain of Thought**: Step-by-step logic.

- **🎲 MCTS**: Simulates options, picks best path.

- **🔍 Reflection**: Self-improves autonomously.

- **🏋️ Reinforcement Learning**: Gets smarter over time.

---

*Stay curious, keep coding!* 🚀

This app shows an initial implementation of security, authentication, scopes, and access to Outlook, Calendar, Tasks, OneDrive, and other apps, exposing CRUD operations as AI agent service skills that integrate with your AI workflow.

Below are initial screens showing integration:

URL: awacke1/MSGraphAPI

Discussion: awacke1/MSGraphAPI#5

Best of AI on @Azure and @Microsoft on @HuggingFace :

https://huggingface.co/microsoft

https://www.microsoft.com/en-us/research/

---

Aaron

URL: https://huggingface.co/spaces/awacke1/stable-video-diffusion

It uses Stable Diffusion to dynamically create videos from images, either from the input directory or uploaded, running on an A10 GPU on Hugging Face.

Samples below.

I may transition this to Zero GPU if I can. When I revised this over Christmas, I had my highest bill from HF yet due to GPU usage. It is still the best turnkey GPU option out there, and Image2Video is a killer app. Thanks HF for the possibilities!

For anyone learning AI model development - this is a great starter!

awacke1/Image-to-Line-Drawings

I initially used it for research prompts and research outputs with my GPT-4o client, which can interface with and search ArXiv, and I am excited to try out some new features specifically for AI at scale. Research on memory augmentation is shown. awacke1/GPT-4o-omni-text-audio-image-video

awacke1/AzureCosmosDBUI

Features + how it works:

✍️ Generate the dataset content you want just by entering a file name

💡 Optionally specify the column names you need

💨 The dataset is streamed and generated on-the-fly in JSON Lines format

✅ Generation is constrained to always output valid JSON

How does this work?

1/ Enter a file name

2/ The model generates column names for such a file. Using structured generation, it can generate 2 to 5 column names using lower case characters and underscores. I use a low temperature and a prompt that asks for column names for a realistic dataset.

3/ The columns are used to update the Finite State Machine that drives structured generation of the dataset content, so that it generates JSON objects using those columns

4/ The model generates JSON objects using structured generation again, using the updated Finite State Machine. I use a prompt that asks for realistic data and a temperature of 1.
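As a rough illustration of the constraints in steps 2 to 4, the sketch below expresses the column-name rule as a regular expression and checks that a generated line is a JSON object with exactly those columns. It validates text after the fact rather than constraining generation, and the pattern, the JSON-array format for the column list, and the function names are assumptions, not the demo's actual code.

```python
import json
import re

# Assumed format: a JSON array of 2 to 5 names made of lower-case letters and underscores.
COLUMNS_PATTERN = re.compile(r'\["[a-z_]+"(?:, "[a-z_]+"){1,4}\]')


def parse_columns(text: str) -> list[str]:
    """Parse a column list, rejecting anything outside the 2-to-5 lower-case rule."""
    if COLUMNS_PATTERN.fullmatch(text) is None:
        raise ValueError(f"not a valid column list: {text!r}")
    return json.loads(text)


def row_matches_columns(line: str, columns: list[str]) -> bool:
    """Check that one JSON Lines row is an object with exactly the expected columns."""
    try:
        row = json.loads(line)
    except json.JSONDecodeError:
        return False
    return isinstance(row, dict) and list(row) == columns


columns = parse_columns('["name", "release_year"]')
print(columns)
print(row_matches_columns('{"name": "a", "release_year": 1999}', columns))
```

In the structured-generation setting, a rule like this is enforced while tokens are produced, so an invalid column list or row can never be emitted in the first place.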

> Why update a Finite State Machine instead of re-creating one?

Creating one can take up to 30 s, while updating one takes 0.1 s (though it requires manipulating a graph, which is not easy to implement)

> Batched generation is faster, why not use it?

Generating in batches is faster but tends to produce duplicates for this demo.

Further work could provide different prompts (one per sequence in the batch) to end up with a different distribution of sequences in each batch, or implement a custom sampler that forbids generating the same data in sequences of the same batch.

> How does structured generation work?

I used the outlines library with transformers to define a JSON schema that the generation has to follow. It uses a Finite State Machine with token_id as transitions.

Let me know what you think! And feel free to duplicate/modify it to try other models/prompts or sampling methods :)
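To show what "a Finite State Machine with token_id as transitions" means in practice, here is a toy sketch in plain Python. It does not use outlines or transformers: the seven-token vocabulary, the transition table, and the fixed scores standing in for model logits are all made up for illustration.

```python
import json

# Toy vocabulary: token_id -> text
VOCAB = {0: "{", 1: '"name"', 2: ":", 3: '"x"', 4: '"y"', 5: "}", 6: "hello"}

# state -> {allowed token_id: next state}; only paths that spell valid JSON exist.
FSM = {
    0: {0: 1},
    1: {1: 2},
    2: {2: 3},
    3: {3: 4, 4: 4},
    4: {5: 5},
}
FINAL_STATE = 5


def fake_scores() -> dict[int, float]:
    """Stand-in for model logits: the 'model' prefers the invalid token 'hello'."""
    return {0: 0.1, 1: 0.2, 2: 0.1, 3: 0.3, 4: 0.5, 5: 0.1, 6: 0.9}


def constrained_generate() -> str:
    """Greedy decoding where only the tokens allowed by the current state compete."""
    state, pieces = 0, []
    while state != FINAL_STATE:
        allowed = FSM[state]
        scores = fake_scores()
        token_id = max(allowed, key=lambda t: scores[t])  # mask, then pick the best
        pieces.append(VOCAB[token_id])
        state = allowed[token_id]
    return "".join(pieces)


text = constrained_generate()
print(text)
print(json.loads(text))
```

Even though the fake scores always favor `hello`, that token is never allowed by any state, so the output always parses as JSON. A real system builds the transition table from a JSON schema over a full tokenizer vocabulary, which is why creating it is the slow step.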

Question for the Community:

Which models should I use to generate images and audio samples for those datasets? 🤗

### 🔗 Try It Out! 👉 Check out the GPT-4o Multiplayer App

Experience the future of collaborative AI by visiting our space on Hugging Face: awacke1/ChatStreamlitMultiplayer

🎉 This innovative tool lets you and your team reason over:

### 📝 Text

### 🖼️ Image

### 🎵 Audio

### 🎥 Video

## 🔍 Key Features

### Shared Contributions

Collaborate in real-time, seeing each other's inputs and contributions.

Enhances teamwork and fosters a collective approach to problem-solving.

### Diverse Media Integration

Seamlessly analyze and reason with text, images, audio, and video.

Breakthrough capabilities in handling complex media types, including air traffic control images and audio.

## 🛠️ Real-World Testing

This morning, we tested the app using images and audio from air traffic control—a challenge that was nearly impossible to handle with ease just a few years ago. 🚁💬

## 🌱 The Future of AI Collaboration

We believe AI Pair Programming is evolving into a new era of intelligence through shared contributions and teamwork. As we continue to develop, this app will enable groups to:

Generate detailed text responses 📝

Collaborate on code responses 💻

Develop new AI programs together 🤖