Abdullah Al Asif committed on

Commit · 78cb487

1 Parent(s): b8fac3d

--base

This view is limited to 50 files because it contains too many changes.

- .env.template +41 -0

- .gitignore +166 -0

- LICENSE +21 -0

- README.md +101 -3

- assets/system_architecture.svg +0 -0

- assets/timing_chart.png +0 -0

- assets/video_demo.mov +3 -0

- data/models/kokoro.pth +3 -0

- data/voices/af.pt +3 -0

- data/voices/af_alloy.pt +3 -0

- data/voices/af_aoede.pt +3 -0

- data/voices/af_bella.pt +3 -0

- data/voices/af_bella_nicole.pt +3 -0

- data/voices/af_heart.pt +3 -0

- data/voices/af_jessica.pt +3 -0

- data/voices/af_kore.pt +3 -0

- data/voices/af_nicole.pt +3 -0

- data/voices/af_nicole_sky.pt +3 -0

- data/voices/af_nova.pt +3 -0

- data/voices/af_river.pt +3 -0

- data/voices/af_sarah.pt +3 -0

- data/voices/af_sarah_nicole.pt +3 -0

- data/voices/af_sky.pt +3 -0

- data/voices/af_sky_adam.pt +3 -0

- data/voices/af_sky_emma.pt +3 -0

- data/voices/af_sky_emma_isabella.pt +3 -0

- data/voices/am_adam.pt +3 -0

- data/voices/am_michael.pt +3 -0

- data/voices/bf_alice.pt +3 -0

- data/voices/bf_emma.pt +3 -0

- data/voices/bf_isabella.pt +3 -0

- data/voices/bm_george.pt +3 -0

- data/voices/bm_lewis.pt +3 -0

- data/voices/ef_dora.pt +3 -0

- data/voices/if_sara.pt +3 -0

- data/voices/jf_alpha.pt +3 -0

- data/voices/jf_gongitsune.pt +3 -0

- data/voices/pf_dora.pt +3 -0

- data/voices/zf_xiaoxiao.pt +3 -0

- data/voices/zf_xiaoyi.pt +3 -0

- requirements.txt +16 -0

- speech_to_speech.py +334 -0

- src/config/config.json +26 -0

- src/core/kokoro.py +156 -0

- src/models/istftnet.py +523 -0

- src/models/models.py +372 -0

- src/models/plbert.py +15 -0

- src/utils/__init__.py +35 -0

- src/utils/audio.py +42 -0

- src/utils/audio_io.py +48 -0

.env.template

ADDED

@@ -0,0 +1,41 @@
HUGGINGFACE_TOKEN=TOKEN_GOES_HERE
VOICE_NAME=af_nicole
SPEED=1.2

# LLM settings
LM_STUDIO_URL=http://localhost:1234/v1
OLLAMA_URL=http://localhost:11434/api/chat
DEFAULT_SYSTEM_PROMPT=You are a friendly, helpful, and intelligent assistant. Begin your responses with phrases like 'Umm,' 'So,' or similar. Focus on the user query and reply directly to the user in the first person ('I'), responding promptly and naturally. Do not include any additional information or context in your responses.
MAX_TOKENS=512
NUM_THREADS=2
LLM_TEMPERATURE=0.9
LLM_STREAM=true
LLM_RETRY_DELAY=0.5
MAX_RETRIES=3

# Model names
VAD_MODEL=pyannote/segmentation-3.0
WHISPER_MODEL=openai/whisper-tiny.en
LLM_MODEL=qwen2.5:0.5b-instruct-q8_0
TTS_MODEL=kokoro.pth

# VAD settings
VAD_MIN_DURATION_ON=0.1
VAD_MIN_DURATION_OFF=0.1

# Audio settings
CHUNK=256
FORMAT=pyaudio.paFloat32
CHANNELS=1
RATE=16000
OUTPUT_SAMPLE_RATE=24000
RECORD_DURATION=5
SILENCE_THRESHOLD=0.01
INTERRUPTION_THRESHOLD=0.01
MAX_SILENCE_DURATION=1
SPEECH_CHECK_TIMEOUT=0.1
SPEECH_CHECK_THRESHOLD=0.02
ROLLING_BUFFER_TIME=0.5
TARGET_SIZE=25
PLAYBACK_DELAY=0.001
FIRST_SENTENCE_SIZE=2
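
The template above is a flat list of `KEY=VALUE` settings, all stored as strings. The project lists `python-dotenv` and `pydantic_settings` in `requirements.txt`, which presumably handle the real loading; the snippet below is only a standard-library sketch of how such values could be parsed and converted to typed settings. The helper names `parse_env` and `as_bool` are hypothetical and do not come from the repository.

```python
def parse_env(text: str) -> dict[str, str]:
    """Parse KEY=VALUE lines, skipping blank lines and '#' comment lines."""
    settings: dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Tolerate spaces around "=", e.g. "TARGET_SIZE = 25".
        settings[key.strip()] = value.strip()
    return settings


def as_bool(value: str) -> bool:
    """Interpret common truthy spellings; everything else is False."""
    return value.strip().lower() in {"1", "true", "yes", "on"}


# A few keys copied from the template, used here as sample input.
sample = """
# Audio settings
RATE=16000
SILENCE_THRESHOLD=0.01
LLM_STREAM=true
TARGET_SIZE = 25
"""

env = parse_env(sample)
rate = int(env["RATE"])
silence_threshold = float(env["SILENCE_THRESHOLD"])
stream = as_bool(env["LLM_STREAM"])
target_size = int(env["TARGET_SIZE"])
```

Every value arrives as a string, so each setting needs an explicit conversion (`int`, `float`, or a boolean helper) before use.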

.gitignore

ADDED

@@ -0,0 +1,166 @@
# Byte-compiled / optimized / DLL files
__pycache__/
*.py[cod]
*$py.class
output/
test/
test.py
data/logs/
examples/
generated_audio/
# C extensions
*.so
.vscode/

# Distribution / packaging
.Python
build/
develop-eggs/
dist/
downloads/
eggs/
.eggs/
lib/
lib64/
parts/
sdist/
var/
wheels/
share/python-wheels/
*.egg-info/
.installed.cfg
*.egg
MANIFEST

# PyInstaller
# Usually these files are written by a python script from a template
# before PyInstaller builds the exe, so as to inject date/other infos into it.
*.manifest
*.spec

# Installer logs
pip-log.txt
pip-delete-this-directory.txt

# Unit test / coverage reports
htmlcov/
.tox/
.nox/
.coverage
.coverage.*
.cache
nosetests.xml
coverage.xml
*.cover
*.py,cover
.hypothesis/
.pytest_cache/
cover/

# Translations
*.mo
*.pot

# Django stuff:
*.log
local_settings.py
db.sqlite3
db.sqlite3-journal

# Flask stuff:
instance/
.webassets-cache

# Scrapy stuff:
.scrapy

# Sphinx documentation
docs/_build/

# PyBuilder
.pybuilder/
target/

# Jupyter Notebook
.ipynb_checkpoints

# IPython
profile_default/
ipython_config.py

# pyenv
# For a library or package, you might want to ignore these files since the code is
# intended to run in multiple environments; otherwise, check them in:
# .python-version

# pipenv
# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.
# However, in case of collaboration, if having platform-specific dependencies or dependencies
# having no cross-platform support, pipenv may install dependencies that don't work, or not
# install all needed dependencies.
#Pipfile.lock

# poetry
# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.
# This is especially recommended for binary packages to ensure reproducibility, and is more
# commonly ignored for libraries.
# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control
#poetry.lock

# pdm
# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.
#pdm.lock
# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it
# in version control.
# https://pdm.fming.dev/#use-with-ide
.pdm.toml

# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm
__pypackages__/

# Celery stuff
celerybeat-schedule
celerybeat.pid

# SageMath parsed files
*.sage.py

# Environments
.env
.venv
env/
venv/
ENV/
env.bak/
venv.bak/

# Spyder project settings
.spyderproject
.spyproject

# Rope project settings
.ropeproject

# mkdocs documentation
/site

# mypy
.mypy_cache/
.dmypy.json
dmypy.json

# Pyre type checker
.pyre/

# pytype static type analyzer
.pytype/

# Cython debug symbols
cython_debug/

# PyCharm
# JetBrains specific template is maintained in a separate JetBrains.gitignore that can
# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore
# and can be added to the global gitignore or merged into this file. For a more nuclear
# option (not recommended) you can uncomment the following to ignore the entire idea folder.
#.idea/

LICENSE

ADDED

@@ -0,0 +1,21 @@
MIT License

Copyright (c) 2025 Abdullah Al Asif

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.

README.md

CHANGED

|

@@ -1,3 +1,101 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

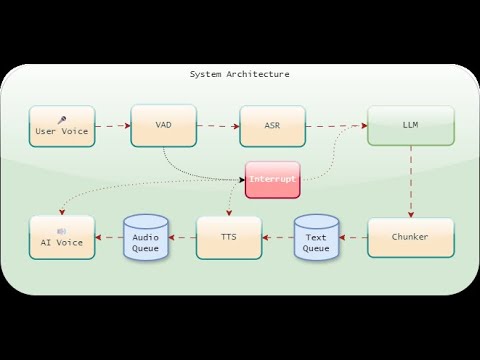

| 1 |

+

# On Device Speech to Speech Conversational AI

|

| 2 |

+

|

| 3 |

+

|

| 4 |

+

This is realtime on-device speech-to-speech AI model. It used a series to tools to achieve that. It uses a combination of voice activity detection, speech recognition, language models, and text-to-speech synthesis to create a seamless and responsive conversational AI experience. The system is designed to run on-device, ensuring low latency and minimal data usage.

|

| 5 |

+

|

<h2 style="color: yellow;">HOW TO RUN IT</h2>

1. **Prerequisites:**
   - Install Python 3.8+ (tested with 3.12)
   - Install [eSpeak NG](https://github.com/espeak-ng/espeak-ng/releases/tag/1.52.0) (required for voice synthesis)
   - Install Ollama from https://ollama.ai/

2. **Setup:**
   - Clone the repository: `git clone https://github.com/asiff00/On-Device-Speech-to-Speech-Conversational-AI.git`
   - Run `git lfs pull` to download the models and voices
   - Copy `.env.template` to `.env`
   - Add your HuggingFace token to `.env`
   - Tweak other parameters there, if needed [Optional]
   - Install requirements: `pip install -r requirements.txt`
   - Install any packages that are still missing: `pip install <package_name>`

3. **Run Ollama:**
   - Start the Ollama service
   - Run: `ollama run qwen2.5:0.5b-instruct-q8_0` or any other model of your choice

4. **Start Application:**
   - Run: `python speech_to_speech.py`
   - Wait for initialization (models loading)
   - Start talking when you see "Voice Chat Bot Ready"
   - Long press `Ctrl+C` to stop the application
</details>

We combine several models in a multi-threaded architecture, where each component operates independently and the components are connected through a queue management system to ensure performance and responsiveness.

## The flow works as follows: Loop (VAD -> Whisper -> LM -> TextChunker -> TTS)
To achieve that we use:
- **Voice Activity Detection**: Pyannote:pyannote/segmentation-3.0
- **Speech Recognition**: Whisper:whisper-tiny.en (OpenAI)
- **Language Model**: LM Studio/Ollama with qwen2.5:0.5b-instruct-q8_0
- **Voice Synthesis**: Kokoro:hexgrad/Kokoro-82M (Version 0.19, 16bit)

We use custom text processing and queues to manage data, with separate queues for text and audio. This setup allows the system to handle heavy tasks without slowing down. We also use an interrupt mechanism that lets the user interrupt the AI at any time. This makes the conversation feel more natural and responsive than a generic TTS engine.
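
The two-queue design with an interrupt flag can be sketched with the standard library alone. This is a minimal illustration, not the code in `speech_to_speech.py`: `fake_tts` and `run_pipeline` are hypothetical names, the synthesizer is replaced by a string tag, and "playback" just appends to a list.

```python
import queue
import threading


def fake_tts(text: str) -> str:
    # Placeholder for a real synthesizer call (assumption for this sketch).
    return f"<audio:{text}>"


def run_pipeline(chunks, interrupt=False):
    text_queue = queue.Queue()    # text chunks waiting for synthesis
    audio_queue = queue.Queue()   # synthesized audio waiting for playback
    interrupted = threading.Event()
    played = []
    if interrupt:
        interrupted.set()         # simulate the user speaking over the bot

    def tts_worker():
        while True:
            chunk = text_queue.get()
            if chunk is None:     # sentinel: no more text is coming
                audio_queue.put(None)
                return
            if not interrupted.is_set():
                audio_queue.put(fake_tts(chunk))

    def playback_worker():
        while True:
            audio = audio_queue.get()
            if audio is None:
                return
            if not interrupted.is_set():
                played.append(audio)  # stand-in for writing to the sound card

    workers = [
        threading.Thread(target=tts_worker),
        threading.Thread(target=playback_worker),
    ]
    for worker in workers:
        worker.start()
    for chunk in chunks:
        text_queue.put(chunk)
    text_queue.put(None)
    for worker in workers:
        worker.join()
    return played


print(run_pipeline(["Umm,", "hello there."]))
```

Because synthesis and playback run in separate threads, a slow TTS call never blocks audio that is already queued, and setting the event causes both workers to drop whatever is still pending.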

## Demo Video:
A demo video is available on YouTube: [https://youtu.be/x92FLnwf-nA](https://youtu.be/x92FLnwf-nA).

## Performance:

I ran this test on an AMD Ryzen 5600G with 16 GB of RAM, an SSD, and no GPU, achieving consistent ~2s latency. On average, it takes around 1.5s for the system to respond to a user query, measured from the point the user says the last word. I haven't tested this on a GPU, but I expect a GPU to significantly improve performance and responsiveness.

## How do we reduce latency?
### Priority based text chunking
We capitalize on the streaming output of the language model to reduce latency. Instead of waiting for the entire response to be generated, we process each piece of text as soon as it becomes available, form phrases, and send them to the TTS engine queue. We play the audio as soon as it becomes available. This way, the user gets a very fast response while the rest of the response is still being generated.
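
As a rough sketch of consuming a streamed reply, the snippet below assumes the Ollama `/api/chat` endpoint returns newline-delimited JSON objects, each carrying a `message.content` fragment and a `done` flag; this should be checked against the Ollama API documentation. The function names `iter_stream_text` and `stream_chat` are hypothetical and are not taken from the repository.

```python
import json
import urllib.request
from typing import Iterable, Iterator


def iter_stream_text(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield text fragments from newline-delimited JSON events."""
    for raw in lines:
        raw = raw.strip()
        if not raw:
            continue
        event = json.loads(raw)
        piece = event.get("message", {}).get("content", "")
        if piece:
            yield piece
        if event.get("done"):
            break


def stream_chat(url: str, model: str, prompt: str) -> Iterator[str]:
    """POST a chat request and yield fragments as they arrive (needs a running server)."""
    payload = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": True,
    }).encode("utf-8")
    request = urllib.request.Request(
        url, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        yield from iter_stream_text(response)


# Canned events so the parsing logic can be tried without a server.
canned = [
    b'{"message": {"role": "assistant", "content": "Umm,"}, "done": false}\n',
    b'{"message": {"role": "assistant", "content": " hello"}, "done": false}\n',
    b'{"message": {"role": "assistant", "content": ""}, "done": true}\n',
]
fragments = list(iter_stream_text(canned))
```

Each fragment can be handed to the chunker the moment it is yielded, which is what lets audio start before the model has finished generating.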

Our custom `TextChunker` analyzes incoming text streams from the language model and splits them into chunks suitable for the voice synthesizer. It uses a combination of sentence breaks (like periods, question marks, and exclamation points) and semantic breaks (like "and", "but", and "however") to determine the best places to split the text, ensuring natural-sounding speech output.

The `TextChunker` maintains a set of break points:
- **Sentence breaks**: `.`, `!`, `?` (highest priority)
- **Semantic breaks** with priority levels:
  - Level 4: `however`, `therefore`, `furthermore`, `moreover`, `nevertheless`
  - Level 3: `while`, `although`, `unless`, `since`
  - Level 2: `and`, `but`, `because`, `then`
- **Punctuation breaks**: `;` (4), `:` (4), `,` (3), `-` (2)

When processing text, the `TextChunker` uses a priority-based system:
1. Looks for sentence-ending punctuation first (highest priority 5)
2. Checks for semantic break words with their associated priority levels
3. Falls back to punctuation marks with lower priorities
4. Splits at target word count if no natural breaks are found
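
The priority rules above can be sketched as a single function. This is an illustrative approximation, not the repository's `TextChunker`: the name `split_chunk` is hypothetical, the tie-breaking rule (the earliest break wins among equal priorities) is an assumption, and the default `target_size` simply mirrors the `TARGET_SIZE=25` value from `.env.template`.

```python
SEMANTIC_BREAKS = {
    "however": 4, "therefore": 4, "furthermore": 4, "moreover": 4, "nevertheless": 4,
    "while": 3, "although": 3, "unless": 3, "since": 3,
    "and": 2, "but": 2, "because": 2, "then": 2,
}
PUNCTUATION_BREAKS = {";": 4, ":": 4, ",": 3, "-": 2}


def split_chunk(text, target_size=25):
    """Return (chunk, rest); chunk is None when no split is possible yet."""
    words = text.split()
    best = None  # (priority, cut index), cut index = number of words in the chunk
    for i, word in enumerate(words):
        last = word[-1]
        if last in ".!?":
            candidate = (5, i + 1)                      # cut after the sentence end
        elif last in PUNCTUATION_BREAKS:
            candidate = (PUNCTUATION_BREAKS[last], i + 1)
        elif i > 0 and word.lower() in SEMANTIC_BREAKS:
            candidate = (SEMANTIC_BREAKS[word.lower()], i)  # cut before the word
        else:
            continue
        # Strictly greater: among equal priorities the earliest break is kept.
        if best is None or candidate[0] > best[0]:
            best = candidate
    if best is None:
        if len(words) < target_size:
            return None, text                           # keep buffering
        best = (0, target_size)                         # forced split at word count
    cut = best[1]
    return " ".join(words[:cut]), " ".join(words[cut:])


print(split_chunk("I like tea, but coffee is better. Anyway"))
print(split_chunk("I like tea but coffee"))
```

In a streaming setting this would be called on a growing buffer: whenever it returns a chunk, the chunk goes to the TTS queue and the remainder stays in the buffer.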

The text chunking method significantly reduces perceived latency by processing and delivering the first chunk of text as soon as it becomes available. Consider a hypothetical system in which the language model produces a response of N words at a rate of R words per second. Waiting for the complete response would introduce a delay of N/R seconds before any audio is produced. With text chunking, the system can start processing the first M words as soon as they are ready (after M/R seconds), while the remaining words continue to be generated. The user therefore hears the initial part of the response after roughly M/R seconds (plus the time needed to synthesize that first chunk), while the rest streams in naturally.
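
To make the N/R versus M/R comparison concrete, here is a small calculation with made-up numbers. The values of N, M, and R are purely illustrative, and TTS synthesis time is ignored; the function name `generation_wait` is hypothetical.

```python
def generation_wait(words: int, words_per_second: float) -> float:
    """Seconds until the model has produced the given number of words."""
    return words / words_per_second


N = 20     # words in the full response (illustrative)
M = 2      # words in the first chunk (illustrative)
R = 10.0   # generation rate in words per second (illustrative)

wait_for_full_response = generation_wait(N, R)   # N / R
wait_for_first_chunk = generation_wait(M, R)     # M / R
saved = wait_for_full_response - wait_for_first_chunk

print(f"full response: {wait_for_full_response:.1f}s, "
      f"first chunk: {wait_for_first_chunk:.1f}s, saved: {saved:.1f}s")
```

The saving grows with the response length N and shrinks as the first chunk M gets longer, which is why a very short first chunk matters so much.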

### Leading filler word LLM Prompting
We use another little trick in the LLM prompt to speed up the system’s first response. We ask the LLM to start its reply with filler words like “umm,” “so,” or “well.” These words play a special role in language: they create natural pauses and breaks. Since each filler is a single word, it takes only milliseconds to convert to audio. When we apply our chunking rules, the system splits the response at the filler word (e.g., “umm,”) and sends that tiny chunk to the TTS engine. This lets the bot play the audio for “umm” almost instantly, reducing perceived latency. The filler words act as natural “bridges” that mask processing delays. Even a short “umm” gives the illusion of a fluid conversation while the system generates the rest of the response in the background. Longer chunks after the filler word might take more time to process, but the initial pause feels intentional and human-like.

We have a fallback for cases where the LLM fails to start its response with a filler. In those cases, we force a break after 2 to 5 words. This costs a bit of choppiness at the beginning, but that feels less painful than the system taking a long time to give its first response.
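
A minimal sketch of the first-chunk rule described above, under stated assumptions: the filler list, the function name `first_chunk`, and the default fallback of two words are illustrative (the default mirrors `FIRST_SENTENCE_SIZE=2` in `.env.template`, which is assumed, not confirmed, to control this behaviour).

```python
FILLERS = {"umm", "so", "well"}


def first_chunk(words, fallback_size=2):
    """Return (first chunk to speak, remaining words)."""
    if not words:
        return "", []
    # Compare without trailing punctuation, so "Umm," matches "umm".
    head = words[0].rstrip(",.!?").lower()
    if head in FILLERS:
        return words[0], words[1:]
    # No filler: force an early break after a few words.
    cut = min(fallback_size, len(words))
    return " ".join(words[:cut]), words[cut:]


print(first_chunk("Umm, I think it will rain today.".split()))
print(first_chunk("The forecast says rain today.".split()))
```

Either way the synthesizer receives a very short first chunk, so playback can begin while the rest of the reply is still being generated.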

**In practice,** this approach can reduce perceived latency by roughly 50-70%, depending on the length of the response and the speed of the language model. For example, in a typical conversation where responses average 15-20 words, our techniques can bring the initial response time down from 1.5-2 seconds to just `0.5-0.7` seconds, making the interaction feel much more natural and immediate.

## Resources
This project utilizes the following resources:
* **Text-to-Speech Model:** [Kokoro](https://huggingface.co/hexgrad/Kokoro-82M)
* **Speech-to-Text Model:** [Whisper](https://huggingface.co/openai/whisper-tiny.en)
* **Voice Activity Detection Model:** [Pyannote](https://huggingface.co/pyannote/segmentation-3.0)
* **Large Language Model Server:** [Ollama](https://ollama.ai/)
* **Fallback Text-to-Speech Engine:** [eSpeak NG](https://github.com/espeak-ng/espeak-ng/releases/tag/1.52.0)

## Acknowledgements
This project draws inspiration and guidance from the following articles and repositories, among others:
* [Realtime speech to speech conversation with MiniCPM-o](https://github.com/OpenBMB/MiniCPM-o)
* [A Comparative Guide to OpenAI and Ollama APIs](https://medium.com/@zakkyang/a-comparative-guide-to-openai-and-ollama-apis-with-cheathsheet-5aae6e515953)
* [Building Production-Ready TTS with Kokoro-82M](https://medium.com/@simeon.emanuilov/kokoro-82m-building-production-ready-tts-with-82m-parameters-unfoldai-98e36ff286b9)
* [Kokoro-82M: The Best TTS Model in Just 82 Million Parameters](https://medium.com/data-science-in-your-pocket/kokoro-82m-the-best-tts-model-in-just-82-million-parameters-512b4ba4f94c)
* [StyleTTS2 Model Implementation](https://github.com/yl4579/StyleTTS2/blob/main/models.py)

assets/system_architecture.svg

ADDED


assets/timing_chart.png

ADDED


assets/video_demo.mov

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:4aa16650f035a094e65d759ac07e9050ccf22204f77816776b957cea203caf9c
size 11758861

data/models/kokoro.pth

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:70cbf37f84610967f2ca72dadb95456fdd8b6c72cdd6dc7372c50f525889ff0c
size 163731194

data/voices/af.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:fad4192fd8a840f925b0e3fc2be54e20531f91a9ac816a485b7992ca0bd83ebf
size 524355

data/voices/af_alloy.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:6d877149dd8b348fbad12e5845b7e43d975390e9f3b68a811d1d86168bef5aa3
size 523425

data/voices/af_aoede.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:c03bd1a4c3716c2d8eaa3d50022f62d5c31cfbd6e15933a00b17fefe13841cc4
size 523425

data/voices/af_bella.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:2828c6c2f94275ef3441a2edfcf48293298ee0f9b56ce70fb2e344345487b922
size 524449

data/voices/af_bella_nicole.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:d41525cea0e607c8c775adad8a81faa015d5ddafcbc66d9454c5c6aaef12137a
size 524623

data/voices/af_heart.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:0ab5709b8ffab19bfd849cd11d98f75b60af7733253ad0d67b12382a102cb4ff
size 523425

data/voices/af_jessica.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:cdfdccb8cc975aa34ee6b89642963b0064237675de0e41a30ae64cc958dd4e87
size 523435

data/voices/af_kore.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:8bfbc512321c3db49dff984ac675fa5ac7eaed5a96cc31104d3a9080e179d69d
size 523420

data/voices/af_nicole.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:9401802fb0b7080c324dec1a75d60f31d977ced600a99160e095dbc5a1172692
size 524454

data/voices/af_nicole_sky.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:587f36a3a2d9f295cd5538a923747be2fe398bbd81598896bac07bbdb7ff25b0
size 524623

data/voices/af_nova.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:e0233676ddc21908c37a1f102f6b88a59e4e5c1bd764983616eb9eda629dbcd2
size 523420

data/voices/af_river.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:e149459bd9c084416b74756b9bd3418256a8b839088abb07d463730c369dab8f
size 523425

data/voices/af_sarah.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:ba7918c4ace6ace4221e7e01eb3a6d16596cba9729850551c758cd2ad3a4cd08
size 524449

data/voices/af_sarah_nicole.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:fa529793c4853a4107bb9857023a0ceb542466c664340ba0aeeb7c8570b2c51c
size 524623

data/voices/af_sky.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:9f16f1bb778de36a177ae4b0b6f1e59783d5f4d3bcecf752c3e1ee98299b335e
size 524375

data/voices/af_sky_adam.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:2fa5978fab741ccd0d2a4992e34c85a7498f61062a665257a9d9b315dca327c3
size 524464

data/voices/af_sky_emma.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:cfb3af5b8a0cbdd07d76fd201b572437ba2b048c03b65f2535a1f2810d01a99f
size 524464

data/voices/af_sky_emma_isabella.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:12852daf302220b828a49a1d9089def6ff2b81fdab0a9ee500c66b0f37a2052f
size 524509

data/voices/am_adam.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:1921528b400a553f66528c27899d95780918fe33b1ac7e2a871f6a0de475f176
size 524444

data/voices/am_michael.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:a255c9562c363103adc56c09b7daf837139d3bdaa8bd4dd74847ab1e3e8c28be
size 524459

data/voices/bf_alice.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:d292651b6af6c0d81705c2580dcb4463fccc0ff7b8d618a471dbb4e45655b3f3
size 523425

data/voices/bf_emma.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:992e6d8491b8926ef4a16205250e51a21d9924405a5d37e2db6e94adfd965c3b
size 524365

data/voices/bf_isabella.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:d0865a03931230100167f7a81d394b143c072efe2d7e4c4a87b5c54d6283f580
size 524365

data/voices/bm_george.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:7d763dfe13e934357f4d8322b718787d79e32f2181e29ca0cf6aa637d8092b96
size 524464

data/voices/bm_lewis.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:f70d9ea4d65f522f224628f06d86ea74279faae23bd7e765848a374aba916b76
size 524449

data/voices/ef_dora.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:d9d69b0f8a2b87a345f269d89639f89dfbd1a6c9da0c498ae36dd34afcf35530
size 523420

data/voices/if_sara.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:6c0b253b955fe32f1a1a86006aebe83d050ea95afd0e7be15182f087deedbf55
size 523425

data/voices/jf_alpha.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:1bf4c9dc69e45ee46183b071f4db766349aac5592acbcfeaf051018048a5d787
size 523425

data/voices/jf_gongitsune.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:1b171917f18f351e65f2bf9657700cd6bfec4e65589c297525b9cf3c20105770
size 523351

data/voices/pf_dora.pt

ADDED

@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:07e4ff987c5d5a8c3995efd15cc4f0db7c4c15e881b198d8ab7f67ecf51f5eb7
size 523425

data/voices/zf_xiaoxiao.pt

ADDED

|

@@ -0,0 +1,3 @@

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:cfaf6f2ded1ee56f1ff94fcd2b0e6cdf32e5b794bdc05b44e7439d44aef5887c

|

| 3 |

+

size 523440

|

data/voices/zf_xiaoyi.pt

ADDED

|

@@ -0,0 +1,3 @@

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:b5235dbaeef85a4c613bf78af9a88ff63c25bac5f26ba77e36186d8b7ebf05e2

|

| 3 |

+

size 523430

|
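Each three-line voice entry above is a Git LFS pointer (`version`, `oid`, `size`), not the tensor file itself. The following is a minimal sketch of how such a pointer could be read and sanity-checked. `parse_lfs_pointer` is a hypothetical helper introduced here for illustration and is not part of this commit.

```python
def parse_lfs_pointer(text: str) -> dict:
    """Parse the "key value" lines of a Git LFS pointer file.

    Hypothetical helper for illustration; not part of the repository.
    """
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        # Each pointer line is a key, one space, then the value.
        key, _, value = line.partition(" ")
        fields[key] = value

    # The oid has the form "<hash algorithm>:<hex digest>".
    algo, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "hash_algo": algo,
        "digest": digest,
        "size": int(fields["size"]),  # size of the real file in bytes
    }


# Pointer contents copied from the data/voices/zf_xiaoyi.pt entry above.
pointer = """version https://git-lfs.github.com/spec/v1
oid sha256:b5235dbaeef85a4c613bf78af9a88ff63c25bac5f26ba77e36186d8b7ebf05e2
size 523430
"""

info = parse_lfs_pointer(pointer)
print(info["hash_algo"], info["size"])
```

If a `.pt` file on disk is only a few hundred bytes and parses this way, the real weights have probably not been fetched yet; running `git lfs pull` is the usual way to replace pointers with the actual files.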

requirements.txt

ADDED

|

@@ -0,0 +1,16 @@

|

| 1 |

+

phonemizer

|

| 2 |

+

torch

|

| 3 |

+

transformers

|

| 4 |

+

scipy

|

| 5 |

+

munch

|

| 6 |

+

sounddevice

|

| 7 |

+

python-multipart

|

| 8 |

+

soundfile

|

| 9 |

+

pydantic

|

| 10 |

+

requests

|

| 11 |

+

python-dotenv

|

| 12 |

+

numpy

|

| 13 |

+

pyaudio

|

| 14 |

+

pyannote.audio

|

| 15 |

+

torch_audiomentations

|

| 16 |

+

pydantic_settings

|

speech_to_speech.py

ADDED

|

@@ -0,0 +1,334 @@

|

| 1 |

+

import msvcrt

|

| 2 |

+

import traceback

|

| 3 |

+

import time

|

| 4 |

+

import requests

|

| 5 |

+

import time

|

| 6 |

+

from transformers import WhisperProcessor, WhisperForConditionalGeneration

|

| 7 |

+

from src.utils.config import settings

|

| 8 |

+

from src.utils import (

|

| 9 |

+

VoiceGenerator,

|

| 10 |

+

get_ai_response,

|

| 11 |

+

play_audio_with_interrupt,

|

| 12 |

+

init_vad_pipeline,

|

| 13 |

+

detect_speech_segments,

|

| 14 |

+

record_continuous_audio,

|

| 15 |

+

check_for_speech,

|

| 16 |

+

transcribe_audio,

|

| 17 |

+

)

|

| 18 |

+

from src.utils.audio_queue import AudioGenerationQueue

|

| 19 |

+

from src.utils.llm import parse_stream_chunk

|

| 20 |

+

import threading

|

| 21 |

+

from src.utils.text_chunker import TextChunker

|

| 22 |

+

|

| 23 |

+

settings.setup_directories()

|

| 24 |

+

timing_info = {

|

| 25 |

+

"vad_start": None,

|

| 26 |

+

"transcription_start": None,

|

| 27 |

+

"llm_first_token": None,

|

| 28 |

+

"audio_queued": None,

|

| 29 |

+

"first_audio_play": None,

|

| 30 |

+

"playback_start": None,

|

| 31 |

+

"end": None,

|

| 32 |

+

"transcription_duration": None,

|

| 33 |

+

}

|

| 34 |

+

|

| 35 |

+

|

| 36 |

+

def process_input(

|

| 37 |

+

session: requests.Session,

|

| 38 |

+

user_input: str,

|

| 39 |

+

messages: list,

|

| 40 |

+

generator: VoiceGenerator,

|

| 41 |

+

speed: float,

|

| 42 |

+

) -> tuple[bool, None]:

|

| 43 |

+

"""Processes user input, generates a response, and handles audio output.

|

| 44 |

+

|

| 45 |

+

Args:

|

| 46 |

+

session (requests.Session): The requests session to use.

|

| 47 |

+

user_input (str): The user's input text.

|

| 48 |

+

messages (list): The list of messages to send to the LLM.

|

| 49 |

+

generator (VoiceGenerator): The voice generator object.

|

| 50 |

+

speed (float): The playback speed.

|

| 51 |

+

|

| 52 |

+

Returns:

|

| 53 |

+

tuple[bool, None]: A tuple containing a boolean indicating if the process was interrupted and None.

|

| 54 |

+

"""

|

| 55 |

+

global timing_info

|

| 56 |

+

timing_info = {k: None for k in timing_info}

|

| 57 |

+

timing_info["vad_start"] = time.perf_counter()

|

| 58 |

+

|

| 59 |

+

messages.append({"role": "user", "content": user_input})

|

| 60 |

+

print("\nThinking...")

|

| 61 |

+

start_time = time.time()

|

| 62 |

+

try:

|

| 63 |

+

response_stream = get_ai_response(

|

| 64 |

+

session=session,

|

| 65 |

+

messages=messages,

|

| 66 |

+

llm_model=settings.LLM_MODEL,

|

| 67 |

+

llm_url=settings.OLLAMA_URL,

|

| 68 |

+

max_tokens=settings.MAX_TOKENS,

|

| 69 |

+

stream=True,

|

| 70 |

+

)

|

| 71 |

+

|

| 72 |

+

if not response_stream:

|

| 73 |

+

print("Failed to get AI response stream.")

|

| 74 |

+

return False, None

|

| 75 |

+

|

| 76 |

+

audio_queue = AudioGenerationQueue(generator, speed)

|

| 77 |

+

audio_queue.start()

|

| 78 |

+

chunker = TextChunker()

|

| 79 |

+

complete_response = []

|

| 80 |

+

|

| 81 |

+

playback_thread = threading.Thread(

|

| 82 |

+

target=lambda: audio_playback_worker(audio_queue)

|

| 83 |

+

)

|

| 84 |

+

playback_thread.daemon = True

|

| 85 |

+

playback_thread.start()

|

| 86 |

+

|

| 87 |

+

for chunk in response_stream:

|

| 88 |

+

data = parse_stream_chunk(chunk)

|

| 89 |

+

if not data or "choices" not in data:

|

| 90 |

+

continue

|

| 91 |

+

|

| 92 |

+

choice = data["choices"][0]

|

| 93 |

+

if "delta" in choice and "content" in choice["delta"]:

|

| 94 |

+

content = choice["delta"]["content"]

|

| 95 |

+

if content:

|

| 96 |

+

if not timing_info["llm_first_token"]:

|

| 97 |

+

timing_info["llm_first_token"] = time.perf_counter()

|

| 98 |

+

print(content, end="", flush=True)

|

| 99 |

+

chunker.current_text.append(content)

|

| 100 |

+

|

| 101 |

+

text = "".join(chunker.current_text)

|

| 102 |

+

if chunker.should_process(text):

|

| 103 |

+

if not timing_info["audio_queued"]:

|

| 104 |

+

timing_info["audio_queued"] = time.perf_counter()

|

| 105 |

+

remaining = chunker.process(text, audio_queue)

|

| 106 |

+

chunker.current_text = [remaining]

|

| 107 |

+

complete_response.append(text[: len(text) - len(remaining)])

|

| 108 |

+

|

| 109 |

+

if choice.get("finish_reason") == "stop":

|

| 110 |

+

final_text = "".join(chunker.current_text).strip()

|

| 111 |

+

if final_text:

|

| 112 |

+

chunker.process(final_text, audio_queue)

|

| 113 |

+

complete_response.append(final_text)

|

| 114 |

+

break

|

| 115 |

+

|

| 116 |

+

messages.append({"role": "assistant", "content": " ".join(complete_response)})

|

| 117 |

+

print()

|

| 118 |

+

|

| 119 |

+

time.sleep(0.1)

|

| 120 |

+

audio_queue.stop()

|

| 121 |

+

playback_thread.join()

|

| 122 |

+

|

| 123 |

+

def playback_wrapper():

|

| 124 |

+

timing_info["playback_start"] = time.perf_counter()

|

| 125 |

+

result = audio_playback_worker(audio_queue)

|

| 126 |

+

return result

|

| 127 |

+

|

| 128 |

+

playback_thread = threading.Thread(target=playback_wrapper)

|

| 129 |

+

|

| 130 |

+

timing_info["end"] = time.perf_counter()

|

| 131 |

+

print_timing_chart(timing_info)

|

| 132 |

+

return False, None

|

| 133 |

+

|

| 134 |

+

except Exception as e:

|

| 135 |

+

print(f"\nError during streaming: {str(e)}")

|

| 136 |

+

if "audio_queue" in locals():

|

| 137 |

+

audio_queue.stop()

|

| 138 |

+

return False, None

|

| 139 |

+

|

| 140 |

+

|

| 141 |

+

def audio_playback_worker(audio_queue) -> tuple[bool, None]:

|

| 142 |

+

"""Manages audio playback in a separate thread, handling interruptions.

|

| 143 |

+

|

| 144 |

+

Args:

|

| 145 |

+

audio_queue (AudioGenerationQueue): The audio queue object.

|

| 146 |

+

|

| 147 |

+

Returns:

|

| 148 |

+

tuple[bool, None]: A tuple containing a boolean indicating if the playback was interrupted and the interrupt audio data.

|

| 149 |

+

"""

|

| 150 |

+

global timing_info

|

| 151 |

+

was_interrupted = False

|

| 152 |

+

interrupt_audio = None

|

| 153 |

+

|

| 154 |

+

try:

|

| 155 |

+

while True:

|

| 156 |

+

speech_detected, audio_data = check_for_speech()

|

| 157 |

+

if speech_detected:

|

| 158 |

+

was_interrupted = True

|

| 159 |

+

interrupt_audio = audio_data

|

| 160 |

+

break

|

| 161 |

+

|

| 162 |

+

audio_data, _ = audio_queue.get_next_audio()

|

| 163 |

+

if audio_data is not None:

|

| 164 |

+

if not timing_info["first_audio_play"]:

|

| 165 |

+

timing_info["first_audio_play"] = time.perf_counter()

|

| 166 |

+

|

| 167 |

+

was_interrupted, interrupt_data = play_audio_with_interrupt(audio_data)

|

| 168 |

+

if was_interrupted:

|

| 169 |

+

interrupt_audio = interrupt_data

|

| 170 |

+

break

|

| 171 |

+

else:

|

| 172 |

+

time.sleep(settings.PLAYBACK_DELAY)

|

| 173 |

+

|

| 174 |

+

if (

|

| 175 |

+

not audio_queue.is_running

|

| 176 |

+

and audio_queue.sentence_queue.empty()

|

| 177 |

+

and audio_queue.audio_queue.empty()

|

| 178 |

+

):

|

| 179 |

+

break

|

| 180 |

+

|

| 181 |

+

except Exception as e:

|

| 182 |

+

print(f"Error in audio playback: {str(e)}")

|

| 183 |

+

|

| 184 |

+

return was_interrupted, interrupt_audio

|

| 185 |

+

|

| 186 |

+

|

| 187 |

+

def main():

|

| 188 |

+

"""Main function to run the voice chat bot."""

|

| 189 |

+

with requests.Session() as session:

|

| 190 |

+

try:

|

| 191 |

+

# Reuse the session opened by the enclosing "with" block instead of creating a second one.

|

| 192 |

+

generator = VoiceGenerator(settings.MODELS_DIR, settings.VOICES_DIR)

|

| 193 |

+

messages = [{"role": "system", "content": settings.DEFAULT_SYSTEM_PROMPT}]

|

| 194 |

+

print("\nInitializing Whisper model...")

|

| 195 |

+

whisper_processor = WhisperProcessor.from_pretrained(settings.WHISPER_MODEL)

|

| 196 |

+

whisper_model = WhisperForConditionalGeneration.from_pretrained(

|

| 197 |

+

settings.WHISPER_MODEL

|

| 198 |

+

)

|

| 199 |

+

print("\nInitializing Voice Activity Detection...")

|

| 200 |

+

vad_pipeline = init_vad_pipeline(settings.HUGGINGFACE_TOKEN)

|

| 201 |

+

print("\n=== Voice Chat Bot Initializing ===")

|

| 202 |

+

print("Device being used:", generator.device)

|

| 203 |

+

print("\nInitializing voice generator...")

|

| 204 |

+

result = generator.initialize(settings.TTS_MODEL, settings.VOICE_NAME)

|

| 205 |

+

print(result)

|

| 206 |

+

speed = settings.SPEED

|

| 207 |

+

try:

|

| 208 |

+

print("\nWarming up the LLM model...")

|

| 209 |

+

health = session.get("http://localhost:11434", timeout=3)

|

| 210 |

+

if health.status_code != 200:

|

| 211 |

+

print("Ollama not running! Start it first.")

|

| 212 |

+

return

|

| 213 |

+

response_stream = get_ai_response(

|

| 214 |

+

session=session,

|

| 215 |

+

messages=[

|

| 216 |

+

{"role": "system", "content": settings.DEFAULT_SYSTEM_PROMPT},

|

| 217 |

+

{"role": "user", "content": "Hi!"},

|

| 218 |

+

],

|

| 219 |

+

llm_model=settings.LLM_MODEL,

|

| 220 |

+

llm_url=settings.OLLAMA_URL,

|

| 221 |

+

max_tokens=settings.MAX_TOKENS,

|

| 222 |

+

stream=False,

|

| 223 |

+

)

|

| 224 |

+

if not response_stream:

|

| 225 |

+

print("Failed to initialize the AI model!")

|

| 226 |

+

return

|

| 227 |

+

except requests.RequestException as e:

|

| 228 |

+

print(f"Warmup failed: {str(e)}")

|

| 229 |

+

|

| 230 |

+

print("\n\n=== Voice Chat Bot Ready ===")

|

| 231 |

+

print("The bot is now listening for speech.")

|

| 232 |

+

print("Just start speaking, and I'll respond automatically!")

|

| 233 |

+

print("You can interrupt me anytime by starting to speak.")

|

| 234 |

+

while True:

|

| 235 |

+

try:

|

| 236 |

+

if msvcrt.kbhit():

|

| 237 |

+

user_input = input("\nYou (text): ").strip()

|

| 238 |

+

|

| 239 |

+

if user_input.lower() == "quit":

|

| 240 |

+

print("Goodbye!")

|

| 241 |

+

break

|

| 242 |

+

|

| 243 |

+

audio_data = record_continuous_audio()

|

| 244 |

+

if audio_data is not None:

|

| 245 |

+

speech_segments = detect_speech_segments(

|

| 246 |

+

vad_pipeline, audio_data

|

| 247 |

+

)

|

| 248 |

+

|

| 249 |

+

if speech_segments is not None:

|

| 250 |

+

print("\nTranscribing detected speech...")

|

| 251 |

+

timing_info["transcription_start"] = time.perf_counter()

|

| 252 |

+

|

| 253 |

+

user_input = transcribe_audio(

|

| 254 |

+

whisper_processor, whisper_model, speech_segments

|

| 255 |

+

)

|

| 256 |

+

|

| 257 |

+

timing_info["transcription_duration"] = (

|

| 258 |

+

time.perf_counter() - timing_info["transcription_start"]

|

| 259 |

+

)

|

| 260 |

+

if user_input.strip():

|

| 261 |

+

print(f"You (voice): {user_input}")

|

| 262 |

+

was_interrupted, speech_data = process_input(

|

| 263 |

+

session, user_input, messages, generator, speed

|

| 264 |

+

)

|

| 265 |

+

if was_interrupted and speech_data is not None:

|

| 266 |

+

speech_segments = detect_speech_segments(

|

| 267 |

+

vad_pipeline, speech_data

|

| 268 |

+

)

|

| 269 |

+

if speech_segments is not None:

|

| 270 |

+

print("\nTranscribing interrupted speech...")

|

| 271 |

+

user_input = transcribe_audio(

|

| 272 |

+

whisper_processor,

|

| 273 |

+

whisper_model,

|

| 274 |

+

speech_segments,

|

| 275 |

+

)

|

| 276 |

+

if user_input.strip():

|

| 277 |

+

print(f"You (voice): {user_input}")

|

| 278 |

+

process_input(