Update README.md

README.md

|

@@ -1,12 +1,26 @@

|

|

| 1 |

-

---

|

| 2 |

-

base_model:

|

| 3 |

-

- meta-llama/Meta-Llama-3-8B

|

| 4 |

-

library_name: transformers

|

| 5 |

-

tags:

|

| 6 |

-

- mergekit

|

| 7 |

-

- merge

|

| 8 |

-

|

| 9 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 10 |

# model

|

| 11 |

|

| 12 |

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

|

|

@@ -37,4 +51,4 @@ slices:

|

|

| 37 |

merge_method: passthrough

|

| 38 |

dtype: bfloat16

|

| 39 |

|

| 40 |

-

```

|

|

|

|

| 1 |

+

---

|

| 2 |

+

base_model:

|

| 3 |

+

- meta-llama/Meta-Llama-3-8B

|

| 4 |

+

library_name: transformers

|

| 5 |

+

tags:

|

| 6 |

+

- mergekit

|

| 7 |

+

- merge

|

| 8 |

+

- llama3

|

| 9 |

+

license: llama3

|

| 10 |

+

language:

|

| 11 |

+

- en

|

| 12 |

+

---

|

| 13 |

+

|

| 14 |

+

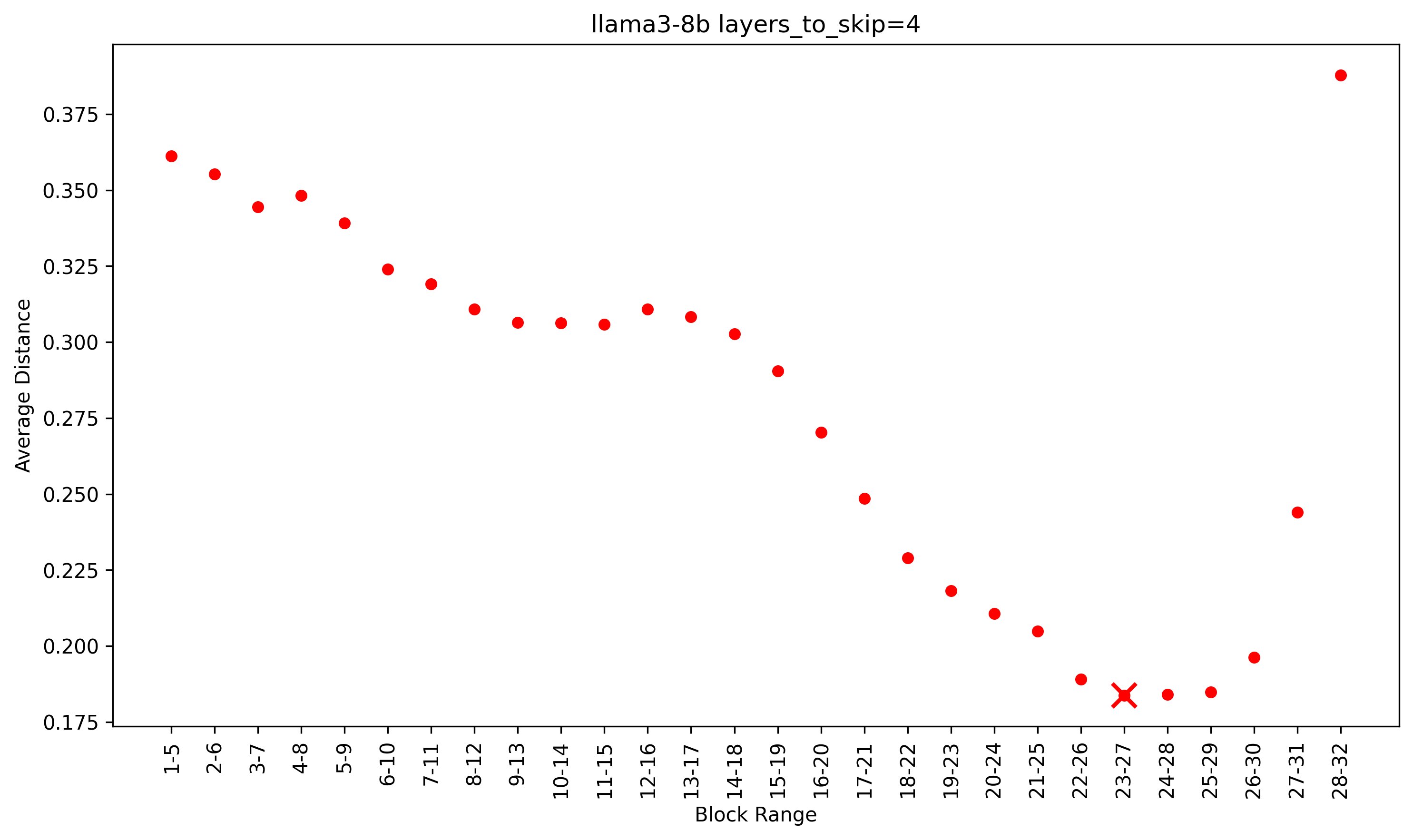

Meta's Llama 3 8B pruned to 7B parameters(w/ 28 layers). Layers to prune selected using PruneMe repo on Github.

|

| 15 |

+

|

| 16 |

+

- layers_to_skip = 4

|

| 17 |

+

- Layer 23 to 27 has the minimum average distance of 0.18376044921875

|

| 18 |

+

|

| 19 |

+

- [ ] To Do : Post pruning training.

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

|

| 24 |

# model

|

| 25 |

|

| 26 |

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

|

|

|

|

| 51 |

merge_method: passthrough

|

| 52 |

dtype: bfloat16

|

| 53 |

|

| 54 |

+

```
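The README reports a "minimum average distance" without defining it. Our reading, which the README itself does not state, is that PruneMe uses the angular distance from Gromov et al., "The Unreasonable Ineffectiveness of the Deeper Layers". In this reading, $x^{(\ell)}$ is the last-token hidden state entering layer $\ell$ and $n$ is `layers_to_skip`. The distance is averaged over a calibration dataset, and the block start $\ell^{*}$ with the smallest mean is chosen. Treat the following as a sketch under those assumptions:

$$
d\left(x^{(\ell)}, x^{(\ell+n)}\right) = \frac{1}{\pi}\arccos\!\left(\frac{x^{(\ell)} \cdot x^{(\ell+n)}}{\lVert x^{(\ell)} \rVert \, \lVert x^{(\ell+n)} \rVert}\right),
\qquad
\ell^{*} = \arg\min_{0 \le \ell \le L-n} \; \mathbb{E}_{\text{data}}\!\left[ d\left(x^{(\ell)}, x^{(\ell+n)}\right) \right]
$$

Under this reading, $L = 32$ and $n = 4$ give $\ell^{*} = 23$. The reported value of 0.18376044921875 then corresponds to an average angle of roughly $0.184\pi$, about 33 degrees, between the hidden states before and after the four-layer block.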

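The diff does not show the README's actual `slices:` entries. The following is only a hypothetical sketch of a mergekit passthrough config that would drop the flagged block. It assumes two things:

- mergekit's `layer_range` is end-exclusive.
- "Layers 23 to 27" refers to the four layers with indices 23 through 26.

Keeping `[0, 23]` and `[27, 32]` of the 32-layer Meta-Llama-3-8B leaves 23 + 5 = 28 layers, which matches the layer count given in the README.

```yaml
# Hypothetical sketch: the real slices in this README are not visible in the diff.
slices:
  - sources:
      - model: meta-llama/Meta-Llama-3-8B
        layer_range: [0, 23]   # keeps layers 0-22 (end index excluded)
  - sources:
      - model: meta-llama/Meta-Llama-3-8B
        layer_range: [27, 32]  # keeps layers 27-31, so layers 23-26 are dropped
merge_method: passthrough
dtype: bfloat16
```

`passthrough` copies the selected layer slices unchanged instead of averaging weights. That is why it suits pruning a contiguous block, and why the README lists post-pruning training as an open task.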