daliprf

commited on

Commit

·

3dbf98e

1

Parent(s):

f7f2fc9

init

Browse files- .gitattributes +3 -0

- Data_custom_generator.py +56 -0

- README.md +150 -3

- cnn_model.py +94 -0

- configuration.py +77 -0

- custom_Losses.py +63 -0

- image_utility.py +585 -0

- img_printer.py +81 -0

- main.py +45 -0

- models/students/student_Net_300w.h5 +3 -0

- models/students/student_Net_COFW.h5 +3 -0

- models/students/student_Net_WFLW.h5 +3 -0

- pca_utility.py +286 -0

- requirements.txt +23 -0

- samples/KDLoss-1.jpg +3 -0

- samples/KD_300W_samples-1.jpg +3 -0

- samples/KD_L2_L1-1.jpg +3 -0

- samples/KD_WFLW_samples-1.jpg +3 -0

- samples/KD_cofw_samples-1.jpg +3 -0

- samples/general_framework-1.jpg +3 -0

- samples/loss_weight-1.jpg +3 -0

- samples/main_loss-1.jpg +3 -0

- samples/teacher_arch-1.jpg +3 -0

- student_train.py +292 -0

- teacher_trainer.py +215 -0

- test.py +40 -0

.gitattributes

CHANGED

|

@@ -29,3 +29,6 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 29 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 30 |

*.zstandard filter=lfs diff=lfs merge=lfs -text

|

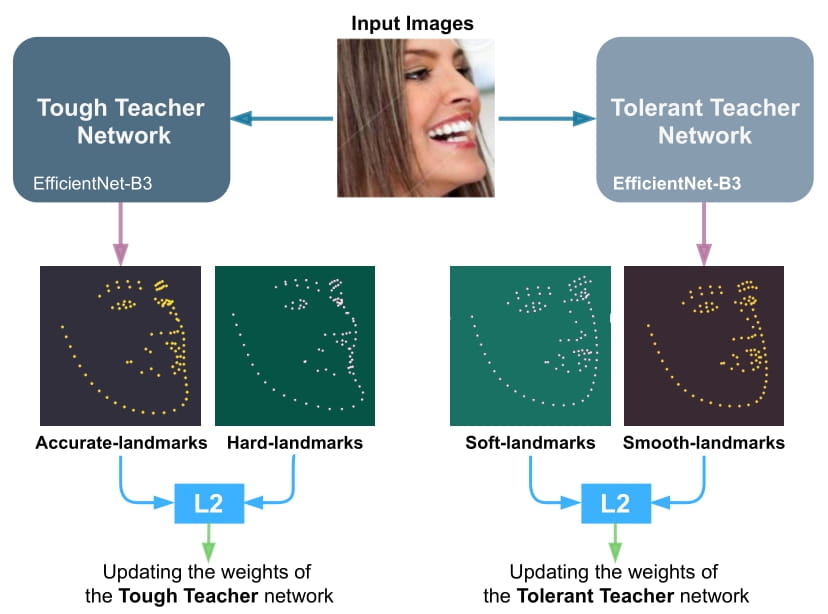

| 31 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

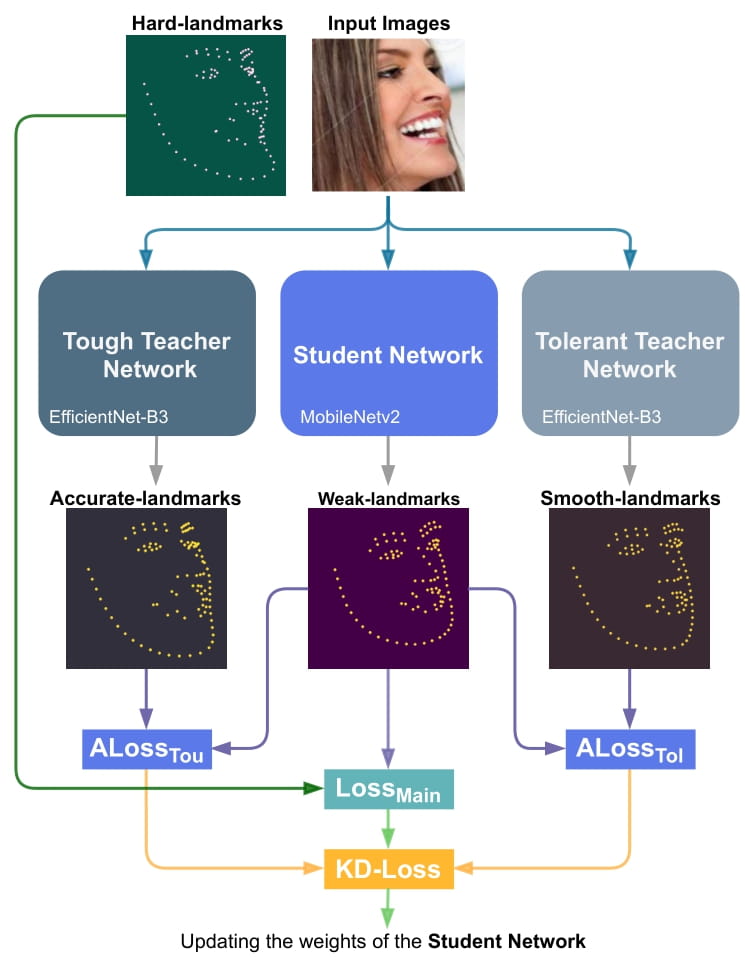

|

|

|

|

|

|

|

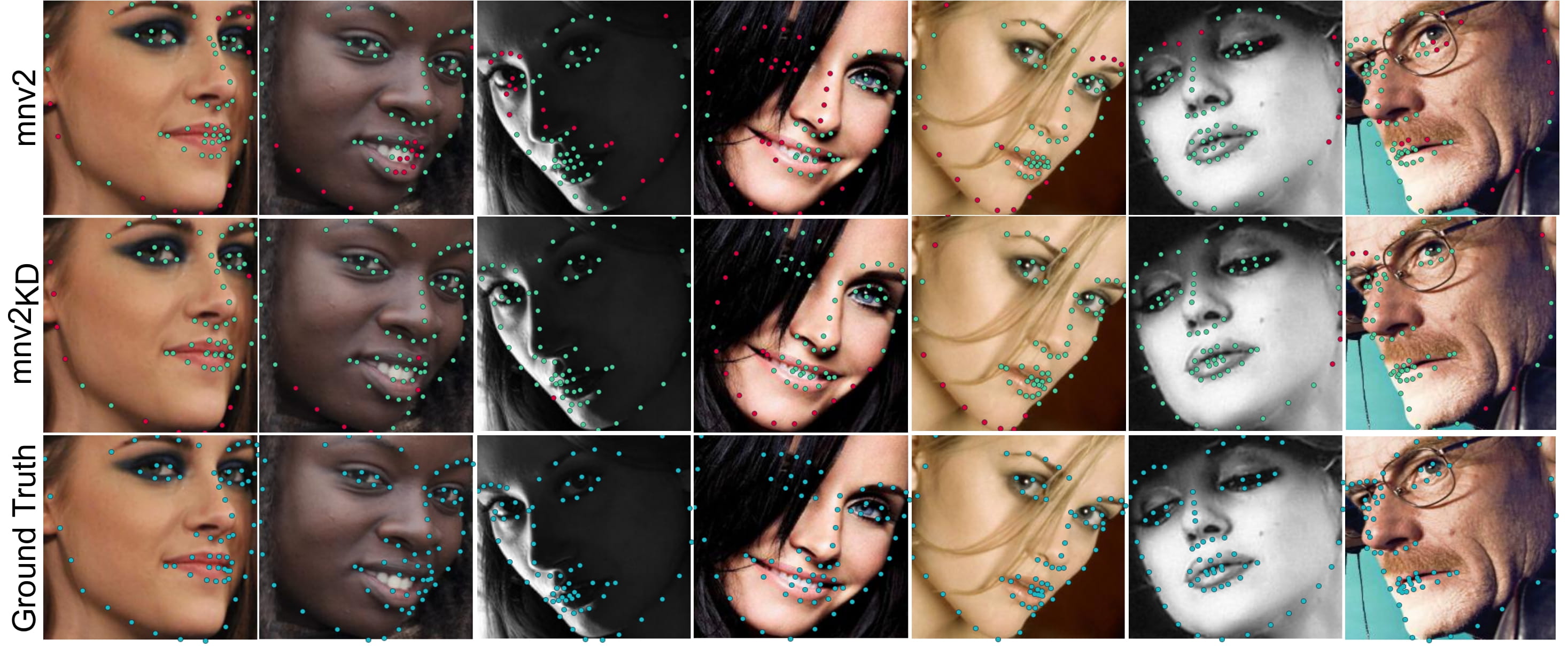

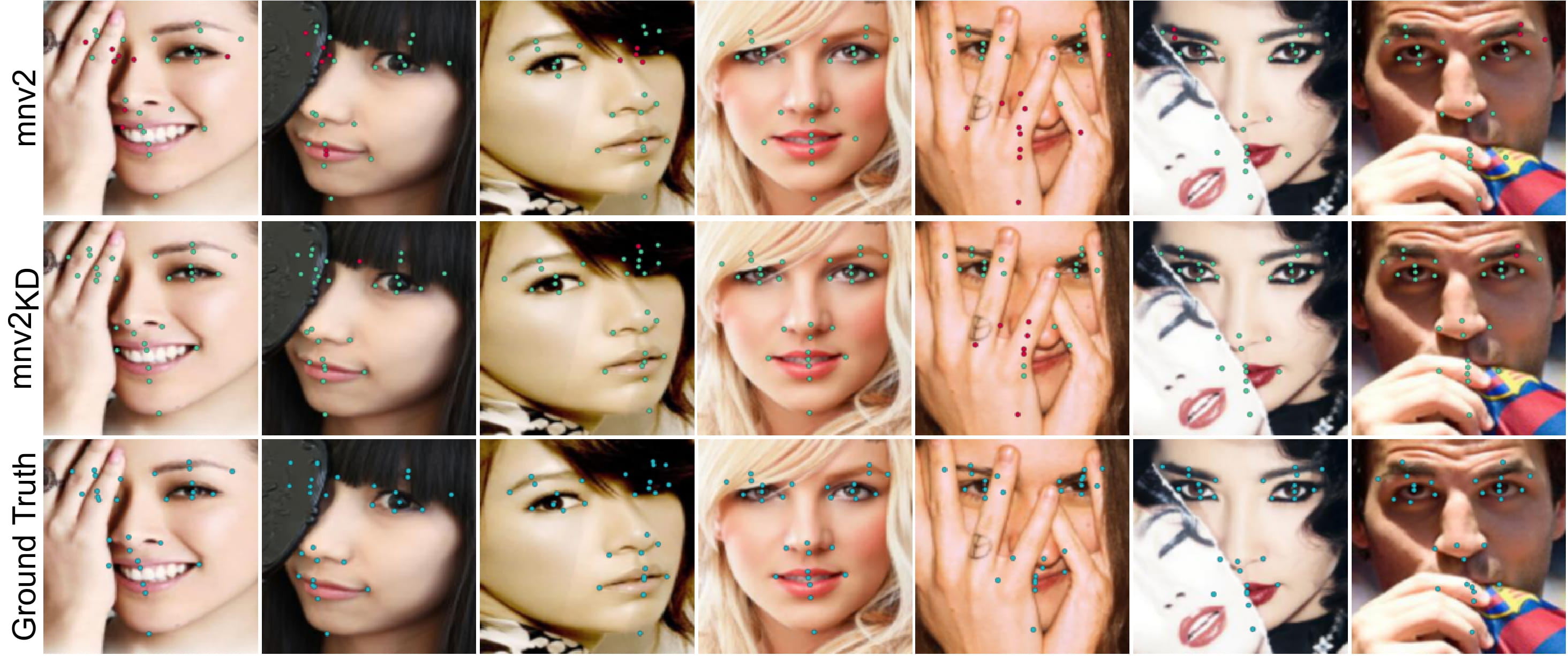

|

|

| 29 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 30 |

*.zstandard filter=lfs diff=lfs merge=lfs -text

|

| 31 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 32 |

+

*.pdf filter=lfs diff=lfs merge=lfs -text

|

| 33 |

+

*.png filter=lfs diff=lfs merge=lfs -text

|

| 34 |

+

*.jpg filter=lfs diff=lfs merge=lfs -text

|

Data_custom_generator.py

ADDED

|

@@ -0,0 +1,56 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from skimage.io import imread

|

| 2 |

+

from skimage.transform import resize

|

| 3 |

+

import numpy as np

|

| 4 |

+

import tensorflow as tf

|

| 5 |

+

import keras

|

| 6 |

+

from skimage.transform import resize

|

| 7 |

+

from tf_record_utility import TFRecordUtility

|

| 8 |

+

from configuration import DatasetName, DatasetType, \

|

| 9 |

+

AffectnetConf, D300wConf, W300Conf, InputDataSize, LearningConfig

|

| 10 |

+

from numpy import save, load, asarray

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

class CustomHeatmapGenerator(keras.utils.Sequence):

|

| 14 |

+

|

| 15 |

+

def __init__(self, is_single, image_filenames, label_filenames, batch_size, n_outputs, accuracy=100):

|

| 16 |

+

self.image_filenames = image_filenames

|

| 17 |

+

self.label_filenames = label_filenames

|

| 18 |

+

self.batch_size = batch_size

|

| 19 |

+

self.n_outputs = n_outputs

|

| 20 |

+

self.is_single = is_single

|

| 21 |

+

self.accuracy = accuracy

|

| 22 |

+

|

| 23 |

+

def __len__(self):

|

| 24 |

+

_len = np.ceil(len(self.image_filenames) // float(self.batch_size))

|

| 25 |

+

return int(_len)

|

| 26 |

+

|

| 27 |

+

def __getitem__(self, idx):

|

| 28 |

+

img_path = D300wConf.train_images_dir

|

| 29 |

+

tr_path_85 = D300wConf.train_hm_dir_85

|

| 30 |

+

tr_path_90 = D300wConf.train_hm_dir_90

|

| 31 |

+

tr_path_97 = D300wConf.train_hm_dir_97

|

| 32 |

+

tr_path = D300wConf.train_hm_dir

|

| 33 |

+

|

| 34 |

+

batch_x = self.image_filenames[idx * self.batch_size:(idx + 1) * self.batch_size]

|

| 35 |

+

batch_y = self.label_filenames[idx * self.batch_size:(idx + 1) * self.batch_size]

|

| 36 |

+

|

| 37 |

+

img_batch = np.array([imread(img_path + file_name) for file_name in batch_x])

|

| 38 |

+

|

| 39 |

+

if self.is_single:

|

| 40 |

+

if self.accuracy == 85:

|

| 41 |

+

lbl_batch = np.array([load(tr_path_85 + file_name) for file_name in batch_y])

|

| 42 |

+

elif self.accuracy == 90:

|

| 43 |

+

lbl_batch = np.array([load(tr_path_90 + file_name) for file_name in batch_y])

|

| 44 |

+

elif self.accuracy == 97:

|

| 45 |

+

lbl_batch = np.array([load(tr_path_97 + file_name) for file_name in batch_y])

|

| 46 |

+

else:

|

| 47 |

+

lbl_batch = np.array([load(tr_path + file_name) for file_name in batch_y])

|

| 48 |

+

|

| 49 |

+

lbl_out_array = lbl_batch

|

| 50 |

+

else:

|

| 51 |

+

lbl_batch_85 = np.array([load(tr_path_85 + file_name) for file_name in batch_y])

|

| 52 |

+

lbl_batch_90 = np.array([load(tr_path_90 + file_name) for file_name in batch_y])

|

| 53 |

+

lbl_batch_97 = np.array([load(tr_path_97 + file_name) for file_name in batch_y])

|

| 54 |

+

lbl_out_array = [lbl_batch_85, lbl_batch_90, lbl_batch_97]

|

| 55 |

+

|

| 56 |

+

return img_batch, lbl_out_array

|

README.md

CHANGED

|

@@ -1,3 +1,150 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

|

| 2 |

+

[](https://paperswithcode.com/sota/face-alignment-on-cofw?p=facial-landmark-points-detection-using)

|

| 3 |

+

|

| 4 |

+

#

|

| 5 |

+

Facial Landmark Points Detection Using Knowledge Distillation-Based Neural Networks

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

#### Link to the paper:

|

| 10 |

+

Google Scholar:

|

| 11 |

+

https://scholar.google.com/citations?view_op=view_citation&hl=en&user=96lS6HIAAAAJ&citation_for_view=96lS6HIAAAAJ:zYLM7Y9cAGgC

|

| 12 |

+

|

| 13 |

+

Elsevier:

|

| 14 |

+

https://www.sciencedirect.com/science/article/pii/S1077314221001582

|

| 15 |

+

|

| 16 |

+

Arxiv:

|

| 17 |

+

https://arxiv.org/abs/2111.07047

|

| 18 |

+

|

| 19 |

+

#### Link to the paperswithcode.com:

|

| 20 |

+

https://paperswithcode.com/paper/facial-landmark-points-detection-using

|

| 21 |

+

|

| 22 |

+

```diff

|

| 23 |

+

@@plaese STAR the repo if you like it.@@

|

| 24 |

+

```

|

| 25 |

+

|

| 26 |

+

```

|

| 27 |

+

Please cite this work as:

|

| 28 |

+

|

| 29 |

+

@article{fard2022facial,

|

| 30 |

+

title={Facial landmark points detection using knowledge distillation-based neural networks},

|

| 31 |

+

author={Fard, Ali Pourramezan and Mahoor, Mohammad H},

|

| 32 |

+

journal={Computer Vision and Image Understanding},

|

| 33 |

+

volume={215},

|

| 34 |

+

pages={103316},

|

| 35 |

+

year={2022},

|

| 36 |

+

publisher={Elsevier}

|

| 37 |

+

}

|

| 38 |

+

|

| 39 |

+

```

|

| 40 |

+

|

| 41 |

+

## Introduction

|

| 42 |

+

Facial landmark detection is a vital step for numerous facial image analysis applications. Although some deep learning-based methods have achieved good performances in this task, they are often not suitable for running on mobile devices. Such methods rely on networks with many parameters, which makes the training and inference time-consuming. Training lightweight neural networks such as MobileNets are often challenging, and the models might have low accuracy. Inspired by knowledge distillation (KD), this paper presents a novel loss function to train a lightweight Student network (e.g., MobileNetV2) for facial landmark detection. We use two Teacher networks, a Tolerant-Teacher and a Tough-Teacher in conjunction with the Student network. The Tolerant-Teacher is trained using Soft-landmarks created by active shape models, while the Tough-Teacher is trained using the ground truth (aka Hard-landmarks) landmark points. To utilize the facial landmark points predicted by the Teacher networks, we define an Assistive Loss (ALoss) for each Teacher network. Moreover, we define a loss function called KD-Loss that utilizes the facial landmark points predicted by the two pre-trained Teacher networks (EfficientNet-b3) to guide the lightweight Student network towards predicting the Hard-landmarks. Our experimental results on three challenging facial datasets show that the proposed architecture will result in a better-trained Student network that can extract facial landmark points with high accuracy.

|

| 43 |

+

|

| 44 |

+

|

| 45 |

+

##Architecture

|

| 46 |

+

|

| 47 |

+

We train the Tough-Teacher, and the Tolerant-Teacher networks independently using the Hard-landmarks and the Soft-landmarks respectively utilizing the L2 loss:

|

| 48 |

+

|

| 49 |

+

|

| 50 |

+

|

| 51 |

+

|

| 52 |

+

Proposed KD-based architecture for training the Student network. KDLoss uses the knowledge of the previously trained Teacher networks by utilizing the assistive loss functions ALossT ou and ALossT ol, to improve the performance the face alignment task:

|

| 53 |

+

|

| 54 |

+

|

| 55 |

+

|

| 56 |

+

|

| 57 |

+

## Evaluation

|

| 58 |

+

|

| 59 |

+

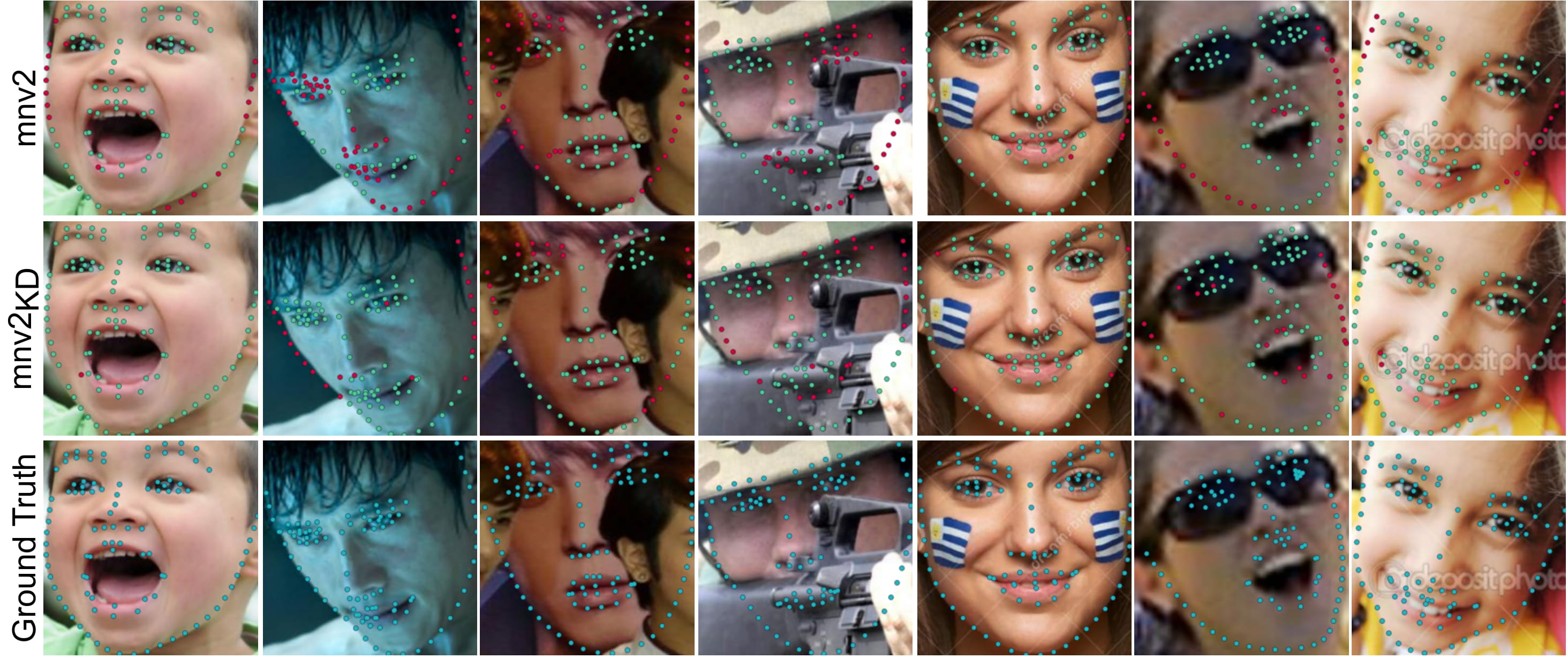

Following are some samples in order to show the visual performance of KD-Loss on 300W, COFW and WFLW datasets:

|

| 60 |

+

|

| 61 |

+

300W:

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

COFW:

|

| 65 |

+

|

| 66 |

+

|

| 67 |

+

WFLW:

|

| 68 |

+

|

| 69 |

+

|

| 70 |

+

----------------------------------------------------------------------------------------------------------------------------------

|

| 71 |

+

## Installing the requirements

|

| 72 |

+

In order to run the code you need to install python >= 3.5.

|

| 73 |

+

The requirements and the libraries needed to run the code can be installed using the following command:

|

| 74 |

+

|

| 75 |

+

```

|

| 76 |

+

pip install -r requirements.txt

|

| 77 |

+

```

|

| 78 |

+

|

| 79 |

+

|

| 80 |

+

## Using the pre-trained models

|

| 81 |

+

You can test and use the preetrained models using the following codes which are available in the test.py:

|

| 82 |

+

The pretrained student model are also located in "models/students".

|

| 83 |

+

|

| 84 |

+

```

|

| 85 |

+

cnn = CNNModel()

|

| 86 |

+

model = cnn.get_model(arch=arch, input_tensor=None, output_len=self.output_len)

|

| 87 |

+

|

| 88 |

+

model.load_weights(weight_fname)

|

| 89 |

+

|

| 90 |

+

img = None # load a cropped image

|

| 91 |

+

|

| 92 |

+

image_utility = ImageUtility()

|

| 93 |

+

pose_predicted = []

|

| 94 |

+

image = np.expand_dims(img, axis=0)

|

| 95 |

+

|

| 96 |

+

pose_predicted = model.predict(image)[1][0]

|

| 97 |

+

```

|

| 98 |

+

|

| 99 |

+

|

| 100 |

+

## Training Network from scratch

|

| 101 |

+

|

| 102 |

+

|

| 103 |

+

### Preparing Data

|

| 104 |

+

Data needs to be normalized and saved in npy format.

|

| 105 |

+

|

| 106 |

+

### Training

|

| 107 |

+

|

| 108 |

+

### Training Teacher Networks:

|

| 109 |

+

|

| 110 |

+

The training implementation is located in teacher_trainer.py class. You can use the following code to start the training for the teacher networks:

|

| 111 |

+

|

| 112 |

+

```

|

| 113 |

+

'''train Teacher Networks'''

|

| 114 |

+

trainer = TeacherTrainer(dataset_name=DatasetName.w300)

|

| 115 |

+

trainer.train(arch='efficientNet',weight_path=None)

|

| 116 |

+

```

|

| 117 |

+

|

| 118 |

+

### Training Student Networks:

|

| 119 |

+

After Training the teacher networks, you can use the trained teachers to train the student network. The implemetation of training of the student network is provided in teacher_trainer.py . You can use the following code to start the training for the student networks:

|

| 120 |

+

|

| 121 |

+

```

|

| 122 |

+

st_trainer = StudentTrainer(dataset_name=DatasetName.w300, use_augmneted=True)

|

| 123 |

+

st_trainer.train(arch_student='mobileNetV2', weight_path_student=None,

|

| 124 |

+

loss_weight_student=2.0,

|

| 125 |

+

arch_tough_teacher='efficientNet', weight_path_tough_teacher='./models/teachers/ds_300w_ef_tou.h5',

|

| 126 |

+

loss_weight_tough_teacher=1,

|

| 127 |

+

arch_tol_teacher='efficientNet', weight_path_tol_teacher='./models/teachers/ds_300w_ef_tol.h5',

|

| 128 |

+

loss_weight_tol_teacher=1)

|

| 129 |

+

```

|

| 130 |

+

|

| 131 |

+

|

| 132 |

+

|

| 133 |

+

```

|

| 134 |

+

Please cite this work as:

|

| 135 |

+

|

| 136 |

+

@article{fard2022facial,

|

| 137 |

+

title={Facial landmark points detection using knowledge distillation-based neural networks},

|

| 138 |

+

author={Fard, Ali Pourramezan and Mahoor, Mohammad H},

|

| 139 |

+

journal={Computer Vision and Image Understanding},

|

| 140 |

+

volume={215},

|

| 141 |

+

pages={103316},

|

| 142 |

+

year={2022},

|

| 143 |

+

publisher={Elsevier}

|

| 144 |

+

}

|

| 145 |

+

|

| 146 |

+

```

|

| 147 |

+

|

| 148 |

+

```diff

|

| 149 |

+

@@plaese STAR the repo if you like it.@@

|

| 150 |

+

```

|

cnn_model.py

ADDED

|

@@ -0,0 +1,94 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from configuration import DatasetName, DatasetType, \

|

| 2 |

+

D300wConf, InputDataSize, LearningConfig

|

| 3 |

+

|

| 4 |

+

import tensorflow as tf

|

| 5 |

+

# tf.compat.v1.disable_eager_execution()

|

| 6 |

+

|

| 7 |

+

from tensorflow import keras

|

| 8 |

+

from skimage.transform import resize

|

| 9 |

+

from keras.regularizers import l2

|

| 10 |

+

|

| 11 |

+

# tf.logging.set_verbosity(tf.logging.ERROR)

|

| 12 |

+

from tensorflow.keras.models import Model

|

| 13 |

+

from tensorflow.keras.applications import mobilenet_v2, mobilenet, resnet50, densenet

|

| 14 |

+

|

| 15 |

+

from tensorflow.keras.layers import Dense, MaxPooling2D, Conv2D, Flatten, \

|

| 16 |

+

BatchNormalization, Activation, GlobalAveragePooling2D, DepthwiseConv2D, Dropout, ReLU, Concatenate, Input, Conv2DTranspose

|

| 17 |

+

|

| 18 |

+

from keras.callbacks import ModelCheckpoint

|

| 19 |

+

from keras import backend as K

|

| 20 |

+

|

| 21 |

+

from keras.optimizers import Adam

|

| 22 |

+

import numpy as np

|

| 23 |

+

import matplotlib.pyplot as plt

|

| 24 |

+

import math

|

| 25 |

+

from keras.callbacks import CSVLogger

|

| 26 |

+

from datetime import datetime

|

| 27 |

+

|

| 28 |

+

import cv2

|

| 29 |

+

import os.path

|

| 30 |

+

from keras.utils.vis_utils import plot_model

|

| 31 |

+

from scipy.spatial import distance

|

| 32 |

+

import scipy.io as sio

|

| 33 |

+

|

| 34 |

+

import efficientnet.tfkeras as efn

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

class CNNModel:

|

| 38 |

+

def get_model(self, arch, input_tensor, output_len,

|

| 39 |

+

inp_shape=[InputDataSize.image_input_size, InputDataSize.image_input_size, 3],

|

| 40 |

+

weight_path=None):

|

| 41 |

+

if arch == 'efficientNet':

|

| 42 |

+

model = self.create_efficientNet(inp_shape=inp_shape, input_tensor=input_tensor, output_len=output_len)

|

| 43 |

+

elif arch == 'mobileNetV2':

|

| 44 |

+

model = self.create_MobileNet(inp_shape=inp_shape, inp_tensor=input_tensor)

|

| 45 |

+

return model

|

| 46 |

+

|

| 47 |

+

def create_MobileNet(self, inp_shape, inp_tensor):

|

| 48 |

+

|

| 49 |

+

mobilenet_model = mobilenet_v2.MobileNetV2(input_shape=inp_shape,

|

| 50 |

+

alpha=1.0,

|

| 51 |

+

include_top=True,

|

| 52 |

+

weights=None,

|

| 53 |

+

input_tensor=inp_tensor,

|

| 54 |

+

pooling=None)

|

| 55 |

+

|

| 56 |

+

inp = mobilenet_model.input

|

| 57 |

+

out_landmarks = mobilenet_model.get_layer('O_L').output

|

| 58 |

+

revised_model = Model(inp, [out_landmarks])

|

| 59 |

+

model_json = revised_model.to_json()

|

| 60 |

+

with open("mobileNet_v2_stu.json", "w") as json_file:

|

| 61 |

+

json_file.write(model_json)

|

| 62 |

+

return revised_model

|

| 63 |

+

|

| 64 |

+

def create_efficientNet(self, inp_shape, input_tensor, output_len, is_teacher=True):

|

| 65 |

+

if is_teacher: # for teacher we use a heavier network

|

| 66 |

+

eff_net = efn.EfficientNetB3(include_top=True,

|

| 67 |

+

weights=None,

|

| 68 |

+

input_tensor=None,

|

| 69 |

+

input_shape=[InputDataSize.image_input_size, InputDataSize.image_input_size,

|

| 70 |

+

3],

|

| 71 |

+

pooling=None,

|

| 72 |

+

classes=output_len)

|

| 73 |

+

else: # for student we use the small network

|

| 74 |

+

eff_net = efn.EfficientNetB0(include_top=True,

|

| 75 |

+

weights=None,

|

| 76 |

+

input_tensor=None,

|

| 77 |

+

input_shape=inp_shape,

|

| 78 |

+

pooling=None,

|

| 79 |

+

classes=output_len) # or weights='noisy-student'

|

| 80 |

+

|

| 81 |

+

eff_net.layers.pop()

|

| 82 |

+

inp = eff_net.input

|

| 83 |

+

|

| 84 |

+

x = eff_net.get_layer('top_activation').output

|

| 85 |

+

x = GlobalAveragePooling2D()(x)

|

| 86 |

+

x = keras.layers.Dropout(rate=0.5)(x)

|

| 87 |

+

output = Dense(output_len, activation='linear', name='out')(x)

|

| 88 |

+

|

| 89 |

+

eff_net = Model(inp, output)

|

| 90 |

+

|

| 91 |

+

eff_net.summary()

|

| 92 |

+

|

| 93 |

+

return eff_net

|

| 94 |

+

|

configuration.py

ADDED

|

@@ -0,0 +1,77 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

class DatasetName:

|

| 2 |

+

w300 = 'w300'

|

| 3 |

+

cofw = 'cofw'

|

| 4 |

+

wflw = 'wflw'

|

| 5 |

+

|

| 6 |

+

class DatasetType:

|

| 7 |

+

data_type_train = 0

|

| 8 |

+

data_type_validation = 1

|

| 9 |

+

data_type_test = 2

|

| 10 |

+

|

| 11 |

+

class LearningConfig:

|

| 12 |

+

batch_size = 70

|

| 13 |

+

epochs = 150

|

| 14 |

+

|

| 15 |

+

class InputDataSize:

|

| 16 |

+

image_input_size = 224

|

| 17 |

+

|

| 18 |

+

class WflwConf:

|

| 19 |

+

Wflw_prefix_path = './data/wflw/' # --> local

|

| 20 |

+

|

| 21 |

+

''' augmented version'''

|

| 22 |

+

augmented_train_pose = Wflw_prefix_path + 'training_set/augmented/pose/'

|

| 23 |

+

augmented_train_annotation = Wflw_prefix_path + 'training_set/augmented/annotations/'

|

| 24 |

+

augmented_train_atr = Wflw_prefix_path + 'training_set/augmented/atrs/'

|

| 25 |

+

augmented_train_image = Wflw_prefix_path + 'training_set/augmented/images/'

|

| 26 |

+

augmented_train_tf_path = Wflw_prefix_path + 'training_set/augmented/tf/'

|

| 27 |

+

''' original version'''

|

| 28 |

+

no_aug_train_annotation = Wflw_prefix_path + 'training_set/no_aug/annotations/'

|

| 29 |

+

no_aug_train_atr = Wflw_prefix_path + 'training_set/no_aug/atrs/'

|

| 30 |

+

no_aug_train_pose = Wflw_prefix_path + 'training_set/no_aug/pose/'

|

| 31 |

+

no_aug_train_image = Wflw_prefix_path + 'training_set/no_aug/images/'

|

| 32 |

+

no_aug_train_tf_path = Wflw_prefix_path + 'training_set/no_aug/tf/'

|

| 33 |

+

|

| 34 |

+

orig_number_of_training = 7500

|

| 35 |

+

orig_number_of_test = 2500

|

| 36 |

+

|

| 37 |

+

augmentation_factor = 4 # create . image from 1

|

| 38 |

+

augmentation_factor_rotate = 15 # create . image from 1

|

| 39 |

+

num_of_landmarks = 98

|

| 40 |

+

|

| 41 |

+

class CofwConf:

|

| 42 |

+

Cofw_prefix_path = './data/cofw/' # --> local

|

| 43 |

+

|

| 44 |

+

augmented_train_pose = Cofw_prefix_path + 'training_set/augmented/pose/'

|

| 45 |

+

augmented_train_annotation = Cofw_prefix_path + 'training_set/augmented/annotations/'

|

| 46 |

+

augmented_train_image = Cofw_prefix_path + 'training_set/augmented/images/'

|

| 47 |

+

augmented_train_tf_path = Cofw_prefix_path + 'training_set/augmented/tf/'

|

| 48 |

+

|

| 49 |

+

orig_number_of_training = 1345

|

| 50 |

+

orig_number_of_test = 507

|

| 51 |

+

|

| 52 |

+

augmentation_factor = 10

|

| 53 |

+

num_of_landmarks = 29

|

| 54 |

+

|

| 55 |

+

|

| 56 |

+

class D300wConf:

|

| 57 |

+

w300w_prefix_path = './data/300W/' # --> local

|

| 58 |

+

|

| 59 |

+

orig_300W_train = w300w_prefix_path + 'orig_300W_train/'

|

| 60 |

+

augmented_train_pose = w300w_prefix_path + 'training_set/augmented/pose/'

|

| 61 |

+

augmented_train_annotation = w300w_prefix_path + 'training_set/augmented/annotations/'

|

| 62 |

+

augmented_train_image = w300w_prefix_path + 'training_set/augmented/images/'

|

| 63 |

+

augmented_train_tf_path = w300w_prefix_path + 'training_set/augmented/tf/'

|

| 64 |

+

|

| 65 |

+

no_aug_train_annotation = w300w_prefix_path + 'training_set/no_aug/annotations/'

|

| 66 |

+

no_aug_train_pose = w300w_prefix_path + 'training_set/no_aug/pose/'

|

| 67 |

+

no_aug_train_image = w300w_prefix_path + 'training_set/no_aug/images/'

|

| 68 |

+

no_aug_train_tf_path = w300w_prefix_path + 'training_set/no_aug/tf/'

|

| 69 |

+

|

| 70 |

+

orig_number_of_training = 3148

|

| 71 |

+

orig_number_of_test_full = 689

|

| 72 |

+

orig_number_of_test_common = 554

|

| 73 |

+

orig_number_of_test_challenging = 135

|

| 74 |

+

|

| 75 |

+

augmentation_factor = 4 # create . image from 1

|

| 76 |

+

num_of_landmarks = 68

|

| 77 |

+

|

custom_Losses.py

ADDED

|

@@ -0,0 +1,63 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import math

|

| 2 |

+

|

| 3 |

+

import numpy as np

|

| 4 |

+

import tensorflow as tf

|

| 5 |

+

|

| 6 |

+

# tf.compat.v1.disable_eager_execution()

|

| 7 |

+

# tf.compat.v1.enable_eager_execution()

|

| 8 |

+

|

| 9 |

+

from PIL import Image

|

| 10 |

+

from tensorflow.keras import backend as K

|

| 11 |

+

from scipy.spatial import distance

|

| 12 |

+

|

| 13 |

+

from cnn_model import CNNModel

|

| 14 |

+

from configuration import DatasetName, D300wConf, LearningConfig

|

| 15 |

+

from image_utility import ImageUtility

|

| 16 |

+

from pca_utility import PCAUtility

|

| 17 |

+

|

| 18 |

+

print(tf.__version__)

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

class Custom_losses:

|

| 22 |

+

|

| 23 |

+

def MSE(self, x_pr, x_gt):

|

| 24 |

+

return tf.losses.mean_squared_error(x_pr, x_gt)

|

| 25 |

+

|

| 26 |

+

|

| 27 |

+

def kd_loss(self, x_pr, x_gt, x_tough, x_tol, alpha_tough, alpha_mi_tough, alpha_tol, alpha_mi_tol,

|

| 28 |

+

main_loss_weight, tough_loss_weight, tol_loss_weight):

|

| 29 |

+

"""km"""

|

| 30 |

+

'''los KD'''

|

| 31 |

+

# we revise teachers for reflection:

|

| 32 |

+

x_tough = x_gt + tf.sign(x_pr - x_gt) * tf.abs(x_tough - x_gt)

|

| 33 |

+

b_tough = x_gt + tf.sign(x_pr - x_gt) * tf.abs(x_tough - x_gt) * 0.15

|

| 34 |

+

x_tol = x_gt + tf.sign(x_pr - x_gt) * tf.abs(x_tol - x_gt)

|

| 35 |

+

b_tol = x_gt + tf.sign(x_pr - x_gt) * tf.abs(x_tol - x_gt) * 0.15

|

| 36 |

+

# Region A: from T -> +inf

|

| 37 |

+

tou_pos_map = tf.where(tf.sign(x_pr - x_tough) * tf.sign(x_tough - x_gt) > 0, alpha_tough, 0.0)

|

| 38 |

+

tou_neg_map = tf.where(tf.sign(x_tough - x_pr) * tf.sign(x_pr - b_tough) >= 0, alpha_mi_tough, 0.0)

|

| 39 |

+

# tou_red_map = tf.where(tf.sign(tf.abs(b_tough) - tf.abs(x_pr))*tf.sign(tf.abs(x_pr) - tf.abs(x_gt)) > 0, 0.1, 0.0)

|

| 40 |

+

tou_map = tou_pos_map + tou_neg_map # + tou_red_map

|

| 41 |

+

|

| 42 |

+

tol_pos_map = tf.where(tf.sign(x_pr - x_tol) * tf.sign(x_tol - x_gt) > 0, alpha_tol, 0.0)

|

| 43 |

+

tol_neg_map = tf.where(tf.sign(x_tol - x_pr) * tf.sign(x_pr - b_tol) >= 0, alpha_mi_tol, 0.0)

|

| 44 |

+

# tol_red_map = tf.where(tf.sign(tf.abs(b_tol) - tf.abs(x_pr))*tf.sign(tf.abs(x_pr) - tf.abs(x_gt)) > 0, 0.1, 0.0)

|

| 45 |

+

tol_map = tol_pos_map + tol_neg_map # + tou_red_map

|

| 46 |

+

|

| 47 |

+

'''calculate dif map for linear and non-linear part'''

|

| 48 |

+

low_diff_main_map = tf.where(tf.abs(x_gt - x_pr) <= tf.abs(x_gt - x_tol), 1.0, 0.0)

|

| 49 |

+

high_diff_main_map = tf.where(tf.abs(x_gt - x_pr) > tf.abs(x_gt - x_tol), 1.0, 0.0)

|

| 50 |

+

|

| 51 |

+

'''calculate loss'''

|

| 52 |

+

loss_main_high_dif = tf.reduce_mean(

|

| 53 |

+

high_diff_main_map * (tf.square(x_gt - x_pr) + (3 * tf.abs(x_gt - x_tol)) - tf.square(x_gt - x_tol)))

|

| 54 |

+

loss_main_low_dif = tf.reduce_mean(low_diff_main_map * (3 * tf.abs(x_gt - x_pr)))

|

| 55 |

+

loss_main = main_loss_weight * (loss_main_high_dif + loss_main_low_dif)

|

| 56 |

+

|

| 57 |

+

loss_tough_assist = tough_loss_weight * tf.reduce_mean(tou_map * tf.abs(x_tough - x_pr))

|

| 58 |

+

loss_tol_assist = tol_loss_weight * tf.reduce_mean(tol_map * tf.abs(x_tol - x_pr))

|

| 59 |

+

|

| 60 |

+

'''dif loss:'''

|

| 61 |

+

loss_total = loss_main + loss_tough_assist + loss_tol_assist

|

| 62 |

+

|

| 63 |

+

return loss_total, loss_main, loss_tough_assist, loss_tol_assist

|

image_utility.py

ADDED

|

@@ -0,0 +1,585 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import random

|

| 2 |

+

import numpy as np

|

| 3 |

+

|

| 4 |

+

import matplotlib

|

| 5 |

+

matplotlib.use('agg')

|

| 6 |

+

import matplotlib.pyplot as plt

|

| 7 |

+

|

| 8 |

+

import math

|

| 9 |

+

from skimage.transform import warp, AffineTransform

|

| 10 |

+

import cv2

|

| 11 |

+

from scipy import misc

|

| 12 |

+

from skimage.transform import rotate

|

| 13 |

+

from PIL import Image

|

| 14 |

+

from PIL import ImageOps

|

| 15 |

+

from skimage.transform import resize

|

| 16 |

+

from skimage import transform

|

| 17 |

+

from skimage.transform import SimilarityTransform, AffineTransform

|

| 18 |

+

import random

|

| 19 |

+

|

| 20 |

+

class ImageUtility:

|

| 21 |

+

|

| 22 |

+

def random_rotate(self, _image, _label, file_name, fn):

|

| 23 |

+

|

| 24 |

+

xy_points, x_points, y_points = self.create_landmarks(landmarks=_label,

|

| 25 |

+

scale_factor_x=1, scale_factor_y=1)

|

| 26 |

+

_image, _label = self.cropImg_2time(_image, x_points, y_points)

|

| 27 |

+

|

| 28 |

+

scale = (np.random.uniform(0.7, 1.3), np.random.uniform(0.7, 1.3))

|

| 29 |

+

# scale = (1, 1)

|

| 30 |

+

|

| 31 |

+

rot = np.random.uniform(-1 * 0.55, 0.55)

|

| 32 |

+

translation = (0, 0)

|

| 33 |

+

shear = 0

|

| 34 |

+

|

| 35 |

+

tform = AffineTransform(

|

| 36 |

+

scale=scale, # ,

|

| 37 |

+

rotation=rot,

|

| 38 |

+

translation=translation,

|

| 39 |

+

shear=np.deg2rad(shear)

|

| 40 |

+

)

|

| 41 |

+

|

| 42 |

+

output_img = transform.warp(_image, tform.inverse, mode='symmetric')

|

| 43 |

+

|

| 44 |

+

sx, sy = scale

|

| 45 |

+

t_matrix = np.array([

|

| 46 |

+

[sx * math.cos(rot), -sy * math.sin(rot + shear), 0],

|

| 47 |

+

[sx * math.sin(rot), sy * math.cos(rot + shear), 0],

|

| 48 |

+

[0, 0, 1]

|

| 49 |

+

])

|

| 50 |

+

landmark_arr_xy, landmark_arr_x, landmark_arr_y = self.create_landmarks(_label, 1, 1)

|

| 51 |

+

label = np.array(landmark_arr_x + landmark_arr_y).reshape([2, 68])

|

| 52 |

+

marging = np.ones([1, 68])

|

| 53 |

+

label = np.concatenate((label, marging), axis=0)

|

| 54 |

+

|

| 55 |

+

label_t = np.dot(t_matrix, label)

|

| 56 |

+

lbl_flat = np.delete(label_t, 2, axis=0).reshape([136])

|

| 57 |

+

|

| 58 |

+

t_label = self.__reorder(lbl_flat)

|

| 59 |

+

|

| 60 |

+

'''crop data: we add a small margin to the images'''

|

| 61 |

+

xy_points, x_points, y_points = self.create_landmarks(landmarks=t_label,

|

| 62 |

+

scale_factor_x=1, scale_factor_y=1)

|

| 63 |

+

img_arr, points_arr = self.cropImg(output_img, x_points, y_points, no_padding=False)

|

| 64 |

+

# img_arr = output_img

|

| 65 |

+

# points_arr = t_label

|

| 66 |

+

'''resize image to 224*224'''

|

| 67 |

+

resized_img = resize(img_arr,

|

| 68 |

+

(224, 224, 3),

|

| 69 |

+

anti_aliasing=True)

|

| 70 |

+

dims = img_arr.shape

|

| 71 |

+

height = dims[0]

|

| 72 |

+

width = dims[1]

|

| 73 |

+

scale_factor_y = 224 / height

|

| 74 |

+

scale_factor_x = 224 / width

|

| 75 |

+

|

| 76 |

+

'''rescale and retrieve landmarks'''

|

| 77 |

+

landmark_arr_xy, landmark_arr_x, landmark_arr_y = \

|

| 78 |

+

self.create_landmarks(landmarks=points_arr,

|

| 79 |

+

scale_factor_x=scale_factor_x,

|

| 80 |

+

scale_factor_y=scale_factor_y)

|

| 81 |

+

|

| 82 |

+

if not(min(landmark_arr_x) < 0.0 or min(landmark_arr_y) < 0.0 or max(landmark_arr_x) > 224 or max(landmark_arr_y) > 224):

|

| 83 |

+

|

| 84 |

+

# self.print_image_arr(fn, resized_img, landmark_arr_x, landmark_arr_y)

|

| 85 |

+

|

| 86 |

+

im = Image.fromarray((resized_img * 255).astype(np.uint8))

|

| 87 |

+

im.save(str(file_name) + '.jpg')

|

| 88 |

+

|

| 89 |

+

pnt_file = open(str(file_name) + ".pts", "w")

|

| 90 |

+

pre_txt = ["version: 1 \n", "n_points: 68 \n", "{ \n"]

|

| 91 |

+

pnt_file.writelines(pre_txt)

|

| 92 |

+

points_txt = ""

|

| 93 |

+

for i in range(0, len(landmark_arr_xy), 2):

|

| 94 |

+

points_txt += str(landmark_arr_xy[i]) + " " + str(landmark_arr_xy[i + 1]) + "\n"

|

| 95 |

+

|

| 96 |

+

pnt_file.writelines(points_txt)

|

| 97 |

+

pnt_file.write("} \n")

|

| 98 |

+

pnt_file.close()

|

| 99 |

+

|

| 100 |

+

return t_label, output_img

|

| 101 |

+

|

| 102 |

+

|

| 103 |

+

|

| 104 |

+

def random_rotate_m(self, _image, _label_img, file_name):

|

| 105 |

+

|

| 106 |

+

rot = random.uniform(-80.9, 80.9)

|

| 107 |

+

|

| 108 |

+

output_img = rotate(_image, rot, resize=True)

|

| 109 |

+

output_img_lbl = rotate(_label_img, rot, resize=True)

|

| 110 |

+

|

| 111 |

+

im = Image.fromarray((output_img * 255).astype(np.uint8))

|

| 112 |

+

im_lbl = Image.fromarray((output_img_lbl * 255).astype(np.uint8))

|

| 113 |

+

|

| 114 |

+

im_m = ImageOps.mirror(im)

|

| 115 |

+

im_lbl_m = ImageOps.mirror(im_lbl)

|

| 116 |

+

|

| 117 |

+

im.save(str(file_name)+'.jpg')

|

| 118 |

+

# im_lbl.save(str(file_name)+'_lbl.jpg')

|

| 119 |

+

|

| 120 |

+

im_m.save(str(file_name) + '_m.jpg')

|

| 121 |

+

# im_lbl_m.save(str(file_name) + '_m_lbl.jpg')

|

| 122 |

+

|

| 123 |

+

im_lbl_ar = np.array(im_lbl)

|

| 124 |

+

im_lbl_m_ar = np.array(im_lbl_m)

|

| 125 |

+

|

| 126 |

+

self.__save_label(im_lbl_ar, file_name, np.array(im))

|

| 127 |

+

self.__save_label(im_lbl_m_ar, file_name+"_m", np.array(im_m))

|

| 128 |

+

|

| 129 |

+

|

| 130 |

+

def __save_label(self, im_lbl_ar, file_name, img_arr):

|

| 131 |

+

|

| 132 |

+

im_lbl_point = []

|

| 133 |

+

for i in range(im_lbl_ar.shape[0]):

|

| 134 |

+

for j in range(im_lbl_ar.shape[1]):

|

| 135 |

+

if im_lbl_ar[i, j] != 0:

|

| 136 |

+

im_lbl_point.append(j)

|

| 137 |

+

im_lbl_point.append(i)

|

| 138 |

+

|

| 139 |

+

pnt_file = open(str(file_name)+".pts", "w")

|

| 140 |

+

|

| 141 |

+

pre_txt = ["version: 1 \n", "n_points: 68 \n", "{ \n"]

|

| 142 |

+

pnt_file.writelines(pre_txt)

|

| 143 |

+

points_txt = ""

|

| 144 |

+

for i in range(0, len(im_lbl_point), 2):

|

| 145 |

+

points_txt += str(im_lbl_point[i]) + " " + str(im_lbl_point[i+1]) + "\n"

|

| 146 |

+

|

| 147 |

+

pnt_file.writelines(points_txt)

|

| 148 |

+

pnt_file.write("} \n")

|

| 149 |

+

pnt_file.close()

|

| 150 |

+

|

| 151 |

+

'''crop data: we add a small margin to the images'''

|

| 152 |

+

xy_points, x_points, y_points = self.create_landmarks(landmarks=im_lbl_point,

|

| 153 |

+

scale_factor_x=1, scale_factor_y=1)

|

| 154 |

+

img_arr, points_arr = self.cropImg(img_arr, x_points, y_points)

|

| 155 |

+

|

| 156 |

+

'''resize image to 224*224'''

|

| 157 |

+

resized_img = resize(img_arr,

|

| 158 |

+

(224, 224, 3),

|

| 159 |

+

anti_aliasing=True)

|

| 160 |

+

dims = img_arr.shape

|

| 161 |

+

height = dims[0]

|

| 162 |

+

width = dims[1]

|

| 163 |

+

scale_factor_y = 224 / height

|

| 164 |

+

scale_factor_x = 224 / width

|

| 165 |

+

|

| 166 |

+

'''rescale and retrieve landmarks'''

|

| 167 |

+

landmark_arr_xy, landmark_arr_x, landmark_arr_y = \

|

| 168 |

+

self.create_landmarks(landmarks=points_arr,

|

| 169 |

+

scale_factor_x=scale_factor_x,

|

| 170 |

+

scale_factor_y=scale_factor_y)

|

| 171 |

+

|

| 172 |

+

im = Image.fromarray((resized_img * 255).astype(np.uint8))

|

| 173 |

+

im.save(str(im_lbl_point[0])+'.jpg')

|

| 174 |

+

# self.print_image_arr(im_lbl_point[0], resized_img, landmark_arr_x, landmark_arr_y)

|

| 175 |

+

|

| 176 |

+

|

| 177 |

+

def augment(self, _image, _label):

|

| 178 |

+

|

| 179 |

+

# face = misc.face(gray=True)

|

| 180 |

+

#

|

| 181 |

+

# rotate_face = ndimage.rotate(_image, 45)

|

| 182 |

+

# self.print_image_arr(_label[0], rotate_face, [],[])

|

| 183 |

+

|

| 184 |

+

# hue_img = tf.image.random_hue(_image, max_delta=0.1) # max_delta must be in the interval [0, 0.5].

|

| 185 |

+

# sat_img = tf.image.random_saturation(hue_img, lower=0.0, upper=3.0)

|

| 186 |

+

#

|

| 187 |

+

# sat_img = K.eval(sat_img)

|

| 188 |

+

#

|

| 189 |

+

_image = self.__noisy(_image)

|

| 190 |

+

|

| 191 |

+

shear = 0

|

| 192 |

+

|

| 193 |

+

# rot = 0.0

|

| 194 |

+

# scale = (1, 1)

|

| 195 |

+

|

| 196 |

+

rot = np.random.uniform(-1 * 0.009, 0.009)

|

| 197 |

+

|

| 198 |

+

scale = (random.uniform(0.9, 1.00), random.uniform(0.9, 1.00))

|

| 199 |

+

|

| 200 |

+

tform = AffineTransform(scale=scale, rotation=rot, shear=shear,

|

| 201 |

+

translation=(0, 0))

|

| 202 |

+

|

| 203 |

+

output_img = warp(_image, tform.inverse, output_shape=(_image.shape[0], _image.shape[1]))

|

| 204 |

+

|

| 205 |

+

sx, sy = scale

|

| 206 |

+

t_matrix = np.array([

|

| 207 |

+

[sx * math.cos(rot), -sy * math.sin(rot + shear), 0],

|

| 208 |

+

[sx * math.sin(rot), sy * math.cos(rot + shear), 0],

|

| 209 |

+

[0, 0, 1]

|

| 210 |

+

])

|

| 211 |

+

landmark_arr_xy, landmark_arr_x, landmark_arr_y = self.create_landmarks(_label, 1, 1)

|

| 212 |

+

label = np.array(landmark_arr_x + landmark_arr_y).reshape([2, 68])

|

| 213 |

+

marging = np.ones([1, 68])

|

| 214 |

+

label = np.concatenate((label, marging), axis=0)

|

| 215 |

+

|

| 216 |

+

label_t = np.dot(t_matrix, label)

|

| 217 |

+

lbl_flat = np.delete(label_t, 2, axis=0).reshape([136])

|

| 218 |

+

|

| 219 |

+

t_label = self.__reorder(lbl_flat)

|

| 220 |

+

return t_label, output_img

|

| 221 |

+

|

| 222 |

+

def __noisy(self, image):

|

| 223 |

+

noise_typ = random.randint(0, 3)

|

| 224 |

+

if noise_typ == 0 :#"gauss":

|

| 225 |

+

row, col, ch = image.shape

|

| 226 |

+

mean = 0

|

| 227 |

+

var = 0.1

|

| 228 |

+

sigma = var ** 0.5

|

| 229 |

+

gauss = np.random.normal(mean, sigma, (row, col, ch))

|

| 230 |

+

gauss = gauss.reshape(row, col, ch)

|

| 231 |

+

noisy = image + gauss

|

| 232 |

+

return noisy

|

| 233 |

+

elif noise_typ == 1 :# "s&p":

|

| 234 |

+

row, col, ch = image.shape

|

| 235 |

+

s_vs_p = 0.5

|

| 236 |

+

amount = 0.004

|

| 237 |

+

out = np.copy(image)

|

| 238 |

+

# Salt mode

|

| 239 |

+

num_salt = np.ceil(amount * image.size * s_vs_p)

|

| 240 |

+

coords = [np.random.randint(0, i - 1, int(num_salt))

|

| 241 |

+

for i in image.shape]

|

| 242 |

+

out[coords] = 1

|

| 243 |

+

|

| 244 |

+

# Pepper mode

|

| 245 |

+

num_pepper = np.ceil(amount * image.size * (1. - s_vs_p))

|

| 246 |

+

coords = [np.random.randint(0, i - 1, int(num_pepper))

|

| 247 |

+

for i in image.shape]

|

| 248 |

+

out[coords] = 0

|

| 249 |

+

return out

|

| 250 |

+

|

| 251 |

+

elif noise_typ == 2: #"speckle":

|

| 252 |

+

row, col, ch = image.shape

|

| 253 |

+

gauss = np.random.randn(row, col, ch)

|

| 254 |

+

gauss = gauss.reshape(row, col, ch)

|

| 255 |

+

noisy = image + image * gauss

|

| 256 |

+

return noisy

|

| 257 |

+

else:

|

| 258 |

+

return image

|

| 259 |

+

|

| 260 |

+

def __reorder(self, input_arr):

|

| 261 |

+

out_arr = []

|

| 262 |

+

for i in range(68):

|

| 263 |

+

out_arr.append(input_arr[i])

|

| 264 |

+

k = 68 + i

|

| 265 |

+

out_arr.append(input_arr[k])

|

| 266 |

+

return np.array(out_arr)

|

| 267 |

+

|

| 268 |

+

def print_image_arr_heat(self, k, image):

|

| 269 |

+

plt.figure()

|

| 270 |

+

plt.imshow(image)

|

| 271 |

+

implot = plt.imshow(image)

|

| 272 |

+

plt.axis('off')

|

| 273 |

+

plt.savefig('heat' + str(k) + '.png', bbox_inches='tight')

|

| 274 |

+

plt.clf()

|

| 275 |

+

|

| 276 |

+

def print_image_arr(self, k, image, landmarks_x, landmarks_y):

|

| 277 |

+

plt.figure()

|

| 278 |

+

plt.imshow(image)

|

| 279 |

+

implot = plt.imshow(image)

|

| 280 |

+

|

| 281 |

+

plt.scatter(x=landmarks_x[:], y=landmarks_y[:], c='black', s=20)

|

| 282 |

+

plt.scatter(x=landmarks_x[:], y=landmarks_y[:], c='white', s=15)

|

| 283 |

+

plt.axis('off')

|

| 284 |

+

plt.savefig('sss' + str(k) + '.png', bbox_inches='tight')

|

| 285 |

+

# plt.show()

|

| 286 |

+

plt.clf()

|

| 287 |

+

|

| 288 |

+