lizhiyuan

commited on

Commit

•

a951ae0

1

Parent(s):

c4c3fca

update model

Browse files- .gitattributes +2 -0

- README.md +222 -3

- README_EN.md +217 -0

- assets/github-mark.png +0 -0

- assets/megrez_logo.png +0 -0

- assets/multitask.jpg +0 -0

- assets/opencompass.jpg +0 -0

- assets/wechat-group.jpg +0 -0

- assets/wechat-official.jpg +0 -0

- assets/wechat.jpg +0 -0

- audio.py +228 -0

- config.json +125 -0

- configuration_megrezo.py +87 -0

- generation_config.json +7 -0

- image_processing_megrezo.py +386 -0

- model-00001-of-00002.safetensors +3 -0

- model-00002-of-00002.safetensors +3 -0

- model.safetensors.index.json +0 -0

- modeling_megrezo.py +328 -0

- modeling_navit_siglip.py +937 -0

- preprocessor_config.json +46 -0

- processing_megrezo.py +587 -0

- processor_config.json +7 -0

- resampler.py +783 -0

- special_tokens_map.json +10 -0

- tokenizer.json +0 -0

- tokenizer_config.json +257 -0

- tokenizer_wrapper.py +63 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,5 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

model-00001-of-00002.safetensors filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

model-00002-of-00002.safetensors filter=lfs diff=lfs merge=lfs -text

|

README.md

CHANGED

|

@@ -1,3 +1,222 @@

|

|

| 1 |

-

---

|

| 2 |

-

license: apache-2.0

|

| 3 |

-

---

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

license: apache-2.0

|

| 3 |

+

---

|

| 4 |

+

# Megrez-3B-Omni: 首个端侧全模态理解开源模型

|

| 5 |

+

<p align="center">

|

| 6 |

+

<img src="assets/megrez_logo.png" width="400"/>

|

| 7 |

+

<p>

|

| 8 |

+

<p align="center">

|

| 9 |

+

🔗 <a href="https://github.com/infinigence/Infini-Megrez-Omni">GitHub</a>   |   🏠 <a href="https://huggingface.co/spaces/Infinigence/Megrez-3B-Omni">Demo</a>   |   📖 <a href="assets/png/wechat-official.jpg">WeChat Official</a>   |   💬 <a href="assets/wechat-group.jpg">WeChat Groups</a>

|

| 10 |

+

</p>

|

| 11 |

+

<h4 align="center">

|

| 12 |

+

<p>

|

| 13 |

+

<b>中文</b> | <a href="https://huggingface.co/Infinigence/Megrez-3B-Omni/blob/main/README_EN.md">English</a>

|

| 14 |

+

<p>

|

| 15 |

+

</h4>

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

## 模型简介

|

| 19 |

+

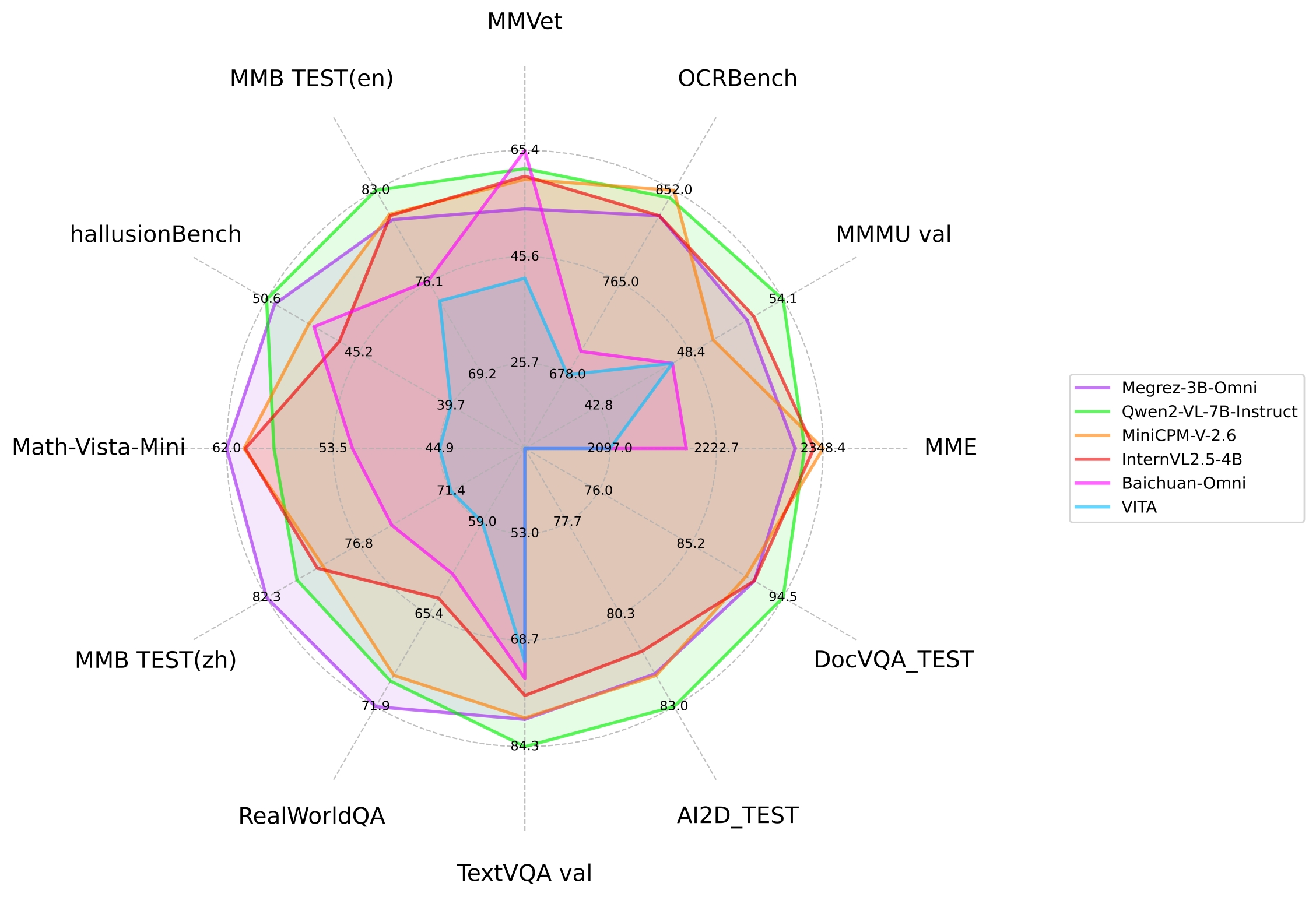

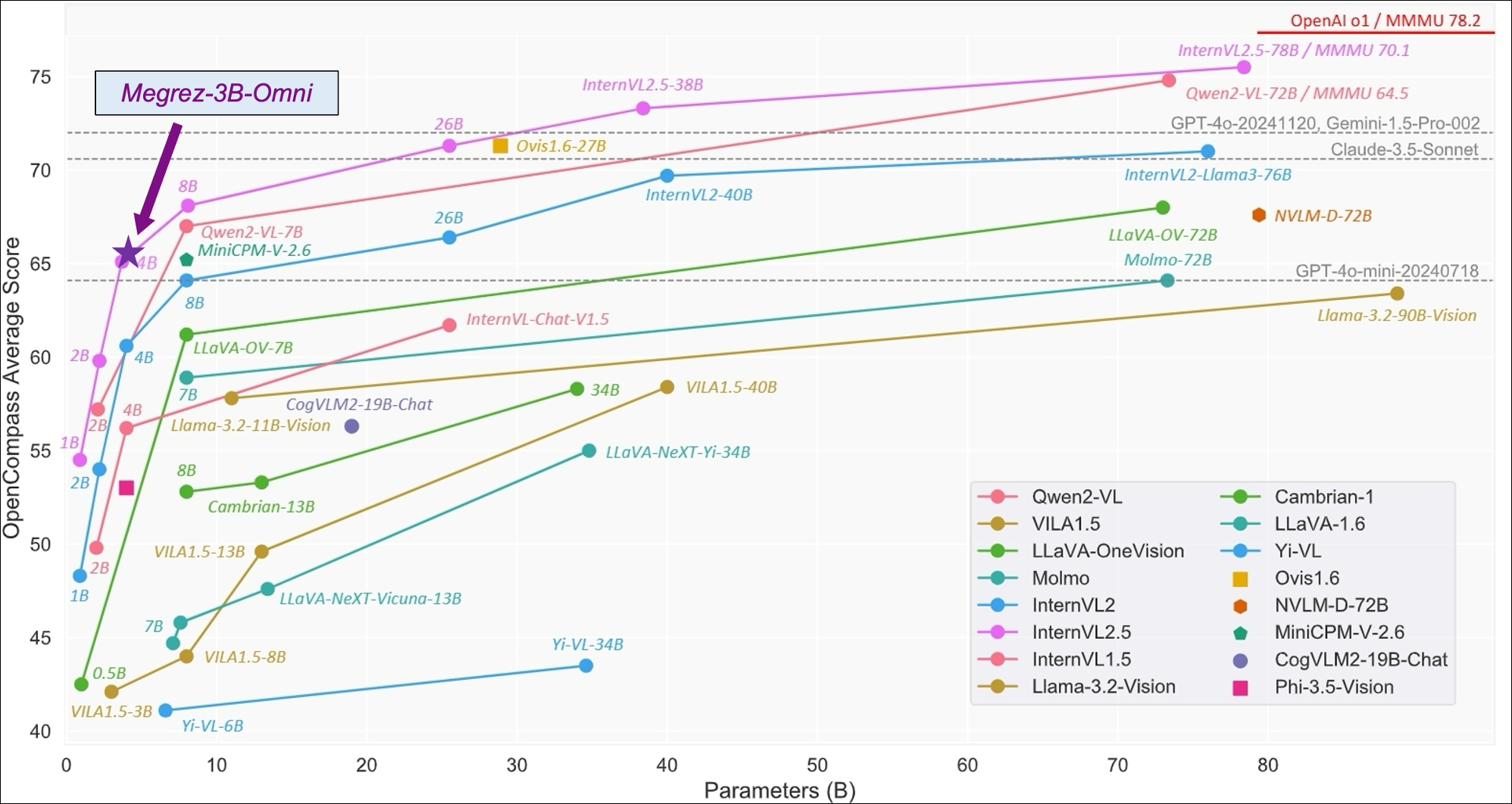

Megrez-3B-Omni是由无问芯穹([Infinigence AI](https://cloud.infini-ai.com/platform/ai))研发的**端侧全模态**理解模型,基于无问大语言模型Megrez-3B-Instruct扩展,同时具备图片、文本、音频三种模态数据的理解分析能力,在三个方面均取得最优精度

|

| 20 |

+

- 在图像理解方面,基于SigLip-400M构建图像Token,在OpenCompass榜单上(综合8个主流多模态评测基准)平均得分66.2,超越LLaVA-NeXT-Yi-34B等更大参数规模的模型。Megrez-3B-Omni也是在MME、MMMU、OCRBench等测试集上目前精度最高的图像理解模型之一,在场景理解、OCR等方面具有良好表现。

|

| 21 |

+

- 在语言理解方面,Megrez-3B-Omni并未牺牲模型的文本处理能力,综合能力较单模态版本(Megrez-3B-Instruct)精度变化小于2%,保持在C-EVAL、MMLU/MMLU Pro、AlignBench等多个测试集上的最优精度优势,依然取得超越上一代14B模型的能力表现

|

| 22 |

+

- 在语音理解方面,采用Qwen2-Audio/whisper-large-v3的Encoder作为语音输入,支持中英文语音输入及多轮对话,支持对输入图片的语音提问,根据语音指令直接响应文本,在多项基准任务上取得了领先的结果

|

| 23 |

+

|

| 24 |

+

|

| 25 |

+

## 基础信息

|

| 26 |

+

<table>

|

| 27 |

+

<thead>

|

| 28 |

+

<tr>

|

| 29 |

+

<th></th>

|

| 30 |

+

<th>Language Module</th>

|

| 31 |

+

<th>Vision Module</th>

|

| 32 |

+

<th>Audio Module</th>

|

| 33 |

+

</tr>

|

| 34 |

+

</thead>

|

| 35 |

+

<tbody>

|

| 36 |

+

<tr>

|

| 37 |

+

<td>Architecture</td>

|

| 38 |

+

<td>Llama-2 with GQA</td>

|

| 39 |

+

<td>SigLip-SO400M</td>

|

| 40 |

+

<td>Whisper-large-v3

|

| 41 |

+

(encoder-only)</td>

|

| 42 |

+

</tr>

|

| 43 |

+

<tr>

|

| 44 |

+

<td># Params (Backbone)</td>

|

| 45 |

+

<td>2.29B</td>

|

| 46 |

+

<td>0.42B</td>

|

| 47 |

+

<td>0.64B</td>

|

| 48 |

+

</tr>

|

| 49 |

+

<tr>

|

| 50 |

+

<td>Connector</td>

|

| 51 |

+

<td>-</td>

|

| 52 |

+

<td>Cross Attention</td>

|

| 53 |

+

<td>Linear</td>

|

| 54 |

+

</tr>

|

| 55 |

+

<tr>

|

| 56 |

+

<td># Params (Others)</td>

|

| 57 |

+

<td>Emb: 0.31B<br>Softmax: 0.31B</td>

|

| 58 |

+

<td>Connector: 0.036B</td>

|

| 59 |

+

<td>Connector: 0.003B</td>

|

| 60 |

+

</tr>

|

| 61 |

+

<tr>

|

| 62 |

+

<td># Params (Total)</td>

|

| 63 |

+

<td colspan="3">4B</td>

|

| 64 |

+

</tr>

|

| 65 |

+

<tr>

|

| 66 |

+

<td># Vocab Size</td>

|

| 67 |

+

<td>122880</td>

|

| 68 |

+

<td>64 tokens/slice</td>

|

| 69 |

+

<td>-</td>

|

| 70 |

+

</tr>

|

| 71 |

+

<tr>

|

| 72 |

+

<td>Context length</td>

|

| 73 |

+

<td colspan="3">4K tokens</td>

|

| 74 |

+

</tr>

|

| 75 |

+

<tr>

|

| 76 |

+

<td>Supported languages</td>

|

| 77 |

+

<td colspan="3">Chinese & English</td>

|

| 78 |

+

</tr>

|

| 79 |

+

</tbody>

|

| 80 |

+

</table>

|

| 81 |

+

|

| 82 |

+

### 图片理解能力

|

| 83 |

+

- 上图为Megrez-3B-Omni与其他开源模型在主流图片多模态任务上的性能比较

|

| 84 |

+

- 下图为Megrez-3B-Omni在OpenCompass测试集上表现,图片引用自: [InternVL 2.5 Blog Post](https://internvl.github.io/blog/2024-12-05-InternVL-2.5/)

|

| 85 |

+

<!-- <div style="display: flex; justify-content: space-between;">

|

| 86 |

+

<img src="assets/multitask.jpg" alt="Image 1" style="width: 45%;">

|

| 87 |

+

<img src="assets/opencompass.jpg" alt="Image 2" style="width: 45%;">

|

| 88 |

+

</div> -->

|

| 89 |

+

|

| 90 |

+

|

| 91 |

+

|

| 92 |

+

|

| 93 |

+

| model | basemodel | 发布时间 | OpenCompass | MME | MMMU val | OCRBench | MathVista | RealWorldQA | MMVet | hallusionBench | MMB TEST (en) | MMB TEST (zh) | TextVQA val | AI2D_TEST | MMstar | DocVQA_TEST |

|

| 94 |

+

|-----------------------|-----------------------|----------------|--------------------|----------|-----------|----------|-----------------|-------------|--------|----------------|--------------|--------------|-------------|-----------|-----------|-------------|

|

| 95 |

+

| **Megrez-3B-Omni** | **Megrez-3B** | **2024.12.16** | **66.2** | **2315** | **51.89** | **82.8** | **62** | **71.89** | **60** | **50.12** | **80.8** | **82.3** | **80.3** | **82.05** | **60.46** | **91.62** |

|

| 96 |

+

| Qwen2-VL-2B-Instruct | Qwen2-1.5B | 2024.08.28 | 57.2 | 1872 | 41.1 | 79.4 | 43 | 62.9 | 49.5 | 41.7 | 74.9 | 73.5 | 79.7 | 74.7 | 48 | 90.1 |

|

| 97 |

+

| InternVL2.5-2B | Internlm2.5-1.8B-chat | 2024.12.06 | 59.9 | 2138 | 43.6 | 80.4 | 51.3 | 60.1 | 60.8 | 42.6 | 74.7 | 71.9 | 74.3 | 74.9 | 53.7 | 88.7 |

|

| 98 |

+

| BlueLM-V-3B | - | 2024.11.29 | 66.1 | - | 45.1 | 82.9 | 60.8 | 66.7 | 61.8 | 48 | 83 | 80.5 | 78.4 | 85.3 | 62.3 | 87.8 |

|

| 99 |

+

| InternVL2.5-4B | Qwen2.5-3B-Instruct | 2024.12.06 | 65.1 | 2337 | 52.3 | 82.8 | 60.5 | 64.3 | 60.6 | 46.3 | 81.1 | 79.3 | 76.8 | 81.4 | 58.3 | 91.6 |

|

| 100 |

+

| Baichuan-Omni | Unknown-7B | 2024.10.11 | - | 2186 | 47.3 | 70.0 | 51.9 | 62.6 | 65.4 | 47.8 | 76.2 | 74.9 | 74.3 | - | - | - |

|

| 101 |

+

| MiniCPM-V-2.6 | Qwen2-7B | 2024.08.06 | 65.2 | 2348 | 49.8 | 85.2 | 60.6 | 69.7 | 60 | 48.1 | 81.2 | 79 | 80.1 | 82.1 | 57.26 | 90.8 |

|

| 102 |

+

| Qwen2-VL-7B-Instruct | Qwen2-7B | 2024.08.28 | 67 | 2326 | 54.1 | 84.5 | 58.2 | 70.1 | 62 | 50.6 | 83 | 80.5 | 84.3 | 83 | 60.7 | 94.5 |

|

| 103 |

+

| MiniCPM-Llama3-V-2.5 | Llama3-Instruct 8B | 2024.05.20 | 58.8 | 2024 | 45.8 | 72.5 | 54.3 | 63.5 | 52.8 | 42.4 | 77.2 | 74.2 | 76.6 | 78.4 | - | 84.8 |

|

| 104 |

+

| VITA | Mixtral 8x7B | 2024.08.12 | - | 2097 | 47.3 | 67.8 | 44.9 | 59 | 41.6 | 39.7 | 74.7 | 71.4 | 71.8 | - | - | - |

|

| 105 |

+

| GLM-4V-9B | GLM-4-9B | 2024.06.04 | 59.1 | 2018 | 46.9 | 77.6 | 51.1 | - | 58 | 46.6 | 81.1 | 79.4 | - | 81.1 | 58.7 | - |

|

| 106 |

+

| LLaVA-NeXT-Yi-34B | Yi-34B | 2024.01.18 | 55 | 2006 | 48.8 | 57.4 | 40.4 | 66 | 50.7 | 34.8 | 81.1 | 79 | 69.3 | 78.9 | 51.6 | - |

|

| 107 |

+

| Qwen2-VL-72B-Instruct | Qwen2-72B | 2024.08.28 | 74.8 | 2482 | 64.5 | 87.7 | 70.5 | 77.8 | 74 | 58.1 | 86.5 | 86.6 | 85.5 | 88.1 | 68.3 | 96.5 |

|

| 108 |

+

|

| 109 |

+

### 文本处理能力

|

| 110 |

+

| | | | | 对话&指令 | | | 中文&英文任务 | | | | 代码任务 | | 数学任务 | |

|

| 111 |

+

|:---------------------:|:--------:|:-----------:|:-------------------------------------:|:---------:|:---------------:|:------:|:-------------:|:----------:|:-----:|:--------:|:---------:|:-----:|:--------:|:-----:|

|

| 112 |

+

| models | 指令模型 | 发布时间 | # Non-Emb Params | MT-Bench | AlignBench (ZH) | IFEval | C-EVAL (ZH) | CMMLU (ZH) | MMLU | MMLU-Pro | HumanEval | MBPP | GSM8K | MATH |

|

| 113 |

+

| Megrez-3B-Omni | Y | 2024.12.16 | 2.3 | 8.4 | 6.94 | 66.5 | 84.0 | 75.3 | 73.3 | 45.2 | 72.6 | 60.6 | 63.8 | 27.3 |

|

| 114 |

+

| Megrez-3B-Instruct | Y | 2024.12.16 | 2.3 | 8.64 | 7.06 | 68.6 | 84.8 | 74.7 | 72.8 | 46.1 | 78.7 | 71.0 | 65.5 | 28.3 |

|

| 115 |

+

| Baichuan-Omni | Y | 2024.10.11 | 7.0 | - | - | - | 68.9 | 72.2 | 65.3 | - | - | - | - | - |

|

| 116 |

+

| VITA | Y | 2024.08.12 | 12.9 | - | - | - | 56.7 | 46.6 | 71.0 | - | - | - | 75.7 | - |

|

| 117 |

+

| Qwen1.5-7B | | 2024.02.04 | 6.5 | - | - | - | 74.1 | 73.1 | 61.0 | 29.9 | 36.0 | 51.6 | 62.5 | 20.3 |

|

| 118 |

+

| Qwen1.5-7B-Chat | Y | 2024.02.04 | 6.5 | 7.60 | 6.20 | - | 67.3 | - | 59.5 | 29.1 | 46.3 | 48.9 | 60.3 | 23.2 |

|

| 119 |

+

| Qwen1.5-14B | | 2024.02.04 | 12.6 | - | - | - | 78.7 | 77.6 | 67.6 | - | 37.8 | 44.0 | 70.1 | 29.2 |

|

| 120 |

+

| Qwen1.5-14B-Chat | Y | 2024.02.04 | 12.6 | 7.9 | - | - | - | - | - | - | - | - | - | - |

|

| 121 |

+

| Qwen2-7B | | 2024.06.07 | 6.5 | - | - | - | 83.2 | 83.9 | 70.3 | 40.0 | 51.2 | 65.9 | 79.9 | 44.2 |

|

| 122 |

+

| Qwen2-7b-Instruct | Y | 2024.06.07 | 6.5 | 8.41 | 7.21 | 51.4 | 80.9 | 77.2 | 70.5 | 44.1 | 79.9 | 67.2 | 85.7 | 52.9 |

|

| 123 |

+

| Qwen2.5-3B-Instruct | Y | 2024.9.19 | 2.8 | - | - | - | - | - | - | 43.7 | 74.4 | 72.7 | 86.7 | 65.9 |

|

| 124 |

+

| Qwen2.5-7B | | 2024.9.19 | 6.5 | - | - | - | - | - | 74.2 | 45.0 | 57.9 | 74.9 | 85.4 | 49.8 |

|

| 125 |

+

| Qwen2.5-7B-Instruct | Y | 2024.09.19 | 6.5 | 8.75 | - | 74.9 | - | - | - | 56.3 | 84.8 | 79.2 | 91.6 | 75.5 |

|

| 126 |

+

| Llama-3.1-8B | | 2024.07.23 | 7.0 | 8.3 | 5.7 | 71.5 | 55.2 | 55.8 | 66.7 | 37.1 | - | - | 84.5 | 51.9 |

|

| 127 |

+

| Llama-3.2-3B | | 2024.09.25 | 2.8 | - | - | 77.4 | - | - | 63.4 | - | - | - | 77.7 | 48.0 |

|

| 128 |

+

| Phi-3.5-mini-instruct | Y | 2024.08.23 | 3.6 | 8.6 | 5.7 | 49.4 | 46.1 | 46.9 | 69.0 | 47.4 | 62.8 | 69.6 | 86.2 | 48.5 |

|

| 129 |

+

| MiniCPM3-4B | Y | 2024.09.05 | 3.9 | 8.41 | 6.74 | 68.4 | 73.6 | 73.3 | 67.2 | - | 74.4 | 72.5 | 81.1 | 46.6 |

|

| 130 |

+

| Yi-1.5-6B-Chat | Y | 2024.05.11 | 5.5 | 7.50 | 6.20 | - | 74.2 | 74.7 | 61.0 | - | 64.0 | 70.9 | 78.9 | 40.5 |

|

| 131 |

+

| GLM-4-9B-chat | Y | 2024.06.04 | 8.2 | 8.35 | 7.01 | 64.5 | 75.6 | 71.5 | 72.4 | - | 71.8 | - | 79.6 | 50.6 |

|

| 132 |

+

| Baichuan2-13B-Base | | 2023.09.06 | 12.6 | - | 5.25 | - | 58.1 | 62.0 | 59.2 | - | 17.1 | 30.2 | 52.8 | 10.1 |

|

| 133 |

+

|

| 134 |

+

注:Qwen2-1.5B模型的指标在论文和Qwen2.5报告中点数不一致,当前采用原始论文中的精度

|

| 135 |

+

|

| 136 |

+

### 语音理解能力

|

| 137 |

+

| Model | Base model | Release Time | Fleurs test-zh | WenetSpeech test_net | WenetSpeech test_meeting |

|

| 138 |

+

|:----------------:|:------------------:|:-------------:|:--------------:|:--------------------:|:------------------------:|

|

| 139 |

+

| Megrez-3B-Omni | Megrez-3B-Instruct | 2024.12.16 | 10.8 | - | 16.4 |

|

| 140 |

+

| Whisper-large-v3 | - | 2023.11.06 | 12.4 | 17.5 | 30.8 |

|

| 141 |

+

| Qwen2-Audio-7B | Qwen2-7B | 2024.08.09 | 9 | 11 | 10.7 |

|

| 142 |

+

| Baichuan2-omni | Unknown-7B | 2024.10.11 | 7 | 6.9 | 8.4 |

|

| 143 |

+

| VITA | Mixtral 8x7B | 2024.08.12 | - | -/12.2(CER) | -/16.5(CER) |

|

| 144 |

+

|

| 145 |

+

### 速度

|

| 146 |

+

| | image_tokens | prefill (tokens/s) | decode (tokens/s) |

|

| 147 |

+

|----------------|:------------:|:------------------:|:-----------------:|

|

| 148 |

+

| Megrez-3B-Omni | 448 | 6312.66 | 1294.9 |

|

| 149 |

+

| Qwen2-VL-2B | 1378 | 7349.39 | 685.66 |

|

| 150 |

+

| MiniCPM-V-2_6 | 448 | 2167.09 | 452.51 |

|

| 151 |

+

|

| 152 |

+

实验设置:

|

| 153 |

+

- 测试环境:NVIDIA H100,vLLM下输入128个Text token和一张1480x720大小图片,输出128个token,num_seqs固定为8

|

| 154 |

+

- Qwen2-VL-2B虽然其具备更小尺寸的基座模型,但编码上述大小图片后的image_token相较Megrez-3B-Omni多很多,导致此实验下的decode速度小于Megrez-3B-Omni

|

| 155 |

+

|

| 156 |

+

## 快速上手

|

| 157 |

+

|

| 158 |

+

### 在线体验

|

| 159 |

+

[HF Chat Demo](https://huggingface.co/spaces/Infinigence/Megrez-3B-Omni)

|

| 160 |

+

|

| 161 |

+

### 本地部署

|

| 162 |

+

环境安装和vLLM推理代码等部署问题可以参考 [Infini-Megrez-Omni](https://github.com/infinigence/Infini-Megrez-Omni)

|

| 163 |

+

|

| 164 |

+

如下是一个使用transformers进行推理的例子,通过在content字段中分别传入text、image和audio,可以图文/图音等多种模态和模型进行交互。

|

| 165 |

+

```python

|

| 166 |

+

import torch

|

| 167 |

+

from transformers import AutoModelForCausalLM

|

| 168 |

+

|

| 169 |

+

path = "{{PATH_TO_PRETRAINED_MODEL}}" # Change this to the path of the model.

|

| 170 |

+

|

| 171 |

+

model = (

|

| 172 |

+

AutoModelForCausalLM.from_pretrained(

|

| 173 |

+

path,

|

| 174 |

+

trust_remote_code=True,

|

| 175 |

+

torch_dtype=torch.bfloat16,

|

| 176 |

+

attn_implementation="flash_attention_2",

|

| 177 |

+

)

|

| 178 |

+

.eval()

|

| 179 |

+

.cuda()

|

| 180 |

+

)

|

| 181 |

+

|

| 182 |

+

# Chat with text and image

|

| 183 |

+

messages = [

|

| 184 |

+

{

|

| 185 |

+

"role": "user",

|

| 186 |

+

"content": {

|

| 187 |

+

"text": "Please describe the content of the image.",

|

| 188 |

+

"image": "./data/sample_image.jpg",

|

| 189 |

+

},

|

| 190 |

+

},

|

| 191 |

+

]

|

| 192 |

+

|

| 193 |

+

# Chat with audio and image

|

| 194 |

+

messages = [

|

| 195 |

+

{

|

| 196 |

+

"role": "user",

|

| 197 |

+

"content": {

|

| 198 |

+

"image": "./data/sample_image.jpg",

|

| 199 |

+

"audio": "./data/sample_audio.m4a",

|

| 200 |

+

},

|

| 201 |

+

},

|

| 202 |

+

]

|

| 203 |

+

|

| 204 |

+

MAX_NEW_TOKENS = 100

|

| 205 |

+

response = model.chat(

|

| 206 |

+

messages,

|

| 207 |

+

sampling=False,

|

| 208 |

+

max_new_tokens=MAX_NEW_TOKENS,

|

| 209 |

+

temperature=0,

|

| 210 |

+

)

|

| 211 |

+

print(response)

|

| 212 |

+

```

|

| 213 |

+

|

| 214 |

+

## 注意事项

|

| 215 |

+

1. 请将图片尽量在首轮输入以保证推理效果,语音和文本无此限制,可以自由切换

|

| 216 |

+

2. 语音识别(ASR)场景下,只需要将content['text']修改为“将语音转化为文字。”

|

| 217 |

+

3. OCR场景下开启采样可能会引入语言模型幻觉导致的文字变化,可考虑关闭采样进行推理(sampling=False),但关闭采样可能引入模型复读

|

| 218 |

+

|

| 219 |

+

## 开源协议及使用声明

|

| 220 |

+

- 协议:本仓库中代码依照 [Apache-2.0](https://www.apache.org/licenses/LICENSE-2.0) 协议开源。

|

| 221 |

+

- 幻觉:大模型天然存在幻觉问题,用户使用过程中请勿完全相信模型生成的内容。

|

| 222 |

+

- 价值观及安全性:本模型已尽全力确保训练过程中使用的数据的合规性,但由于数据的大体量及复杂性,仍有可能存在一些无法预见的问题。如果出现使用本开源模型而导致的任何问题,包括但不限于数据安全问题、公共舆论风险,或模型被误导、滥用、传播或不当利用所带来的任何风险和问题,我们将不承担任何责任。

|

README_EN.md

ADDED

|

@@ -0,0 +1,217 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

license: apache-2.0

|

| 3 |

+

---

|

| 4 |

+

# Megrez-3B-Omni: The First Open-Source On-device LLM with Full Modality Understanding

|

| 5 |

+

<p align="center">

|

| 6 |

+

<img src="assets/megrez_logo.png" width="400"/>

|

| 7 |

+

<p>

|

| 8 |

+

<p align="center">

|

| 9 |

+

🔗 <a href="https://github.com/infinigence/Infini-Megrez-Omni">GitHub</a>   |   🏠 <a href="https://huggingface.co/spaces/Infinigence/Megrez-3B-Omni">Demo</a>   |   📖 <a href="assets/wechat-official.jpg">WeChat Official</a>   |   💬 <a href="assets/wechat-group.jpg">WeChat Groups</a>

|

| 10 |

+

</p>

|

| 11 |

+

<h4 align="center">

|

| 12 |

+

<p>

|

| 13 |

+

<a href="https://huggingface.co/Infinigence/Megrez-3B-Omni/blob/main/README.md">中文</a> | <b>English</b>

|

| 14 |

+

<p>

|

| 15 |

+

</h4>

|

| 16 |

+

|

| 17 |

+

## Introduction

|

| 18 |

+

**Megrez-3B-Omni** is an on-device multimodal understanding LLM model developed by **Infinigence AI** ([Infinigence AI](https://cloud.infini-ai.com/platform/ai)). It is an extension of the Megrez-3B-Instruct model and supports analysis of image, text, and audio modalities. The model achieves state-of-the-art accuracy in all three domains:

|

| 19 |

+

- Image Understanding: By utilizing SigLip-400M for constructing image tokens, Megrez-3B-Omni outperforms models with more parameters such as LLaVA-NeXT-Yi-34B. It is one of the best image understanding models among multiple mainstream benchmarks, including MME, MMMU, and OCRBench. It demonstrates excellent performance in tasks such as scene understanding and OCR.

|

| 20 |

+

- Language Understanding: Megrez-3B-Omni retains text understanding capabilities without significant trade-offs. Compared to its single-modal counterpart (Megrez-3B-Instruct), the accuracy variation is less than 2%, maintaining state-of-the-art performance on benchmarks like C-EVAL, MMLU/MMLU Pro, and AlignBench. It also outperforms previous-generation models with 14B parameters.

|

| 21 |

+

- Speech Understanding: Equipped with the encoder head of Qwen2-Audio/whisper-large-v3, the model supports both Chinese and English speech input, multi-turn conversations, and voice-based questions about input images. It can directly respond to voice commands with text and achieved leading results across multiple benchmarks.

|

| 22 |

+

|

| 23 |

+

## Model Info

|

| 24 |

+

<table>

|

| 25 |

+

<thead>

|

| 26 |

+

<tr>

|

| 27 |

+

<th></th>

|

| 28 |

+

<th>Language Module</th>

|

| 29 |

+

<th>Vision Module</th>

|

| 30 |

+

<th>Audio Module</th>

|

| 31 |

+

</tr>

|

| 32 |

+

</thead>

|

| 33 |

+

<tbody>

|

| 34 |

+

<tr>

|

| 35 |

+

<td>Architecture</td>

|

| 36 |

+

<td>Llama-2 with GQA</td>

|

| 37 |

+

<td>SigLip-SO400M</td>

|

| 38 |

+

<td>Whisper-large-v3

|

| 39 |

+

(encoder-only)</td>

|

| 40 |

+

</tr>

|

| 41 |

+

<tr>

|

| 42 |

+

<td># Params (Backbone)</td>

|

| 43 |

+

<td>2.29B</td>

|

| 44 |

+

<td>0.42B</td>

|

| 45 |

+

<td>0.64B</td>

|

| 46 |

+

</tr>

|

| 47 |

+

<tr>

|

| 48 |

+

<td>Connector</td>

|

| 49 |

+

<td>-</td>

|

| 50 |

+

<td>Cross Attention</td>

|

| 51 |

+

<td>Linear</td>

|

| 52 |

+

</tr>

|

| 53 |

+

<tr>

|

| 54 |

+

<td># Params (Others)</td>

|

| 55 |

+

<td>Emb: 0.31B<br>Softmax: 0.31B</td>

|

| 56 |

+

<td>Connector: 0.036B</td>

|

| 57 |

+

<td>Connector: 0.003B</td>

|

| 58 |

+

</tr>

|

| 59 |

+

<tr>

|

| 60 |

+

<td># Params (Total)</td>

|

| 61 |

+

<td colspan="3">4B</td>

|

| 62 |

+

</tr>

|

| 63 |

+

<tr>

|

| 64 |

+

<td># Vocab Size</td>

|

| 65 |

+

<td>122880</td>

|

| 66 |

+

<td>64 tokens/slice</td>

|

| 67 |

+

<td>-</td>

|

| 68 |

+

</tr>

|

| 69 |

+

<tr>

|

| 70 |

+

<td>Context length</td>

|

| 71 |

+

<td colspan="3">4K tokens</td>

|

| 72 |

+

</tr>

|

| 73 |

+

<tr>

|

| 74 |

+

<td>Supported languages</td>

|

| 75 |

+

<td colspan="3">Chinese & English</td>

|

| 76 |

+

</tr>

|

| 77 |

+

</tbody>

|

| 78 |

+

</table>

|

| 79 |

+

|

| 80 |

+

### Image Understanding

|

| 81 |

+

- The above image compares the performance of Megrez-3B-Omni with other open-source models on mainstream image multimodal tasks.

|

| 82 |

+

- The below image shows the performance of Megrez-3B-Omni on the OpenCompass test set. Image reference: [InternVL 2.5 Blog Post](https://internvl.github.io/blog/2024-12-05-InternVL-2.5/)

|

| 83 |

+

|

| 84 |

+

|

| 85 |

+

|

| 86 |

+

|

| 87 |

+

| model | basemodel | release time | OpenCompass | MME | MMMU val | OCRBench | MathVista | RealWorldQA | MMVet | hallusionBench | MMB TEST (en) | MMB TEST (zh) | TextVQA val | AI2D_TEST | MMstar | DocVQA_TEST |

|

| 88 |

+

|-----------------------|-----------------------|----------------|--------------------|----------|-----------|----------|-----------------|-------------|--------|----------------|--------------|--------------|-------------|-----------|-----------|-------------|

|

| 89 |

+

| **Megrez-3B-Omni** | **Megrez-3B** | **2024.12.16** | **66.2** | **2315** | **51.89** | **82.8** | **62** | **71.89** | **60** | **50.12** | **80.8** | **82.3** | **80.3** | **82.05** | **60.46** | **91.62** |

|

| 90 |

+

| Qwen2-VL-2B-Instruct | Qwen2-1.5B | 2024.08.28 | 57.2 | 1872 | 41.1 | 79.4 | 43 | 62.9 | 49.5 | 41.7 | 74.9 | 73.5 | 79.7 | 74.7 | 48 | 90.1 |

|

| 91 |

+

| InternVL2.5-2B | Internlm2.5-1.8B-chat | 2024.12.06 | 59.9 | 2138 | 43.6 | 80.4 | 51.3 | 60.1 | 60.8 | 42.6 | 74.7 | 71.9 | 74.3 | 74.9 | 53.7 | 88.7 |

|

| 92 |

+

| BlueLM-V-3B | - | 2024.11.29 | 66.1 | - | 45.1 | 82.9 | 60.8 | 66.7 | 61.8 | 48 | 83 | 80.5 | 78.4 | 85.3 | 62.3 | 87.8 |

|

| 93 |

+

| InternVL2.5-4B | Qwen2.5-3B-Instruct | 2024.12.06 | 65.1 | 2337 | 52.3 | 82.8 | 60.5 | 64.3 | 60.6 | 46.3 | 81.1 | 79.3 | 76.8 | 81.4 | 58.3 | 91.6 |

|

| 94 |

+

| Baichuan-Omni | Unknown-7B | 2024.10.11 | - | 2186 | 47.3 | 70.0 | 51.9 | 62.6 | 65.4 | 47.8 | 76.2 | 74.9 | 74.3 | - | - | - |

|

| 95 |

+

| MiniCPM-V-2.6 | Qwen2-7B | 2024.08.06 | 65.2 | 2348 | 49.8 | 85.2 | 60.6 | 69.7 | 60 | 48.1 | 81.2 | 79 | 80.1 | 82.1 | 57.26 | 90.8 |

|

| 96 |

+

| Qwen2-VL-7B-Instruct | Qwen2-7B | 2024.08.28 | 67 | 2326 | 54.1 | 84.5 | 58.2 | 70.1 | 62 | 50.6 | 83 | 80.5 | 84.3 | 83 | 60.7 | 94.5 |

|

| 97 |

+

| MiniCPM-Llama3-V-2.5 | Llama3-Instruct 8B | 2024.05.20 | 58.8 | 2024 | 45.8 | 72.5 | 54.3 | 63.5 | 52.8 | 42.4 | 77.2 | 74.2 | 76.6 | 78.4 | - | 84.8 |

|

| 98 |

+

| VITA | Mixtral 8x7B | 2024.08.12 | - | 2097 | 47.3 | 67.8 | 44.9 | 59 | 41.6 | 39.7 | 74.7 | 71.4 | 71.8 | - | - | - |

|

| 99 |

+

| GLM-4V-9B | GLM-4-9B | 2024.06.04 | 59.1 | 2018 | 46.9 | 77.6 | 51.1 | - | 58 | 46.6 | 81.1 | 79.4 | - | 81.1 | 58.7 | - |

|

| 100 |

+

| LLaVA-NeXT-Yi-34B | Yi-34B | 2024.01.18 | 55 | 2006 | 48.8 | 57.4 | 40.4 | 66 | 50.7 | 34.8 | 81.1 | 79 | 69.3 | 78.9 | 51.6 | - |

|

| 101 |

+

| Qwen2-VL-72B-Instruct | Qwen2-72B | 2024.08.28 | 74.8 | 2482 | 64.5 | 87.7 | 70.5 | 77.8 | 74 | 58.1 | 86.5 | 86.6 | 85.5 | 88.1 | 68.3 | 96.5 |

|

| 102 |

+

|

| 103 |

+

### Text Understanding

|

| 104 |

+

| | | | | Chat&Instruction | | | Zh&En Tasks | | | | Code | | Math | |

|

| 105 |

+

|:---------------------:|:--------:|:-----------:|:-------------------------------------:|:---------:|:---------------:|:------:|:-------------:|:----------:|:-----:|:--------:|:---------:|:-----:|:--------:|:-----:|

|

| 106 |

+

| models | Instruction | Release Time | Non-Emb Params | MT-Bench | AlignBench (ZH) | IFEval | C-EVAL (ZH) | CMMLU (ZH) | MMLU | MMLU-Pro | HumanEval | MBPP | GSM8K | MATH |

|

| 107 |

+

| Megrez-3B-Omni | Y | 2024.12.16 | 2.3 | 8.4 | 6.94 | 66.5 | 84.0 | 75.3 | 73.3 | 45.2 | 72.6 | 60.6 | 63.8 | 27.3 |

|

| 108 |

+

| Megrez-3B-Instruct | Y | 2024.12.16 | 2.3 | 8.64 | 7.06 | 68.6 | 84.8 | 74.7 | 72.8 | 46.1 | 78.7 | 71.0 | 65.5 | 28.3 |

|

| 109 |

+

| Baichuan-Omni | Y | 2024.10.11 | 7.0 | - | - | - | 68.9 | 72.2 | 65.3 | - | - | - | - | - |

|

| 110 |

+

| VITA | Y | 2024.08.12 | 12.9 | - | - | - | 56.7 | 46.6 | 71.0 | - | - | - | 75.7 | - |

|

| 111 |

+

| Qwen1.5-7B | | 2024.02.04 | 6.5 | - | - | - | 74.1 | 73.1 | 61.0 | 29.9 | 36.0 | 51.6 | 62.5 | 20.3 |

|

| 112 |

+

| Qwen1.5-7B-Chat | Y | 2024.02.04 | 6.5 | 7.60 | 6.20 | - | 67.3 | - | 59.5 | 29.1 | 46.3 | 48.9 | 60.3 | 23.2 |

|

| 113 |

+

| Qwen1.5-14B | | 2024.02.04 | 12.6 | - | - | - | 78.7 | 77.6 | 67.6 | - | 37.8 | 44.0 | 70.1 | 29.2 |

|

| 114 |

+

| Qwen1.5-14B-Chat | Y | 2024.02.04 | 12.6 | 7.9 | - | - | - | - | - | - | - | - | - | - |

|

| 115 |

+

| Qwen2-7B | | 2024.06.07 | 6.5 | - | - | - | 83.2 | 83.9 | 70.3 | 40.0 | 51.2 | 65.9 | 79.9 | 44.2 |

|

| 116 |

+

| Qwen2-7b-Instruct | Y | 2024.06.07 | 6.5 | 8.41 | 7.21 | 51.4 | 80.9 | 77.2 | 70.5 | 44.1 | 79.9 | 67.2 | 85.7 | 52.9 |

|

| 117 |

+

| Qwen2.5-3B-Instruct | Y | 2024.9.19 | 2.8 | - | - | - | - | - | - | 43.7 | 74.4 | 72.7 | 86.7 | 65.9 |

|

| 118 |

+

| Qwen2.5-7B | | 2024.9.19 | 6.5 | - | - | - | - | - | 74.2 | 45.0 | 57.9 | 74.9 | 85.4 | 49.8 |

|

| 119 |

+

| Qwen2.5-7B-Instruct | Y | 2024.09.19 | 6.5 | 8.75 | - | 74.9 | - | - | - | 56.3 | 84.8 | 79.2 | 91.6 | 75.5 |

|

| 120 |

+

| Llama-3.1-8B | | 2024.07.23 | 7.0 | 8.3 | 5.7 | 71.5 | 55.2 | 55.8 | 66.7 | 37.1 | - | - | 84.5 | 51.9 |

|

| 121 |

+

| Llama-3.2-3B | | 2024.09.25 | 2.8 | - | - | 77.4 | - | - | 63.4 | - | - | - | 77.7 | 48.0 |

|

| 122 |

+

| Phi-3.5-mini-instruct | Y | 2024.08.23 | 3.6 | 8.6 | 5.7 | 49.4 | 46.1 | 46.9 | 69.0 | 47.4 | 62.8 | 69.6 | 86.2 | 48.5 |

|

| 123 |

+

| MiniCPM3-4B | Y | 2024.09.05 | 3.9 | 8.41 | 6.74 | 68.4 | 73.6 | 73.3 | 67.2 | - | 74.4 | 72.5 | 81.1 | 46.6 |

|

| 124 |

+

| Yi-1.5-6B-Chat | Y | 2024.05.11 | 5.5 | 7.50 | 6.20 | - | 74.2 | 74.7 | 61.0 | - | 64.0 | 70.9 | 78.9 | 40.5 |

|

| 125 |

+

| GLM-4-9B-chat | Y | 2024.06.04 | 8.2 | 8.35 | 7.01 | 64.5 | 75.6 | 71.5 | 72.4 | - | 71.8 | - | 79.6 | 50.6 |

|

| 126 |

+

| Baichuan2-13B-Base | | 2023.09.06 | 12.6 | - | 5.25 | - | 58.1 | 62.0 | 59.2 | - | 17.1 | 30.2 | 52.8 | 10.1 |

|

| 127 |

+

|

| 128 |

+

- The metrics for the Qwen2-1.5B model differ between the original paper and the Qwen2.5 report. Currently, the accuracy figures from the original paper are being used.

|

| 129 |

+

|

| 130 |

+

### Audio Understanding

|

| 131 |

+

| Model | Base model | Release Time | Fleurs test-zh | WenetSpeech test_net | WenetSpeech test_meeting |

|

| 132 |

+

|:----------------:|:------------------:|:-------------:|:--------------:|:--------------------:|:------------------------:|

|

| 133 |

+

| Megrez-3B-Omni | Megrez-3B-Instruct | 2024.12.16 | 10.8 | - | 16.4 |

|

| 134 |

+

| Whisper-large-v3 | - | 2023.11.06 | 12.4 | 17.5 | 30.8 |

|

| 135 |

+

| Qwen2-Audio-7B | Qwen2-7B | 2024.08.09 | 9 | 11 | 10.7 |

|

| 136 |

+

| Baichuan2-omni | Unknown-7B | 2024.10.11 | 7 | 6.9 | 8.4 |

|

| 137 |

+

| VITA | Mixtral 8x7B | 2024.08.12 | - | -/12.2(CER) | -/16.5(CER) |

|

| 138 |

+

|

| 139 |

+

### Inference Speed

|

| 140 |

+

| | image_tokens | prefill (tokens/s) | decode (tokens/s) |

|

| 141 |

+

|----------------|:------------:|:------------------:|:-----------------:|

|

| 142 |

+

| Megrez-3B-Omni | 448 | 6312.66 | 1294.9 |

|

| 143 |

+

| Qwen2-VL-2B | 1378 | 7349.39 | 685.66 |

|

| 144 |

+

| MiniCPM-V-2_6 | 448 | 2167.09 | 452.51 |

|

| 145 |

+

|

| 146 |

+

Setup:

|

| 147 |

+

- The testing environment utilizes an NVIDIA H100 GPU with vLLM. Each test includes 128 text tokens and a 720×1480 image as input, producing 128 output tokens, with `num_seqs` fixed at 8.

|

| 148 |

+

- Under this setup, the decoding speed of Qwen2-VL-2B is slower than Megrez-3B-Omni, despite having a smaller base LLM. This is due to the larger number of image tokens generated when encoding images of the specified size, which impacts actual inference speed.

|

| 149 |

+

|

| 150 |

+

## Quickstart

|

| 151 |

+

|

| 152 |

+

### Online Experience

|

| 153 |

+

[HF Chat Demo](https://huggingface.co/spaces/Infinigence/Megrez-3B-Omni)(recommend)

|

| 154 |

+

|

| 155 |

+

### Local Deployment

|

| 156 |

+

For environment installation and vLLM inference code deployment, refer to [Infini-Megrez-Omni](https://github.com/infinigence/Infini-Megrez-Omni)

|

| 157 |

+

|

| 158 |

+

Below is an example of using transformers for inference. By passing text, image, and audio in the content field, you can interact with various modalities and models.

|

| 159 |

+

```python

|

| 160 |

+

import torch

|

| 161 |

+

from transformers import AutoModelForCausalLM

|

| 162 |

+

|

| 163 |

+

path = "{{PATH_TO_PRETRAINED_MODEL}}" # Change this to the path of the model.

|

| 164 |

+

|

| 165 |

+

model = (

|

| 166 |

+

AutoModelForCausalLM.from_pretrained(

|

| 167 |

+

path,

|

| 168 |

+

trust_remote_code=True,

|

| 169 |

+

torch_dtype=torch.bfloat16,

|

| 170 |

+

attn_implementation="flash_attention_2",

|

| 171 |

+

)

|

| 172 |

+

.eval()

|

| 173 |

+

.cuda()

|

| 174 |

+

)

|

| 175 |

+

|

| 176 |

+

# Chat with text and image

|

| 177 |

+

messages = [

|

| 178 |

+

{

|

| 179 |

+

"role": "user",

|

| 180 |

+

"content": {

|

| 181 |

+

"text": "Please describe the content of the image.",

|

| 182 |

+

"image": "./data/sample_image.jpg",

|

| 183 |

+

},

|

| 184 |

+

},

|

| 185 |

+

]

|

| 186 |

+

|

| 187 |

+

# Chat with audio and image

|

| 188 |

+

messages = [

|

| 189 |

+

{

|

| 190 |

+

"role": "user",

|

| 191 |

+

"content": {

|

| 192 |

+

"image": "./data/sample_image.jpg",

|

| 193 |

+

"audio": "./data/sample_audio.m4a",

|

| 194 |

+

},

|

| 195 |

+

},

|

| 196 |

+

]

|

| 197 |

+

|

| 198 |

+

MAX_NEW_TOKENS = 100

|

| 199 |

+

response = model.chat(

|

| 200 |

+

messages,

|

| 201 |

+

sampling=False,

|

| 202 |

+

max_new_tokens=MAX_NEW_TOKENS,

|

| 203 |

+

temperature=0,

|

| 204 |

+

)

|

| 205 |

+

print(response)

|

| 206 |

+

```

|

| 207 |

+

|

| 208 |

+

## Notes

|

| 209 |

+

1. We recommend to put the images in the first round of chat for better inference results. There are no such restrictions for audio and text, which can be switched freely.

|

| 210 |

+

2. In the Automatic Speech Recognition (ASR) scenario, simply change content['text'] to "Convert speech to text."

|

| 211 |

+

3. In the OCR scenario, enabling sampling may introduce language model hallucinations which cause text changes. Users may consider disabling sampling in inference (sampling=False). However, disabling sampling may introduce model repetition.

|

| 212 |

+

|

| 213 |

+

|

| 214 |

+

## Open Source License and Usage Statement

|

| 215 |

+

- **License**: The code in this repository is open-sourced under the [Apache-2.0](https://www.apache.org/licenses/LICENSE-2.0) license.

|

| 216 |

+

- **Hallucination**: Large models inherently have hallucination issues. Users should not completely trust the content generated by the model.

|

| 217 |

+

- **Values and Safety**: While we have made every effort to ensure compliance of the data used during training, the large volume and complexity of the data may still lead to unforeseen issues. We disclaim any liability for problems arising from the use of this open-source model, including but not limited to data security issues, public opinion risks, or risks and problems caused by misleading, misuse, propagation, or improper utilization of the model.

|

assets/github-mark.png

ADDED

|

assets/megrez_logo.png

ADDED

|

assets/multitask.jpg

ADDED

|

assets/opencompass.jpg

ADDED

|

assets/wechat-group.jpg

ADDED

|

assets/wechat-official.jpg

ADDED

|

assets/wechat.jpg

ADDED

|

audio.py

ADDED

|

@@ -0,0 +1,228 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# -*- encoding: utf-8 -*-

|

| 2 |

+

# File: audio.py

|

| 3 |

+

# Description: None

|

| 4 |

+

|

| 5 |

+

|

| 6 |

+

from typing import Iterable, List, Optional

|

| 7 |

+

|

| 8 |

+

import numpy as np

|

| 9 |

+

import torch

|

| 10 |

+

import torch.nn as nn

|

| 11 |

+

import torch.nn.functional as F

|

| 12 |

+

from torch import Tensor

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

class LayerNorm(nn.LayerNorm):

|

| 16 |

+

def forward(self, x: Tensor) -> Tensor:

|

| 17 |

+

return super().forward(x).type(x.dtype)

|

| 18 |

+

|

| 19 |

+

|

| 20 |

+

class Linear(nn.Linear):

|

| 21 |

+

def forward(self, x: Tensor) -> Tensor:

|

| 22 |

+

return F.linear(

|

| 23 |

+

x,

|

| 24 |

+

self.weight.to(x.dtype),

|

| 25 |

+

None if self.bias is None else self.bias.to(x.dtype),

|

| 26 |

+

)

|

| 27 |

+

|

| 28 |

+

|

| 29 |

+

class Conv1d(nn.Conv1d):

|

| 30 |

+

def _conv_forward(self, x: Tensor, weight: Tensor, bias: Optional[Tensor]) -> Tensor:

|

| 31 |

+

return super()._conv_forward(x, weight.to(x.dtype), None if bias is None else bias.to(x.dtype))

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

def sinusoids(length, channels, max_timescale=10000):

|

| 35 |

+

"""Returns sinusoids for positional embedding"""

|

| 36 |

+

assert channels % 2 == 0

|

| 37 |

+

log_timescale_increment = np.log(max_timescale) / (channels // 2 - 1)

|

| 38 |

+

inv_timescales = torch.exp(-log_timescale_increment * torch.arange(channels // 2))

|

| 39 |

+

scaled_time = torch.arange(length)[:, np.newaxis] * inv_timescales[np.newaxis, :]

|

| 40 |

+

return torch.cat([torch.sin(scaled_time), torch.cos(scaled_time)], dim=1)

|

| 41 |

+

|

| 42 |

+

|

| 43 |

+

class MultiHeadAttention(nn.Module):

|

| 44 |

+

def __init__(self, n_state: int, n_head: int):

|

| 45 |

+

super().__init__()

|

| 46 |

+

self.n_head = n_head

|

| 47 |

+

self.query = Linear(n_state, n_state)

|

| 48 |

+

self.key = Linear(n_state, n_state, bias=False)

|

| 49 |

+

self.value = Linear(n_state, n_state)

|

| 50 |

+

self.out = Linear(n_state, n_state)

|

| 51 |

+

|

| 52 |

+

def forward(

|

| 53 |

+

self,

|

| 54 |

+

x: Tensor,

|

| 55 |

+

xa: Optional[Tensor] = None,

|

| 56 |

+

mask: Optional[Tensor] = None,

|

| 57 |

+

kv_cache: Optional[dict] = None,

|

| 58 |

+

):

|

| 59 |

+

q = self.query(x)

|

| 60 |

+

|

| 61 |

+

if kv_cache is None or xa is None or self.key not in kv_cache:

|

| 62 |

+

# hooks, if installed (i.e. kv_cache is not None), will prepend the cached kv tensors;

|

| 63 |

+

# otherwise, perform key/value projections for self- or cross-attention as usual.

|

| 64 |

+

k = self.key(x if xa is None else xa)

|

| 65 |

+

v = self.value(x if xa is None else xa)

|

| 66 |

+

else:

|

| 67 |

+

# for cross-attention, calculate keys and values once and reuse in subsequent calls.

|

| 68 |

+

k = kv_cache[self.key]

|

| 69 |

+

v = kv_cache[self.value]

|

| 70 |

+

|

| 71 |

+

wv, qk = self.qkv_attention(q, k, v, mask)

|

| 72 |

+

return self.out(wv), qk

|

| 73 |

+

|

| 74 |

+

def qkv_attention(self, q: Tensor, k: Tensor, v: Tensor, mask: Optional[Tensor] = None):

|

| 75 |

+

n_batch, n_ctx, n_state = q.shape

|

| 76 |

+

scale = (n_state // self.n_head) ** -0.25

|

| 77 |

+

q = q.view(*q.shape[:2], self.n_head, -1).permute(0, 2, 1, 3) * scale

|

| 78 |

+

k = k.view(*k.shape[:2], self.n_head, -1).permute(0, 2, 3, 1) * scale

|

| 79 |

+

v = v.view(*v.shape[:2], self.n_head, -1).permute(0, 2, 1, 3)

|

| 80 |

+

|

| 81 |

+

qk = q @ k

|

| 82 |

+

if mask is not None:

|

| 83 |

+

qk += mask

|

| 84 |

+

|

| 85 |

+

w = F.softmax(qk, dim=-1).to(q.dtype)

|

| 86 |

+

return (w @ v).permute(0, 2, 1, 3).flatten(start_dim=2), qk.detach()

|

| 87 |

+

|

| 88 |

+

|

| 89 |

+

class ResidualAttentionBlock(nn.Module):

|

| 90 |

+

def __init__(self, n_state: int, n_head: int, cross_attention: bool = False):

|

| 91 |

+

super().__init__()

|

| 92 |

+

|

| 93 |

+

self.attn = MultiHeadAttention(n_state, n_head)

|

| 94 |

+

self.attn_ln = LayerNorm(n_state)

|

| 95 |

+

|

| 96 |

+

self.cross_attn = MultiHeadAttention(n_state, n_head) if cross_attention else None

|

| 97 |

+

self.cross_attn_ln = LayerNorm(n_state) if cross_attention else None

|

| 98 |

+

|

| 99 |

+

n_mlp = n_state * 4

|

| 100 |

+

self.mlp = nn.Sequential(Linear(n_state, n_mlp), nn.GELU(), Linear(n_mlp, n_state))

|

| 101 |

+

self.mlp_ln = LayerNorm(n_state)

|

| 102 |

+

|

| 103 |

+

def forward(

|

| 104 |

+

self,

|

| 105 |

+

x: Tensor,

|

| 106 |

+

xa: Optional[Tensor] = None,

|

| 107 |

+

mask: Optional[Tensor] = None,

|

| 108 |

+

kv_cache: Optional[dict] = None,

|

| 109 |

+

):

|

| 110 |

+

x = x + self.attn(self.attn_ln(x), mask=mask, kv_cache=kv_cache)[0]

|

| 111 |

+

if self.cross_attn:

|

| 112 |

+

x = x + self.cross_attn(self.cross_attn_ln(x), xa, kv_cache=kv_cache)[0]

|

| 113 |

+

x = x + self.mlp(self.mlp_ln(x))

|

| 114 |

+

return x

|

| 115 |

+

|

| 116 |

+

|

| 117 |

+

class AudioEncoder(nn.Module):

|

| 118 |

+

def __init__(

|

| 119 |

+

self,

|

| 120 |

+

n_mels: int,

|

| 121 |

+

n_ctx: int,

|

| 122 |

+

n_state: int,

|

| 123 |

+

n_head: int,

|

| 124 |

+

n_layer: int,

|

| 125 |

+

output_dim: int = 512,

|

| 126 |

+

avg_pool: bool = True,

|

| 127 |

+

add_audio_bos_eos_token: bool = True,

|

| 128 |

+

**kwargs,

|

| 129 |

+

):

|

| 130 |

+

super().__init__()

|

| 131 |

+

self.conv1 = Conv1d(n_mels, n_state, kernel_size=3, padding=1)

|

| 132 |

+

self.conv2 = Conv1d(n_state, n_state, kernel_size=3, stride=2, padding=1)

|

| 133 |

+

self.register_buffer("positional_embedding", sinusoids(n_ctx, n_state))

|

| 134 |

+

|

| 135 |

+

self.blocks: Iterable[ResidualAttentionBlock] = nn.ModuleList(

|

| 136 |

+

[ResidualAttentionBlock(n_state, n_head) for _ in range(n_layer)]

|

| 137 |

+

)

|

| 138 |

+

self.ln_post = LayerNorm(n_state)

|

| 139 |

+

|

| 140 |

+

if avg_pool:

|

| 141 |

+

self.avg_pooler = nn.AvgPool1d(2, stride=2)

|

| 142 |

+

else:

|

| 143 |

+

self.avg_pooler = None

|

| 144 |

+

self.proj = nn.Linear(n_state, output_dim)

|

| 145 |

+

if add_audio_bos_eos_token:

|

| 146 |

+

self.audio_bos_eos_token = nn.Embedding(2, output_dim)

|

| 147 |

+

else:

|

| 148 |

+

self.audio_bos_eos_token = None

|

| 149 |

+

self.output_dim = output_dim

|

| 150 |

+

self.n_head = n_head

|

| 151 |

+

|

| 152 |

+

def forward(self, x: Tensor, padding_mask: Tensor = None, audio_lengths: Tensor = None):

|

| 153 |

+

"""

|

| 154 |

+

x : torch.Tensor, shape = (batch_size, n_mels, n_ctx)

|

| 155 |

+

the mel spectrogram of the audio

|

| 156 |

+

"""

|

| 157 |

+

x = x.to(dtype=self.conv1.weight.dtype, device=self.conv1.weight.device)

|

| 158 |

+

if audio_lengths is not None:

|

| 159 |

+

input_mel_len = audio_lengths[:, 0] * 2

|

| 160 |

+

max_mel_len_in_batch = input_mel_len.max()

|

| 161 |

+

x = x[:, :, :max_mel_len_in_batch]

|

| 162 |

+

x = F.gelu(self.conv1(x))

|

| 163 |

+

x = F.gelu(self.conv2(x))

|

| 164 |

+

x = x.permute(0, 2, 1) # B, L, D

|

| 165 |

+

bsz = x.size(0)

|

| 166 |

+

src_len = x.size(1)

|

| 167 |

+

|

| 168 |

+

self.input_positional_embedding = self.positional_embedding[:src_len]

|

| 169 |

+

assert (

|

| 170 |

+

x.shape[1:] == self.input_positional_embedding.shape

|

| 171 |

+

), f"incorrect audio shape: {x.shape[1:], self.input_positional_embedding.shape}"

|

| 172 |

+

x = (x + self.input_positional_embedding).to(x.dtype)

|

| 173 |

+

if padding_mask is not None:

|

| 174 |

+

padding_mask = padding_mask.to(dtype=self.conv1.weight.dtype, device=self.conv1.weight.device)

|

| 175 |

+

batch_src_len = padding_mask.size(1)

|

| 176 |

+

x = x[:, :batch_src_len, :]

|

| 177 |

+

padding_mask = padding_mask.view(bsz, -1, batch_src_len)

|

| 178 |

+

padding_mask_ = padding_mask.all(1)

|

| 179 |

+

x[padding_mask_] = 0

|

| 180 |

+

key_padding_mask = (

|

| 181 |

+

padding_mask_.view(bsz, 1, 1, batch_src_len)

|

| 182 |

+

.expand(-1, self.n_head, -1, -1)

|

| 183 |

+

.reshape(bsz, self.n_head, 1, batch_src_len)

|

| 184 |

+

)

|

| 185 |

+

new_padding_mask = torch.zeros_like(key_padding_mask, dtype=x.dtype)

|

| 186 |

+

padding_mask = new_padding_mask.masked_fill(key_padding_mask, float("-inf"))

|

| 187 |

+

|

| 188 |

+

for block in self.blocks:

|

| 189 |

+

x = block(x, mask=padding_mask)

|

| 190 |

+

|

| 191 |

+

if self.avg_pooler:

|

| 192 |

+

x = x.permute(0, 2, 1)

|

| 193 |

+

x = self.avg_pooler(x)

|

| 194 |

+

x = x.permute(0, 2, 1)

|

| 195 |

+

|

| 196 |

+

x = self.ln_post(x)

|

| 197 |

+

x = self.proj(x)

|

| 198 |

+

|

| 199 |

+

if self.audio_bos_eos_token is not None:

|

| 200 |

+

bos = self.audio_bos_eos_token.weight[0][None, :]

|

| 201 |

+

eos = self.audio_bos_eos_token.weight[1][None, :]

|

| 202 |

+

else:

|

| 203 |

+

bos, eos = None, None

|

| 204 |

+

return x, bos, eos

|

| 205 |

+

|

| 206 |

+

def encode(

|

| 207 |

+

self,

|

| 208 |

+

input_audios: Tensor,

|

| 209 |

+

input_audio_lengths: Tensor,

|

| 210 |

+

audio_span_tokens: List,

|

| 211 |

+

):

|

| 212 |

+

real_input_audio_lens = input_audio_lengths[:, 0].tolist()

|

| 213 |

+

max_len_in_batch = max(real_input_audio_lens)

|

| 214 |

+

padding_mask = torch.ones([input_audios.size(0), max_len_in_batch]).to(

|

| 215 |

+

dtype=self.conv1.weight.dtype, device=self.conv1.weight.device

|

| 216 |

+

)

|

| 217 |

+

for index in range(len(input_audios)):

|

| 218 |

+

padding_mask[index, : input_audio_lengths[index][0].item()] = 0

|

| 219 |

+

x, bos, eos = self(input_audios, padding_mask, input_audio_lengths)

|

| 220 |

+

output_audios = []

|

| 221 |

+

for i in range(len(audio_span_tokens)):

|

| 222 |

+

audio_span = audio_span_tokens[i]

|

| 223 |

+

audio = x[i][: audio_span - 2]

|

| 224 |

+

if bos is not None:

|

| 225 |

+

audio = torch.concat([bos, audio, eos])

|

| 226 |

+

assert len(audio) == audio_span

|

| 227 |

+

output_audios.append(audio)

|

| 228 |

+

return output_audios

|

config.json

ADDED

|

@@ -0,0 +1,125 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"_name_or_path": "/mnt/public/algm/lizhiyuan/models/megrez-o-release/pretrain_audio_stage3_vision_stage3_merge",

|

| 3 |

+

"architectures": [

|

| 4 |

+

"MegrezO"

|

| 5 |

+

],

|

| 6 |

+

"attention_bias": false,

|

| 7 |

+

"attention_dropout": 0.0,

|

| 8 |

+

"audio_config": {

|

| 9 |

+

"add_audio_bos_eos_token": true,

|

| 10 |

+

"avg_pool": true,

|

| 11 |

+

"n_ctx": 1500,

|

| 12 |

+

"n_head": 20,

|

| 13 |

+

"n_layer": 32,

|

| 14 |

+

"n_mels": 128,

|

| 15 |

+

"n_state": 1280,

|

| 16 |

+

"output_dim": 2560

|

| 17 |

+

},

|

| 18 |

+

"auto_map": {

|

| 19 |

+

"AutoModel": "modeling_megrezo.MegrezO",

|

| 20 |

+

"AutoModelForCausalLM": "modeling_megrezo.MegrezO",

|

| 21 |

+

"AutoConfig": "configuration_megrezo.MegrezOConfig",

|

| 22 |

+

"AutoProcessor": "processing_megrezo.MegrezOProcessor",

|

| 23 |

+

"AutoImageProcessor": "image_processing_megrezo.MegrezOImageProcessor"

|

| 24 |

+

},

|

| 25 |

+

"bos_token_id": null,

|

| 26 |

+

"drop_vision_last_layer": false,

|

| 27 |

+

"eos_token_id": 120005,

|

| 28 |

+

"hidden_act": "silu",

|

| 29 |

+

"hidden_size": 2560,

|

| 30 |

+

"initializer_range": 0.02,

|